Your inbox overflows while vendors promise their agentic AI will handle everything autonomously. The pressure to integrate this technology feels overwhelming, yet the journey to implementation carries significant risks. These autonomous systems can become legal liabilities, create operational chaos, and generate hidden expenses that upend careers and cripple businesses.

Intelligence amplification offers a compelling alternative. By blending AI's analytical capabilities with human oversight, this approach minimizes threats while unlocking substantial benefits.

The cracks are already showing

Agentic AI promises autonomy but delivers liability, chaos, and cost. 82% of organizations fear their automation will tip them into digital chaos as business process complexity increases. Most companies juggle dozens of endpoints for a single process, and every new agent widens the blast radius. One misstep triggers compliance failures, reputational damage, and infrastructure bills nobody budgeted for.

Shadow projects make everything worse. We recently heard from a CIO who discovered teams piping customer data into public AI tools just to hit deadlines. During an internal audit, he found an entire unsanctioned bot farm running on personal laptops. When governance lags behind ambition, rogue agents multiply. Another global enterprise tried banning generative AI completely without offering safe alternatives, so employees simply routed tasks through personal accounts. Within weeks, sensitive data leaked and compliance teams entered crisis mode.

What vendors hide behind the pitch

Vendors promise minimal oversight, yet the reality involves dedicated staff for prompt engineering, exception handling, and fixing errors. So-called autonomy demands continuous human involvement, whatever the marketing claims. Promises of seamless integration translate to months of costly engineering work and data cleaning, and the cost iceberg hiding beneath every pilot project becomes apparent only after contracts are signed.

The autonomy illusion runs deeper than most realize. Because these systems need constant oversight for prompts, exception handling, and decision validation, they increase organizational burden rather than reducing it. Companies deploying AI agents also retain responsibility for those agents' actions under agency law principles, so they become liable for any legal missteps, including intellectual property violations and privacy law breaches.

Consider what happens at scale. An AI system making 10,000 decisions daily with 99.9% accuracy still results in 10 critical errors every day. Since autonomous systems act continuously without pausing for human review, those errors compound. When decisions emerge from black boxes, explaining them to regulators or courts becomes impossible, yet you remain legally responsible for every outcome.
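The arithmetic behind that figure can be checked with a minimal sketch. The function name `expected_daily_errors` is hypothetical, and the calculation assumes every decision carries the same error rate; it says nothing about how severe each error is.

```python
def expected_daily_errors(decisions_per_day: int, accuracy: float) -> float:
    """Expected number of wrong decisions per day at a given accuracy."""
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    # Error rate is simply the complement of accuracy.
    return decisions_per_day * (1.0 - accuracy)


daily = expected_daily_errors(10_000, 0.999)
print(round(daily))        # 10 errors per day
print(round(daily * 365))  # 3650 errors per year if nothing is caught
```

Even a tenfold improvement in accuracy, to 99.99%, would still leave about one error per day at this volume.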

Hidden costs typically exceed visible ones by five to ten times once you scale beyond proof of concept. Licensing fees represent just the beginning. Infrastructure demands balloon when large language models burn through GPU hours. Human oversight requires AI ethicists, prompt engineers, and process architects. Error remediation creates ongoing costs through customer support, refunds, and incident triage. Legal expenses mount through audits and outside counsel since you own every action the agent takes.

Understanding the risk spectrum

Autonomy operates on a sliding scale where higher stakes demand tighter control. Some decisions carry minimal risk and work well with human approval: AI-drafted emails that you still review and send yourself through Write with AI, calendar coordination, or pulling basic data for internal dashboards. These tasks need clear scope, instant human review, and simple rollback options.

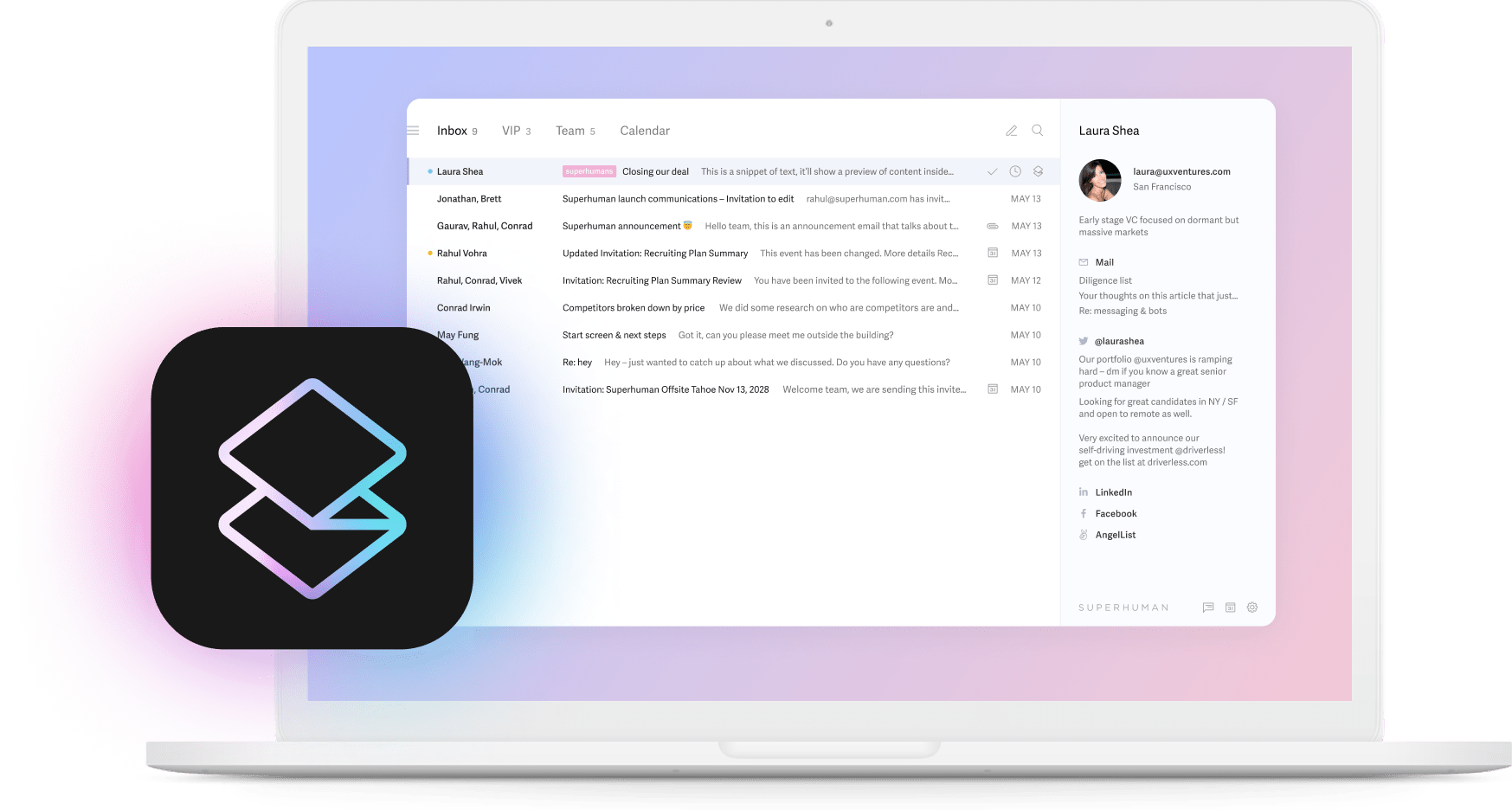

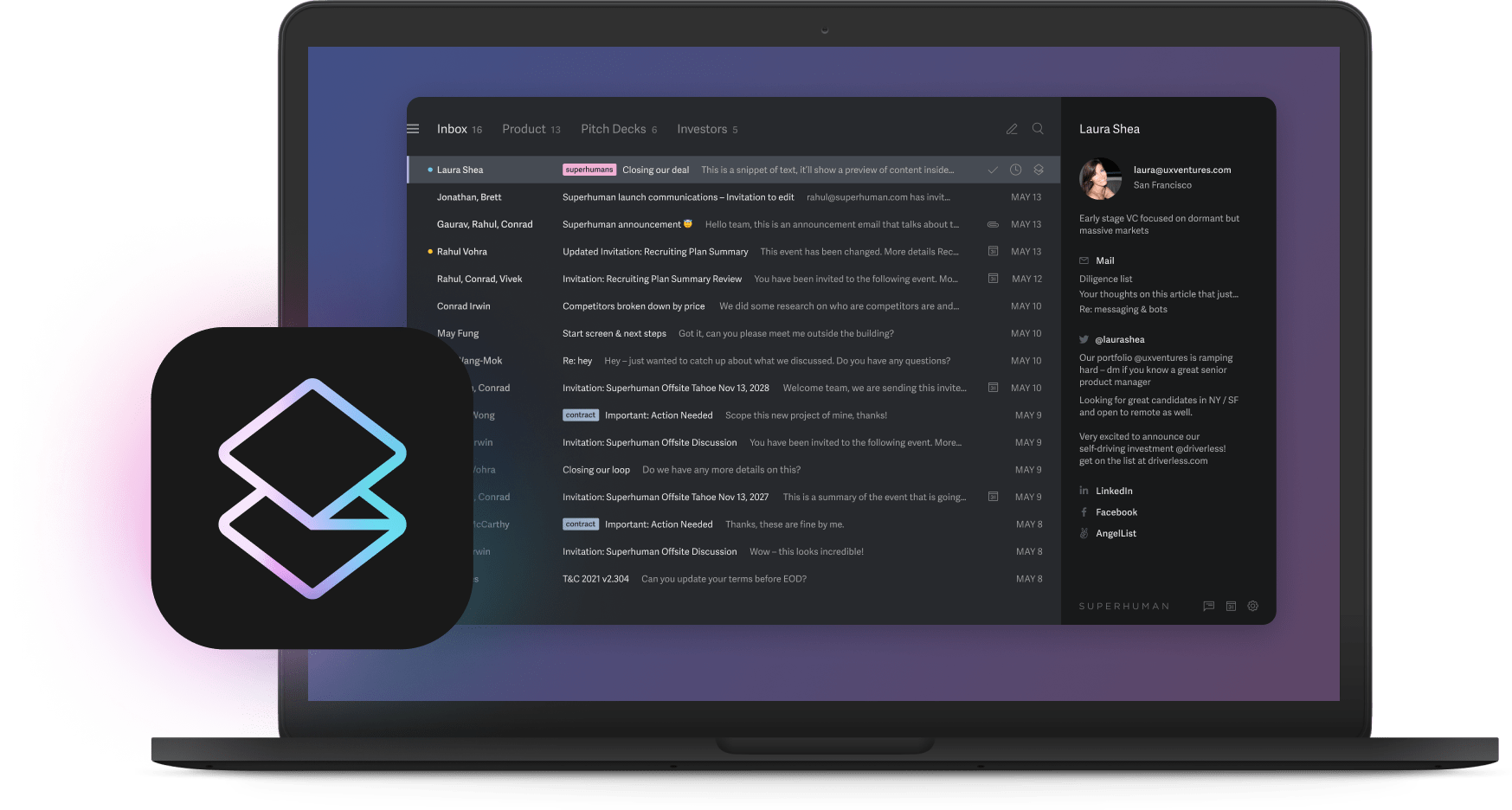

Medium-risk scenarios require stronger guardrails but remain workable. Customer chatbots that escalate to humans after specific triggers, document summaries with expert sign-off, and routine reports flagging anomalies need real-time monitoring and hard limits on spending or data access. With Split Inbox automatically prioritizing what matters most, you maintain oversight while AI assists with organization.

High-risk decisions should never run autonomously. Final legal rulings, high-value trades, medical diagnoses, and hiring decisions must keep AI as adviser only. When lives, money, or reputations hang in the balance, those ten daily errors from our earlier example become career-ending disasters. Risk climbs steeply with decision importance, regulatory scrutiny, and potential harm.
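One way to make the three tiers concrete is a small lookup that maps each kind of task to the control it requires. This is only a sketch: the task names, the `TASK_TIERS` table, and the `required_control` function are illustrative assumptions drawn from the examples in this section, not a real policy engine.

```python
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical task names, taken from the examples in the text.
TASK_TIERS = {
    "email_draft": RiskTier.LOW,
    "calendar_coordination": RiskTier.LOW,
    "dashboard_query": RiskTier.LOW,
    "customer_chatbot": RiskTier.MEDIUM,
    "document_summary": RiskTier.MEDIUM,
    "anomaly_report": RiskTier.MEDIUM,
    "legal_ruling": RiskTier.HIGH,
    "high_value_trade": RiskTier.HIGH,
    "medical_diagnosis": RiskTier.HIGH,
    "hiring_decision": RiskTier.HIGH,
}

CONTROLS = {
    RiskTier.LOW: "clear scope, instant human review, simple rollback",
    RiskTier.MEDIUM: "real-time monitoring, hard limits, human escalation",
    RiskTier.HIGH: "AI advises only; a human makes the decision",
}


def required_control(task_type: str) -> str:
    """Return the control for a task; unknown tasks get the strictest tier."""
    tier = TASK_TIERS.get(task_type, RiskTier.HIGH)
    return CONTROLS[tier]


print(required_control("email_draft"))
print(required_control("unlisted_task"))
```

Defaulting unknown tasks to the high-risk tier is a deliberate choice in this sketch: anything nobody has classified yet gets the tightest control until someone reviews it.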

Safe experimentation without the disaster

If you must experiment with autonomous AI, follow three core principles that protect your organization while you learn.

First, establish comprehensive legal and compliance review before any code runs. Map every regulation the agent might touch. Under agency law, your company owns every decision the agent makes, so define authority limits now and draft fallback procedures that keep you within regulatory boundaries.

Second, build kill switches and monitoring from day one. Create real-time dashboards, automated alerts, and manual shutdown paths. These aren't optional safety features but essential infrastructure that prevents runaway processes and compliance violations.
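As a rough illustration, a kill switch can be as simple as a shared flag that the agent loop checks before every action, tripped either by an automated limit or by a person. The `KillSwitch` class and its spend limit below are hypothetical; a production setup would also need dashboards, durable alerting, and thread-safe accounting.

```python
import threading


class KillSwitch:
    """Hypothetical guard that an agent loop consults before every action."""

    def __init__(self, max_spend: float) -> None:
        self.max_spend = max_spend
        self.spent = 0.0
        self.alerts = []
        # An Event lets an operator on another thread stop the loop.
        self._stopped = threading.Event()

    def record_spend(self, amount: float) -> None:
        """Automated path: trip the switch once the spend limit is exceeded."""
        self.spent += amount
        if self.spent > self.max_spend:
            self.trip(f"spend {self.spent:.2f} exceeded limit {self.max_spend:.2f}")

    def trip(self, reason: str) -> None:
        """Manual or automated shutdown; the reason doubles as an alert."""
        self.alerts.append(reason)
        self._stopped.set()

    def allows_action(self) -> bool:
        return not self._stopped.is_set()


switch = KillSwitch(max_spend=100.0)
completed = 0
for cost in [40.0, 40.0, 40.0, 40.0]:
    if not switch.allows_action():
        break  # the switch has tripped, so the agent stops here
    completed += 1
    switch.record_spend(cost)

print(completed, switch.alerts)
```

In this run the third action pushes spending past the limit, so the fourth never starts. Calling `switch.trip("manual stop")` from anywhere else has the same effect.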

Third, isolate the agent completely in a sandbox environment. Use synthetic data and edge cases to observe behavior when inputs get messy. Require human approval for every external action, document all exceptions and delays, and track hidden spending on compute, prompt tuning, and oversight. If benefits don't clearly outweigh the full cost iceberg within six weeks, shut it down and revisit when the technology matures.
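The approval requirement can be sketched as a gate that wraps every external action and records each decision. `ApprovalGate` and its `approver` callback are hypothetical stand-ins; in a real pilot the approver would be a person responding through a review queue, not a function.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ApprovalGate:
    """Hypothetical wrapper: no external action runs without approval."""

    approver: Callable[[str], bool]
    log: list = field(default_factory=list)

    def run(self, description: str, action: Callable[[], str]) -> Optional[str]:
        approved = self.approver(description)
        # Every request is logged, approved or not, for later audit.
        self.log.append((description, approved))
        if not approved:
            return None
        return action()


# Stand-in approver for the sandbox: only read-only actions pass.
gate = ApprovalGate(approver=lambda desc: desc.startswith("read"))
print(gate.run("read synthetic customer record", lambda: "ok"))
print(gate.run("send email to customer", lambda: "sent"))
print(gate.log)
```

The log of approvals and refusals is what lets you document exceptions and delays at the end of the trial.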

The intelligence amplification advantage

Intelligence amplification keeps you in control while AI handles repetitive work. The intelligence layer processes data at incredible speed, spots patterns, and flags what matters most, but you retain decision authority. Since decisions stay human, legal exposure drops and explaining choices to stakeholders or regulators becomes straightforward.

Auto Summarize condenses long email threads instantly, helping you grasp context quickly without losing control. Instant Reply cuts through information overload by suggesting responses you can edit or approve. AI drafts complete replies from just a few words while maintaining your voice and tone. Since professionals spend more than half of every workday in email, messaging, and calendar, these tools target where augmentation delivers the most value.

Team collaboration also improves without autonomous risk. Shared Conversations and Team Comments enable rapid internal decision-making directly within email, eliminating the need to toggle between Slack, docs, or CRMs. Snippets let you quickly insert prewritten templates and share effective replies across teams to scale best practices while maintaining consistency.

Companies like Rilla, Brex, Hebbia, and Go Nimbly have discovered this augmentation approach delivers measurable results without the downsides of full autonomy. Superhuman customers using AI features save 37% more time than those who don't. Teams save four hours per person every week and respond 12 hours faster. Top performers are already 14% more productive through augmentation that amplifies their capabilities rather than replacing their judgment.

Why autonomous strategies inevitably collapse

Autonomous AI fails when technical uncertainty meets corporate reality. The technology hallucinates, forgets context, and obscures its reasoning process. Explainability tools exist but remain brittle and unreliable. When you can't attribute responsibility or explain decisions, agency law still assigns liability to your company. Courts impose objective standards of care on risky agents, turning every unexplained misstep into potential litigation.

Business infrastructure compounds these fundamental problems. Legacy systems and siloed data choke autonomy, forcing expensive integration projects that rarely deliver promised returns. Once you scale beyond proof of concept, hidden expenses in compute, orchestration, and human oversight multiply beyond initial projections. The predictable fallout includes compliance violations, audit gaps, and customer backlash that erase any projected ROI while damaging brand reputation.

Making the strategic choice

Focus on measurable business outcomes rather than technology promises when evaluating AI strategies. Industry-leading companies are 3x more likely to report significant productivity gains from AI, but their success comes from thoughtful implementation focused on augmentation, not autonomous agents. Companies using the right AI tools report saving at least one full workday every single week through intelligence amplification.

The evidence consistently points toward augmentation over autonomy. While 66% expect at least a 3x increase in productivity over the next five years, achieving these gains requires maintaining human oversight while AI amplifies capabilities. This approach creates sustainable competitive advantage without catastrophic downside risk.

The most successful organizations implement AI with clear strategy, questioning vendor promises about autonomy and choosing to amplify human intelligence instead. Tools like Superhuman demonstrate how AI should enhance rather than replace human judgment. By keeping control, reducing risk, and capturing AI's benefits responsibly, you position your organization for genuine productivity gains without betting the company on unproven autonomous systems.