Your enterprise is running another AI maturity assessment. Six months of workshops, frameworks, and scorecards. Meanwhile, your competitor just shipped their third AI feature this month.

While you're checking boxes on maturity models, they're checking growth metrics. With 87% believing AI is necessary for competitive advantage, the question isn't how mature your AI adoption is. It's how fast you're moving.

The gap between perfect and profitable

When a new AI opportunity appears, enterprises reach for governance workbooks. Unicorns push code to production. The difference defines who wins markets.

Enterprises love their five-level maturity matrices. They progress methodically from "awareness" to "transformational," releasing updates quarterly or annually. Success means audit compliance and high maturity scores. Every decision filters through committees, risk assessments, and documentation requirements.

Unicorns operate differently. They launch, learn, and scale within a single sprint. Success means revenue lift in the same quarter. While enterprises optimize for "multi-moment experiences," unicorns ship AI tools that save teams 4 hours a week and respond in milliseconds.

This isn't about being reckless. It's about recognizing that perfect maturity scores don't pay bills. Customer adoption does. Revenue growth does. Market leadership does. The companies winning with AI aren't the ones with the best frameworks. They're the ones shipping features customers actually use.

Where maturity models came from and why they fail today

Traditional maturity models emerged when technology moved slowly and predictably. They borrowed concepts from manufacturing quality control, assuming linear progression through defined stages made sense for every industry.

These frameworks worked when product cycles spanned years. You could afford six-month planning phases when competitors moved at the same glacial pace. Documentation mattered more than deployment because deployment happened so rarely.

But AI broke those assumptions. Product cycles compressed from years to weeks. What worked for enterprise resource planning fails for machine learning. Maturity models prioritize stability over speed and assume resources most startups lack, such as dedicated data teams, cross-functional councils, and heavyweight governance structures.

The mismatch becomes obvious when you apply these frameworks to Series A through C companies. They need to ship features fast to survive, not climb methodical maturity ladders. Every month spent on assessments is a month competitors spend capturing market share.

The velocity paradox that breaks traditional thinking

Teams scoring low on AI maturity assessments often dominate their markets. While consultants label certain countries as "AI pioneers," the fastest-growing companies rarely appear in readiness reports. They're too busy building to fill out surveys.

This paradox reveals what traditional models miss. They measure process compliance, not business impact. They count governance committees, not customer satisfaction. They reward documentation over working software that customers love.

Real AI success looks different. It's shipping new features every sprint. It's measuring impact daily instead of quarterly. It's iterating with live customers instead of theoretical frameworks. Companies following this path see 3x productivity gains while others debate maturity levels.

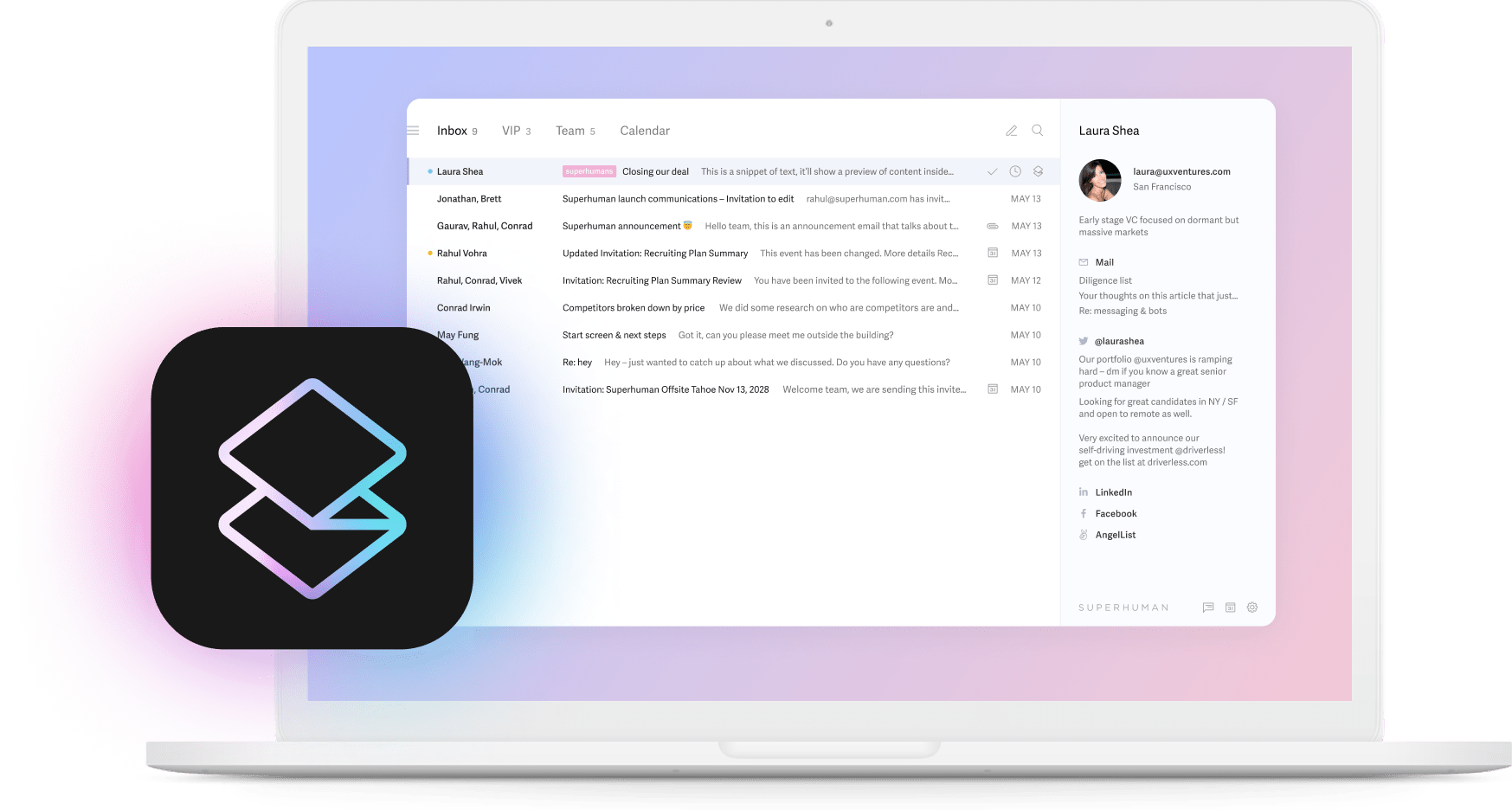

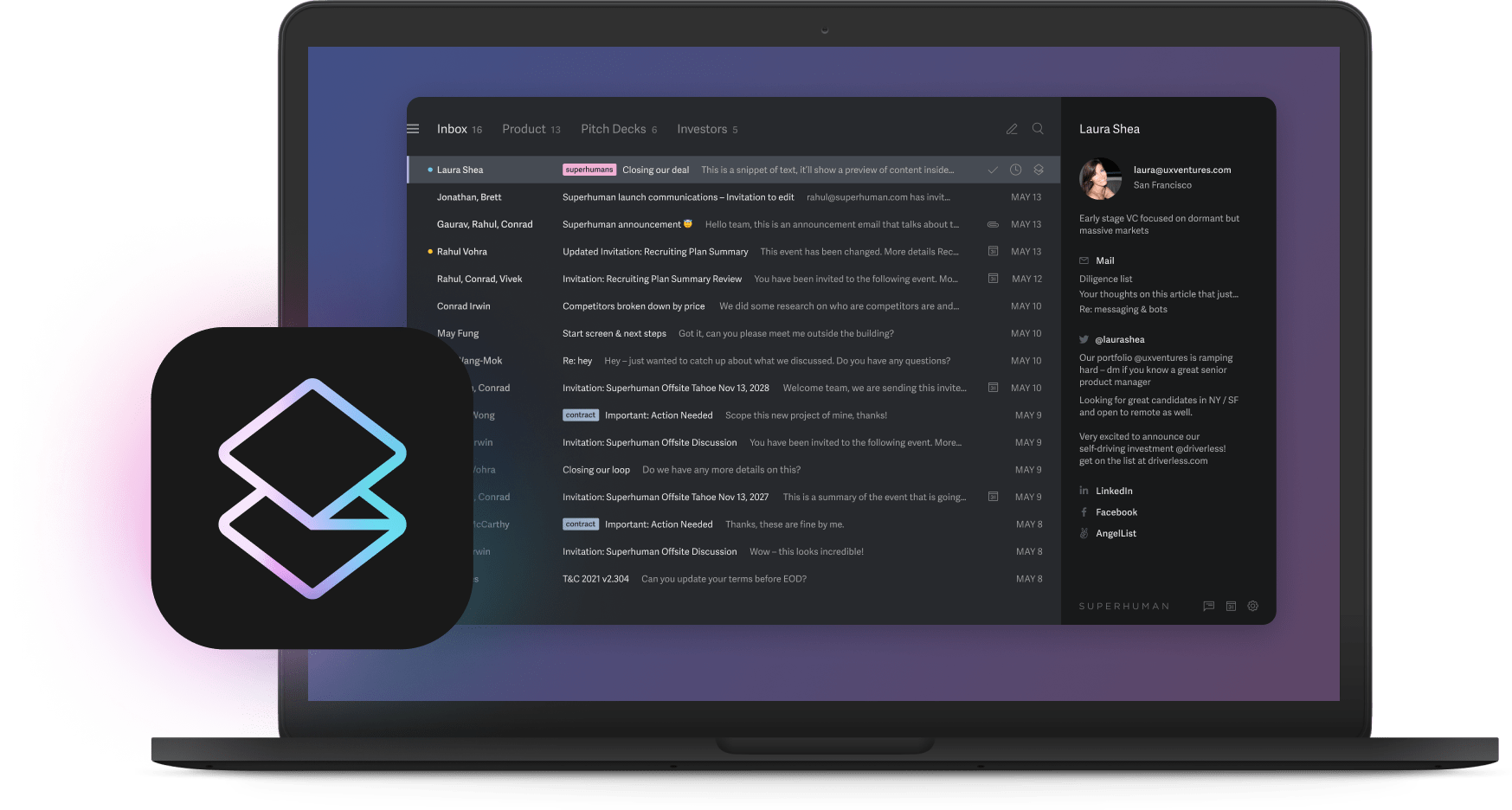

The best AI tools demonstrate this principle. Superhuman's Split Inbox automatically separates important emails from everything else, while Instant Reply drafts responses in your voice. These features went from concept to customer inboxes in weeks, not years. Every action completes in under 100 milliseconds because speed matters more than scorecards.

What unicorns do instead of maturity models

Unicorns treat AI as a continuous sprint rather than a certification process. They build and govern simultaneously, jumping to production when metrics prove value. Three habits define their approach.

First, they skip stages ruthlessly. When a model hits target metrics, it moves to live testing immediately. No waiting for the next review cycle. No climbing every rung of the maturity ladder. If it works, it ships.

Second, they obsess over real-time feedback. Dashboards track usage, errors, and revenue impact continuously. Decisions happen daily based on actual customer behavior, not quarterly based on theoretical frameworks. This tight feedback loop accelerates learning and improvement.

Third, they build competitive moats through proprietary data. By training models on unique signals like customer click patterns or domain-specific text, they create AI that competitors can't replicate. This focus on differentiation beats any maturity score.
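The first habit, "if it works, it ships," can be reduced to a single gate. Below is a minimal sketch, assuming the team agrees on target thresholds before building and each candidate model reports a couple of offline metrics. The metric names (`accuracy`, `p95_latency_ms`), the `Targets` type, and the threshold values are hypothetical placeholders, not a prescribed standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Targets:
    # Hypothetical thresholds agreed on before the work starts.
    min_accuracy: float
    max_p95_latency_ms: float


def ready_for_live_test(metrics: dict, targets: Targets) -> bool:
    """Return True as soon as a candidate model meets every agreed target.

    There is deliberately no "next review cycle" check: hitting the
    metrics is the only gate to live testing. Missing metrics fail.
    """
    accuracy = metrics.get("accuracy", 0.0)
    latency = metrics.get("p95_latency_ms", float("inf"))
    return accuracy >= targets.min_accuracy and latency <= targets.max_p95_latency_ms


targets = Targets(min_accuracy=0.90, max_p95_latency_ms=100.0)
print(ready_for_live_test({"accuracy": 0.93, "p95_latency_ms": 85.0}, targets))   # True
print(ready_for_live_test({"accuracy": 0.93, "p95_latency_ms": 140.0}, targets))  # False
```

Defaulting missing metrics to failing values keeps the gate strict: a model that never reported latency cannot slip through.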

A typical unicorn AI rollout happens in 90 days. Week one defines the single workflow with maximum customer impact. Week three ships an MVP to early adopters. Week six refines accuracy based on usage data. Week twelve sees full release with measurable revenue impact. The next sprint starts before the first one finishes.

The hidden costs of chasing maturity

Chasing traditional AI maturity creates five expensive problems for high-growth companies, each one compounding the others.

Opportunity cost hits first and hardest. Every month spent aligning to frameworks is a month not shipping features. Competitors launch multiple innovations while you're still documenting governance procedures. Lost positioning translates directly to lost revenue.

Talent attrition follows quickly. Builders and innovators thrive on shipping, not paperwork. The best engineers leave for companies that let them create rather than comply. Your remaining team spends more energy on process than product.

Innovation theater replaces real progress. Teams create impressive slides about AI strategy while actual implementation stalls. Stakeholders see presentations instead of working software. Everyone feels busy but nothing ships.

Metrics mislead when they measure process over outcomes. High maturity scores mask low customer adoption. Compliance checkmarks hide competitive disadvantages. You optimize what you measure, and traditional models measure the wrong things.

Capital flows to compliance infrastructure instead of customer value. Budget goes to governance tools rather than product features. Resources support frameworks rather than revenue. Money that should accelerate growth instead maintains process.

The AI velocity playbook for growth

Forget sequential frameworks. You need a system that prizes shipping speed, customer feedback, and business impact above process perfection.

Start by tracking what matters. Every Friday, measure three numbers that actually predict success. How long do features take from idea to production? How many experiments did you complete? What incremental revenue did AI create? Short cycles, high experiment counts, and rising revenue signal real momentum.
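Those three Friday numbers are simple enough to compute from a basic experiment log. The sketch below assumes each experiment records an idea date, a production ship date, a completion date, and the incremental revenue attributed to it during the reporting week. The `Experiment` record, its fields, and the sample entries are hypothetical, and how revenue gets attributed is left to whatever method the team already trusts.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Optional


@dataclass(frozen=True)
class Experiment:
    name: str
    idea_date: date
    ship_date: Optional[date] = None       # when it reached production, if ever
    completed_date: Optional[date] = None  # when the experiment concluded, win or lose
    revenue_this_week: float = 0.0         # attributed incremental revenue (method assumed)


def in_week(d: Optional[date], start: date, end: date) -> bool:
    return d is not None and start <= d <= end


def friday_report(exps: list, start: date, end: date) -> dict:
    """The three weekly numbers: cycle time, experiment count, AI revenue."""
    cycle_days = [(e.ship_date - e.idea_date).days
                  for e in exps if in_week(e.ship_date, start, end)]
    return {
        # Median days from idea to production for features shipped this week.
        "median_idea_to_prod_days": median(cycle_days) if cycle_days else float("nan"),
        # Experiments that concluded this week, regardless of outcome.
        "experiments_completed": sum(in_week(e.completed_date, start, end) for e in exps),
        # Incremental revenue attributed this week to anything already live.
        "ai_revenue": sum(e.revenue_this_week for e in exps
                          if e.ship_date is not None and e.ship_date <= end),
    }


# Made-up log for one week, for illustration only.
week_start, week_end = date(2024, 6, 3), date(2024, 6, 7)
log = [
    Experiment("smart-triage", date(2024, 5, 20), ship_date=date(2024, 6, 4),
               completed_date=date(2024, 6, 6), revenue_this_week=1200.0),
    Experiment("draft-replies", date(2024, 5, 27), ship_date=date(2024, 6, 7),
               revenue_this_week=300.0),
    Experiment("auto-tagging", date(2024, 5, 1), completed_date=date(2024, 6, 5)),
]
print(friday_report(log, week_start, week_end))
# {'median_idea_to_prod_days': 13.0, 'experiments_completed': 2, 'ai_revenue': 1500.0}
```

Using the median rather than the mean keeps one long-stalled feature from hiding an otherwise fast cycle. Tracking the trend week over week matters more than any single reading.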

Pick one workflow and transform it completely before touching anything else. Companies implementing focused AI improvements typically see 15% to 25% revenue gains. Don't spread effort across multiple initiatives. Depth beats breadth when building AI capabilities.

Run tight learn-build-measure loops with real customers. Ship to small user groups, gather feedback immediately, then iterate. Develop features and governance in parallel rather than sequentially. Measure business impact weekly, not quarterly. Kill failing experiments fast and double down on winners.
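"Kill failing experiments fast" needs a rule that is decided before the data comes in. Here is one minimal sketch, assuming each experiment is an A/B test on a binary conversion metric with a sample size fixed in advance. The pooled two-proportion z-test is a standard technique, but the 1,000-user minimum, the 1.96 cutoff (the usual two-sided 5% threshold), and the names `ArmStats` and `decide` are illustrative choices, not the article's method.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ArmStats:
    users: int
    conversions: int


def two_proportion_z(control: ArmStats, treatment: ArmStats) -> float:
    """Pooled two-proportion z-score for (treatment rate - control rate)."""
    p_control = control.conversions / control.users
    p_treatment = treatment.conversions / treatment.users
    pooled = (control.conversions + treatment.conversions) / (control.users + treatment.users)
    se = math.sqrt(pooled * (1 - pooled) * (1 / control.users + 1 / treatment.users))
    return (p_treatment - p_control) / se if se > 0 else 0.0


def decide(control: ArmStats, treatment: ArmStats,
           min_users: int = 1000, z_crit: float = 1.96) -> str:
    """Return 'keep_running', 'double_down', or 'kill' for one experiment."""
    if min(control.users, treatment.users) < min_users:
        return "keep_running"  # planned sample size not reached yet
    z = two_proportion_z(control, treatment)
    # At the planned sample size, it either clearly beat control or it dies.
    return "double_down" if z >= z_crit else "kill"


print(decide(ArmStats(2000, 100), ArmStats(2000, 140)))  # double_down
print(decide(ArmStats(2000, 100), ArmStats(2000, 105)))  # kill
print(decide(ArmStats(500, 25), ArmStats(500, 40)))      # keep_running
```

Evaluate the decision once, at the planned sample size. Re-running the check every day and stopping on the first apparent win inflates false positives, which defeats the point of measuring.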

This velocity-first approach powers the fastest-growing companies. They ship features in days that save customers hours weekly. They respond to market changes in sprints, not years. They build competitive advantages through speed, not scorecards.

Choose velocity over maturity

The companies winning with AI aren't the most mature. They're the fastest. While enterprises climb methodical maturity ladders, unicorns sprint to market leadership.

Your choice is simple. Spend months on assessments that impress consultants, or spend weeks shipping features that delight customers. Chase high maturity scores that mean nothing to users, or chase revenue growth that means everything to investors.

The path forward starts today. Pick your highest-impact workflow. Set a 30-day ship target. Measure results, not process. Let competitors worry about maturity models while you worry about market share.

Speed beats perfection in AI adoption. Just ask teams using tools like Superhuman, where AI features like Auto Summarize condense lengthy email threads instantly. These teams save 4 hours weekly because they chose tools that ship fast and deliver value, not ones with perfect maturity scores. Your customers care about results, not frameworks. Give them what they want.