Nearly 87% of professionals believe AI is necessary for competitive advantage. Yet most chase fancy algorithms while their pilots fail. The winners do the opposite. They pick one business metric, prove value in 30 days, then scale.

This playbook shows you their exact approach. You'll get a five-question readiness test, a proven 30-day pilot framework, and KPI templates that track real revenue impact. No six-month roadmaps. No transformation theater. Just results.

With 66% of professionals expecting at least a 3x productivity increase from AI within five years, the companies turning everyday data into competitive advantages will pull ahead. The rest will still be arguing about algorithms.

Why "AI-first" fails and what really drives scale

Here's what happens when you start with models before mission. Your AI budget vanishes. Teams build impressive demos. Six months later, nobody can explain how it makes money.

This pattern is predictable because boards read about AI valuations and panic. Teams scramble to ship something that looks like AI, but nobody attached a business problem or KPI to these pilots. Without clear goals, experiments fragment. Teams duplicate work. Data silos multiply. The whole initiative collapses under its own complexity.

The companies pulling ahead do the opposite. Industry-leading companies are 3x more likely to have experienced significant productivity gains from AI because they build on three pillars. First, organizational readiness through executive sponsorship, cross-functional teams, and change management that addresses job security fears. Second, data maturity with clean, accessible, well-governed data that beats fancy algorithms every time. Third, incremental pilots using short loops that prove one metric before writing another line of code.

These pillars guide where you point AI first. Find problems with clear, measurable payoffs, such as predictive inventory that prevents stockouts, automated support that cuts response times, dynamic pricing that improves margins, or talent acquisition that reduces time-to-hire. Pick one idea, tie it to one business objective, estimate the upside, and run a four-week pilot. If the metric improves, scale. If not, pivot or kill it.

The 5-minute AI readiness litmus test

Save yourself days of debate. Answer these five yes-or-no questions honestly, and you'll know whether to launch a pilot or fix your foundations first.

Start by checking your digital maturity. Are core systems cloud-based, API-friendly, and regularly audited for data quality? Without this foundation, you can't evaluate integration capabilities, which is your next checkpoint. Can new AI apps plug into your tech stack without months of custom plumbing? If not, even minor data fixes get stuck behind engineering backlogs, which kills momentum before you start.

Once you confirm technical readiness, examine business impact potential. Will your first use case lift revenue or cut costs this quarter? This matters because executive attention only goes to projects that move the needle. Then review your compliance and ethics framework. Do you have written guidelines for privacy, bias monitoring, and audit trails? Without these, one regulatory surprise can end your entire program. Finally, look for a quick win. Can a simple model using existing data prove value in 30 days?

Your score determines your path forward. Four or five "yes" answers mean you're ready to pilot. Three or fewer means shore up the gaps first. This threshold matters because missing capabilities in process or people derail programs far more often than faulty algorithms. Print this checklist, run through it with your executive team this week, and either book your 30-day pilot kickoff or assign owners to close gaps and retest next month.
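If you want to score the test in a shared notebook rather than on paper, here is a minimal Python sketch of the rule above. The question keys and the function name `readiness_verdict` are illustrative labels, not part of any standard tool. The only logic taken from the text is the threshold: four or five "yes" answers mean pilot, three or fewer mean fix the gaps.

```python
# Illustrative keys for the five yes/no questions described in the text.
QUESTIONS = (
    "digital_maturity",
    "integration_capability",
    "business_impact",
    "compliance_and_ethics",
    "quick_win",
)


def readiness_verdict(answers: dict[str, bool]) -> tuple[int, str, list[str]]:
    """Return (score, verdict, gaps) for the five yes/no answers."""
    missing = [q for q in QUESTIONS if q not in answers]
    if missing:
        raise ValueError(f"unanswered questions: {missing}")
    # Every "no" is a gap that needs an owner before retesting.
    gaps = [q for q in QUESTIONS if not answers[q]]
    score = len(QUESTIONS) - len(gaps)
    # Threshold from the text: 4 or 5 yes answers means ready to pilot.
    verdict = "pilot" if score >= 4 else "fix_foundations"
    return score, verdict, gaps


if __name__ == "__main__":
    answers = {
        "digital_maturity": True,
        "integration_capability": True,
        "business_impact": True,
        "compliance_and_ethics": False,
        "quick_win": True,
    }
    print(readiness_verdict(answers))
```

The returned `gaps` list doubles as the owner-assignment list for the retest next month.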

The 30-day pilot loop: from idea to proven KPI

Forget six-month AI roadmaps. You can prove value or failure in four weeks, but only if you follow this exact sequence.

Week 1: Pick one metric. Everything hinges on choosing the single outcome that signals success. Hours saved per support rep. Revenue per sales call. Error rate in invoice processing. Document today's baseline and your target lift, because without this clarity, scope creep will kill your pilot before week two.

Week 2: Build the MVP. With your metric locked, assemble a lean strike team to create a proof-of-concept using data you already have. Keep dependencies minimal with just a lightweight model, secure access to relevant data, and a sandbox environment cleared by compliance. This constraint forces focus and prevents the feature bloat that dooms most pilots.

Week 3: Shadow-launch. Your simple MVP now meets reality. Release to a small group of select customers or an internal team. Collect quantitative results and qualitative feedback while tracking usage friction, data issues, and edge cases. This controlled exposure reveals problems without risking revenue operations.

Week 4: Scale, iterate, or stop. The data from week three drives a simple decision. Did the metric improve 20% or more while the system remained stable? Scale it. Did the metric improve but reliability or adoption issues persist? Run another iteration. Is the metric flat or negative? Kill the project and move to the next idea.
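The week-four decision can be written down as a small function so nobody relitigates the rule after the results arrive. The Python sketch below is one way to do it. The name `week4_decision` and the `higher_is_better` flag are assumptions added for illustration, and so is the handling of one case the text leaves open: an improvement under 20% on a stable system is treated here as "iterate".

```python
def week4_decision(
    baseline: float,
    observed: float,
    stable: bool,
    higher_is_better: bool = True,
    threshold: float = 0.20,
) -> str:
    """Return "scale", "iterate", or "stop" for a finished pilot.

    `stable` should be False if reliability or adoption issues persist.
    """
    if baseline == 0:
        raise ValueError("baseline must be non-zero to compute a relative lift")
    change = (observed - baseline) / abs(baseline)
    # For metrics like error rate, a drop counts as an improvement.
    lift = change if higher_is_better else -change
    if lift <= 0:
        return "stop"       # flat or negative: kill the project
    if lift >= threshold and stable:
        return "scale"      # 20% or more and stable
    # Improved, but unstable. Assumption: a stable gain below the
    # threshold also lands here, since the text does not cover that case.
    return "iterate"


if __name__ == "__main__":
    # Revenue per sales call rose from 100 to 125 and the system held up.
    print(week4_decision(baseline=100, observed=125, stable=True))
    # Invoice error rate fell from 10% to 7%; lower is better.
    print(week4_decision(0.10, 0.07, stable=True, higher_is_better=False))
```

Agree on `threshold` and the direction of the metric in week one, alongside the baseline, so the function's inputs are fixed before any data comes in.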

Three predictable pitfalls can derail this process. Scope creep destroys focus, so lock your feature set after week one. Data privacy gaps create legal exposure, so run privacy impact assessments before week two ends. Insufficient testing breaks production systems, so dedicate 30% of week three to edge-case validation. Share pilot outcomes within 48 hours of completion using a concise format that highlights the metric change, what worked, what failed, and recommended next steps. This transparency builds trust for your next pilot.

Architecting for scale: strike team vs. center of excellence

Startup speed demands an AI staffing model that matches your product velocity. You've got three options, but only one delivers results fast enough for hypergrowth.

Building everything in-house seems logical. You get control and deep company knowledge while protecting intellectual property and keeping models aligned with strategy. But this approach fails at speed. Tech giants compete fiercely for AI talent, posting thousands of roles annually, which means you'll wait months for hires while competitors ship products.

External partnerships solve the speed problem by placing qualified talent in weeks and providing immediate access to niche skills. Yet this creates a different trap. Long-term reliance means losing critical knowledge, and cultural friction emerges when sensitive data or core algorithms leave the building.

The Strike Team model delivers both speed and control. Keep a lean, cross-functional pod of four to six people in-house. Start with a product lead who owns business impact and reports to the COO or CTO. Add two or three ML engineers focused on rapid prototyping, a data engineer responsible for pipelines and quality, and a domain expert from the revenue-owning function. This unit operates in one-month sprints, shipping to production without handoffs and tapping external specialists only for short bursts.

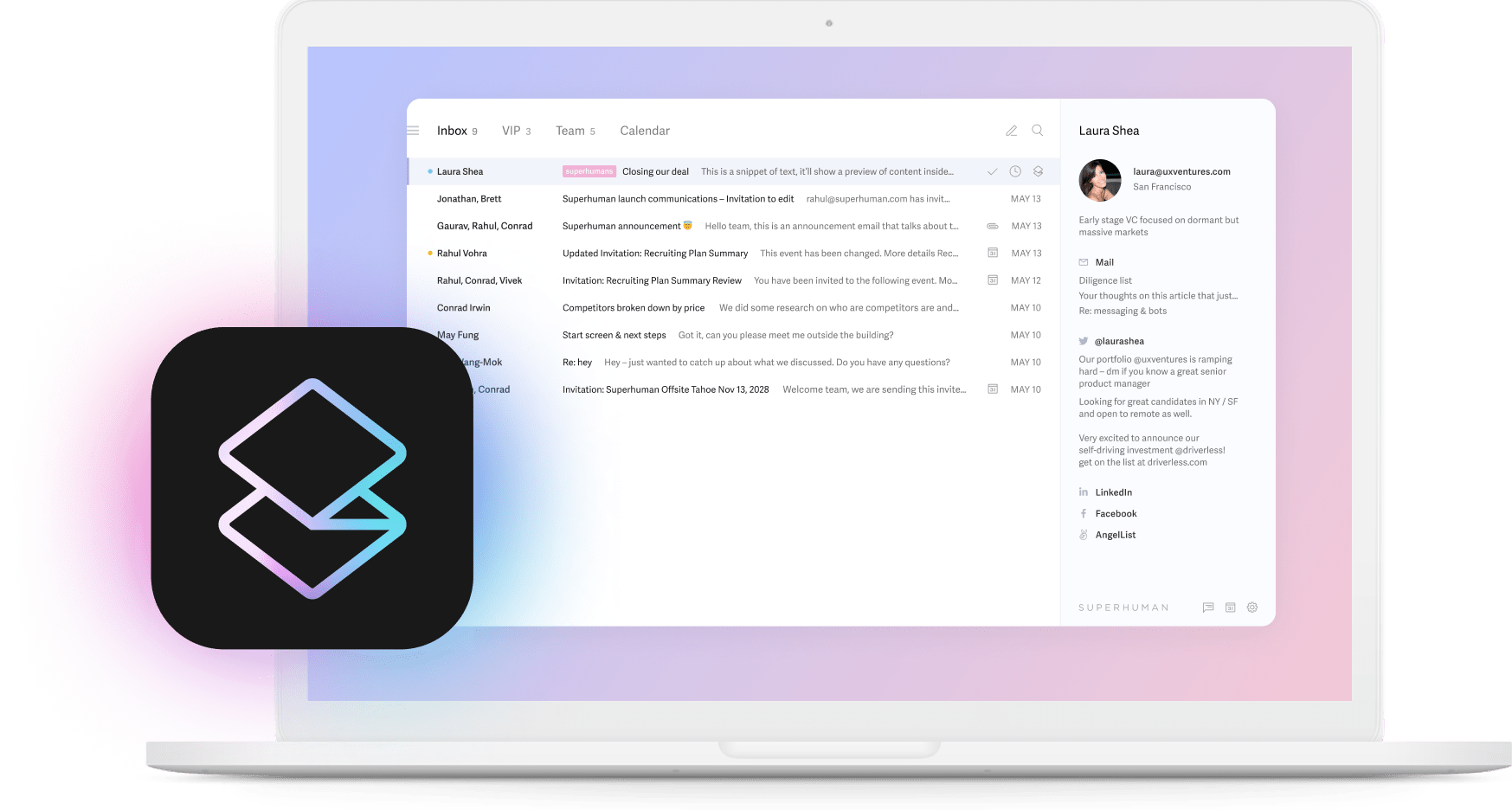

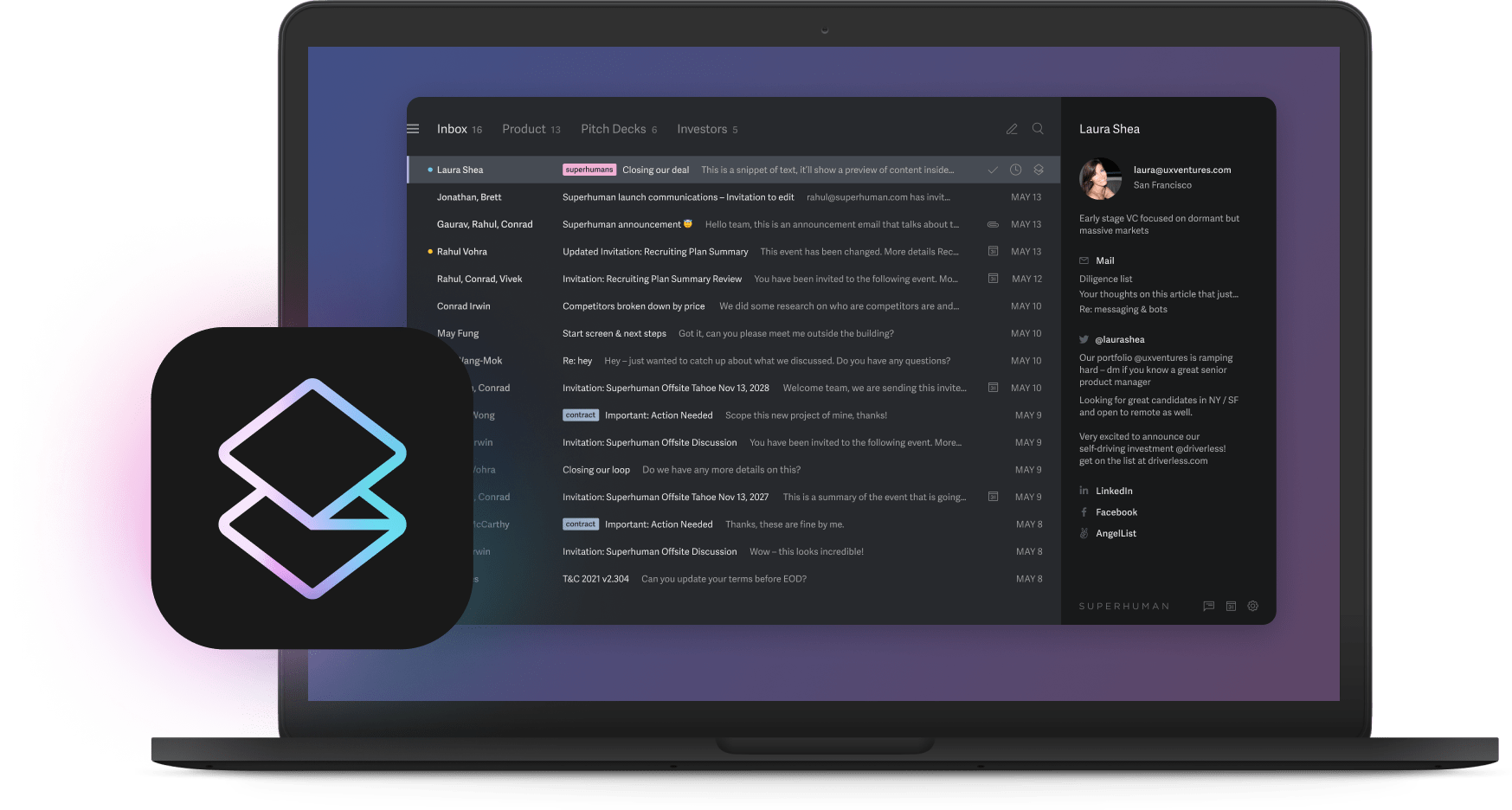

Three metrics show whether the model is working. Time to first model in production should drop to under 30 days. Iteration cycles should shrink from weeks to hours. Revenue or cost impact per team member becomes your north star. The broader efficiency gains from AI are significant, with professionals at leading companies sending and receiving 72% more emails per hour when using AI-native tools.

The decision about what stays internal becomes simple. Does the project touch strategic data, differentiate your product, or require constant iteration? Keep it with the Strike Team. Is the work specialized, temporary, or requiring rare skills? Hire externally for that sprint only.

Data, not models: turning exhaust data into a 10× advantage

Own the data, win the game. While algorithms get updated weekly by thousands of researchers, the unique traces your company generates every day can lock out competitors for years.

Most companies miss this because they obsess over the latest model architecture while letting digital exhaust rot in log files. Think about what you're ignoring. Support tickets reveal customer pain patterns. Clickstreams show user intent. Supply chain scans predict bottlenecks. Sensor readings prevent failures. This goldmine compounds daily, yet teams chase algorithms anyone can copy.

Monetizing this exhaust requires a three-step foundation. First, inventory every system producing data and tag each source with volume, refresh rate, and sensitivity level. You'll discover surprising assets like call center recordings, draft documents, and trial signup patterns that competitors can't access. Next, clean and label what matters by defining the fields you need, adding plain-language labels, and removing noise. Set up automated checks and nightly deduplication to prevent the integration headaches that derail over half of AI rollouts. Finally, govern with lightweight controls through role-based access, documented data lineage, and periodic audits. Modern tools embed these practices, making compliance nearly invisible.
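To make the first two steps concrete, here is a minimal Python sketch of a data inventory and a nightly deduplication pass. Everything named here is hypothetical: the `DataSource` fields mirror the three tags from the text (volume, refresh rate, sensitivity), the sample sources and numbers are made up, and `deduplicate` assumes each record carries a key and a sortable "last updated" field. A real pipeline would run this inside your warehouse or ETL tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataSource:
    name: str
    daily_volume: int      # records produced per day
    refresh_minutes: int   # how often new data lands
    sensitivity: str       # e.g. "public", "internal", "restricted"


def deduplicate(records: list[dict], key: str, updated_field: str) -> list[dict]:
    """Keep only the most recently updated record for each key value."""
    latest: dict = {}
    for rec in records:
        k = rec[key]
        if k not in latest or rec[updated_field] > latest[k][updated_field]:
            latest[k] = rec
    return list(latest.values())


if __name__ == "__main__":
    # Made-up inventory entries for illustration only.
    inventory = [
        DataSource("support_tickets", 4_000, 15, "internal"),
        DataSource("clickstream", 2_500_000, 1, "internal"),
        DataSource("call_recordings", 900, 60, "restricted"),
    ]
    # Review the largest sources first.
    for src in sorted(inventory, key=lambda s: s.daily_volume, reverse=True):
        print(src.name, src.daily_volume, src.sensitivity)

    tickets = [
        {"ticket_id": 1, "updated": "2024-01-01", "status": "open"},
        {"ticket_id": 1, "updated": "2024-01-03", "status": "closed"},
        {"ticket_id": 2, "updated": "2024-01-02", "status": "open"},
    ]
    print(deduplicate(tickets, key="ticket_id", updated_field="updated"))
```

Keeping the inventory as plain records makes it easy to export to a spreadsheet for the governance review in step three.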

With solid foundations, exhaust data transforms into competitive moats. Retail teams feed purchase signals into pricing models that adjust faster than competitors can react. SaaS companies mine click paths to predict and prevent churn before customers even consider leaving. Fashion brands read store sales and social buzz to design inventory with minimal guesswork and maximum margin.

Fast-growing companies face unique challenges with messy integrations, shifting schemas, and duplicate fields everywhere. The solution is to prioritize cleanup by business impact. Revenue-critical tables come first; vanity metrics never get touched. Keep a running "data debt" list and pay it down sprint by sprint. Build privacy habits from day one, like defaulting to BCC when sharing interim results. These practices keep sensitive data secure while you turn everyday operations into an asset competitors can't replicate.

Metrics that matter: proving ROI to the board

The board wants to know one thing. Does AI make or save money? Everything else is commentary, so build your story on four pillars that answer this question definitively.

Start with revenue uplift because nothing speaks louder than growth. Track how analytics improve sales tactics, personalization drives larger purchases, and dynamic recommendations increase conversion rates. Clear attribution models help isolate AI's contribution from general business growth, giving you credible numbers that CFOs trust.

Cost avoidance provides the other half of the financial equation. Reduced labor costs, fewer errors, and streamlined workflows translate directly to bottom-line savings that accumulate quarter over quarter. Add risk reduction metrics showing how AI prevents manual mistakes and regulatory breaches, protecting against penalties that could wipe out years of gains. Complete the picture with productivity gains that count time savings and output increases, demonstrating how teams accomplish more meaningful work when AI eliminates redundant tasks.

These metrics only matter if they connect to ongoing business outcomes. Link every metric to KPI monitoring that executives already track. Establish baselines before deploying anything so you can show clear before-and-after comparisons. Build an AI ROI dashboard that fits on one page and updates monthly, giving executives the at-a-glance view they need for board meetings.
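The arithmetic behind a one-page dashboard is small enough to sketch. The Python below assumes the conventional ROI definition, net benefit divided by cost, and a plain before-and-after comparison against the baseline. The function names and sample figures are illustrative, and a real dashboard would also need the attribution step to separate AI's contribution from general growth.

```python
def pct_change(baseline: float, current: float) -> float:
    """Relative change of a KPI against its pre-deployment baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline


def simple_roi(revenue_uplift: float, cost_avoided: float, ai_cost: float) -> float:
    """Conventional ROI: (total benefit - cost) / cost, for one period."""
    if ai_cost <= 0:
        raise ValueError("ai_cost must be positive")
    return (revenue_uplift + cost_avoided - ai_cost) / ai_cost


if __name__ == "__main__":
    # Made-up monthly figures for illustration only.
    lift = pct_change(baseline=200.0, current=250.0)
    roi = simple_roi(revenue_uplift=30_000, cost_avoided=20_000, ai_cost=25_000)
    print(f"KPI lift vs. baseline: {lift:.0%}")
    print(f"Monthly ROI: {roi:.0%}")
```

Risk reduction and productivity gains can feed the same formula once finance agrees on how to express them in currency terms.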

Tailor your presentation to your audience because each stakeholder cares about different impacts. CFOs want the financial metrics. Department heads need operational efficiency proof. CEOs care about strategic advantages over competitors. Include intangible benefits like improved customer satisfaction and faster innovation cycles, but lead with the hard numbers. This complete picture of AI business transformation value turns skeptics into champions.

Pitfalls and troubleshooting guide

Even well-funded rollouts hit the same walls. Recognize them early and you'll maintain momentum while others stall.

Tech debt balloons when teams rush features without documentation, leaving you untangling fragile code six months later instead of shipping new capabilities. This happens because pressure for quick wins overrides engineering discipline. Institute a "two-sprint freeze" that forces infrastructure hardening and documentation before any scaling decision, preventing the buildup that eventually grinds progress to a halt.

Model drift follows a predictable pattern. Data patterns change, predictions degrade, executives wonder where the ROI went, and trust erodes. The solution is wiring drift alerts into your deployment pipeline from day one and scheduling monthly "model health" reviews. This early warning system catches problems before they impact business metrics.
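The text does not prescribe a particular drift check, so the Python sketch below uses one common option, the Population Stability Index (PSI), as a stand-in. It compares the distribution of a feature or model score at training time against what the model sees today. The 0.2 alert threshold is a frequently quoted rule of thumb rather than a standard, and the function names are illustrative. Tune both to your own data.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index between a reference and a current sample."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    if lo == hi:
        raise ValueError("reference sample has no spread")
    width = (hi - lo) / bins

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # Floor each share so empty bins do not cause log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_alert(expected: list[float], actual: list[float],
                threshold: float = 0.2) -> bool:
    """True when the shift is large enough to warrant a model health review."""
    return psi(expected, actual) > threshold


if __name__ == "__main__":
    training_scores = [i / 100 for i in range(100)]       # reference window
    todays_scores = [0.5 + i / 200 for i in range(100)]   # shifted upward
    print(drift_alert(training_scores, training_scores))
    print(drift_alert(training_scores, todays_scores))
```

Running a check like this on a schedule and posting the result to the team channel is one lightweight way to feed the monthly review.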

Regulatory blind spots create the most dramatic failures because one privacy violation can tank your entire program overnight. Bake compliance into your development process with automated policy checks that run in parallel, not as afterthoughts. Companies using continuous compliance tools push models live 30% faster while reducing risk.

Talent churn hits AI teams hardest because specialists have endless options. That is especially true of top performers, who save 14% more time per week than lower performers. Combat this by publishing transparent growth ladders and pairing every hire with a senior mentor who can accelerate their development.

Vanity metrics waste time and erode credibility. Model accuracy sounds impressive in presentations but means nothing if revenue stays flat. Anchor every sprint to business KPIs and review progress weekly with finance to maintain focus on outcomes that matter.

Prevention beats cure, so run a 30-minute pre-mortem before each pilot asking what could kill this project six months from now. Turn the top three risks into backlog items you address immediately. When problems do surface, use a simple decision tree. Is it purely technical? Allocate a fix window. Does it impact customers or compliance? Trigger your crisis plan immediately. Does the KPI still beat baseline? Continue while fixing. Document every incident in a learning log that turns failures into competitive advantages for your next project.
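The decision tree above can be pinned down in a few lines so incident response does not depend on who is on call. This Python sketch makes two assumptions the text does not spell out: customer or compliance impact is checked first because it is the most severe case, and a KPI that has fallen to or below baseline triggers an "escalate_for_review" step. The action names are illustrative.

```python
def triage(
    impacts_customers_or_compliance: bool,
    purely_technical: bool,
    kpi_beats_baseline: bool,
) -> list[str]:
    """Map the three triage questions to an ordered list of actions."""
    actions: list[str] = []
    if impacts_customers_or_compliance:
        # Assumption: the most severe case is checked first.
        actions.append("trigger_crisis_plan")
    elif purely_technical:
        actions.append("allocate_fix_window")
    if kpi_beats_baseline:
        actions.append("continue_while_fixing")
    else:
        # Assumption: the text does not say what to do here.
        actions.append("escalate_for_review")
    return actions


if __name__ == "__main__":
    # A flaky batch job, no customer impact, KPI still ahead of baseline.
    print(triage(False, True, True))
    # A privacy incident while the KPI has slipped back to baseline.
    print(triage(True, False, False))
```

Logging the inputs and the returned actions for each incident gives you the raw material for the learning log.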

Conclusion

The companies dominating the next decade won't have the fanciest algorithms. They'll master turning AI potential into measurable business value.

Start with the readiness assessment. If you score high, launch your first 30-day pilot this month. If not, fix the gaps and retest in 30 days.

Speed beats perfection in AI rollouts. Your competitors are already experimenting. The question isn't whether AI will reshape your industry. The question is whether you'll lead that transformation or watch from the sidelines.