Your AI implementation roadmap is already wrong. Right now, today, as you read this. State-of-the-art benchmarks flip every three months. Model costs drop dramatically every year. Open-source releases now match or beat the expensive tools you budgeted for.

That careful 12-month plan you presented last quarter? The models it references are already outdated. The pricing assumptions are off by multiples. The competitive landscape looks nothing like your neat PowerPoint slides.

The myth of the 12-month AI roadmap

Every enterprise wants a 12-month AI roadmap, and it makes sense. You need structure, investor confidence, budget approvals, and hiring targets. Plus, 87% believe AI is necessary to maintain a competitive advantage. The problem is AI doesn't care about your fiscal year.

Traditional software development taught us that planning works. Requirements stayed stable for months. Technologies evolved slowly. A year-long plan made sense because the ground beneath your feet stayed still. But AI broke that contract. What counts as cutting-edge today becomes legacy code by next quarter. Frameworks disappear overnight while new ones emerge before you finish evaluating the old ones. Your carefully researched vendor comparisons become historical curiosities before implementation even begins.

This mismatch hits venture-backed companies hardest. Series A through C firms live and die by quarterly metrics, yet they're trapped in annual planning cycles designed for a slower world. Investors demand progress updates every three months while roadmaps assume nothing changes for twelve. The result is predictable: competitors who abandoned the annual planning theater grab market share while you're still waiting for committee approval. In AI, hesitation costs more than any model license ever could.

Why AI plans age in dog years

Your static roadmap ages like a dog. One real year equals seven roadmap years. Four forces drive this accelerated aging, and understanding them helps you see why traditional planning fails.

First, models improve faster than you can update PowerPoints. Those benchmarks you researched last quarter? They're history. The model you picked after weeks of evaluation just got beaten by something released yesterday. Your technical specifications become fiction before implementation starts.

Second, prices fall through the floor while you're still negotiating contracts. Training costs dropped dramatically over four years. The build-versus-buy analysis you did six months ago assumes prices ten times higher than today's reality. Budget meetings become comedy shows where everyone pretends the numbers make sense.

Third, open source eats everything. Remember when you needed enterprise licenses for decent AI? Now developers download better models for free. Your procurement cycle takes longer than the entire lifecycle of the tools you're trying to buy. GitHub releases don't wait for purchase orders.

Fourth, regulations shift weekly. You hold back features waiting for compliance clarity that never arrives. Meanwhile, competitors ship iterative solutions and adjust as rules evolve. Perfect compliance guidance is like perfect code. Both are myths that stop you from shipping.

Four red flags your roadmap is already outdated

Want to know if your roadmap needs emergency surgery? Check for these symptoms that compound each other into strategic paralysis.

Your model assumptions froze in time. Does your plan still mention GPT-3.5 as the gold standard? Do your comparisons predate Claude 3.5 or GPT-4o? You're planning yesterday's solutions for tomorrow's problems. Modern teams review model options every ninety days using fresh benchmarks because what seemed impossible last quarter is now table stakes.

Those frozen assumptions lead directly to budget disasters. Line items showing 2023 costs mean you're planning to waste money on a massive scale. AI pricing drops monthly, turning your carefully negotiated enterprise deals into expensive mistakes. That expensive API you budgeted for might cost one-tenth that amount today. When you refresh your economics quarterly, you discover your entire financial model needs rebuilding.

The financial mess reveals a deeper problem in your hiring plans. Still looking for just ML engineers? Your job posts read like 2022 because you're solving 2022 problems. Modern AI teams need prompt engineers, AI product managers, and people who understand these tools at a practical level. Yesterday's job descriptions attract yesterday's talent, leaving you with a team equipped for the wrong battle.

All these issues culminate in the final red flag: waiting for perfect regulations. Holding features until the EU AI Act gets carved in stone means watching competitors lap you while you study compliance documents. Smart teams ship with reasonable compliance and adjust weekly. They understand that perfection paralysis kills more AI projects than bad models do. By the time regulations finalize, the entire landscape will have shifted again.

An adaptive AI delivery model

Forget the annual roadmap. You need a system that learns as fast as the technology changes. This works best when you combine short AI sprints, a reusable capability platform, and a portfolio approach that keeps your options open.

AI sprints

Run four- to six-week cycles. Pick one capability, build it, ship it, and learn from real users. Then do it again. Each sprint starts with a hypothesis and ends with data. Forget multi-quarter planning sessions. Skip the endless strategy documents.

Teams using this approach move faster than those stuck in annual planning cycles. Why? Because they test assumptions weekly instead of discovering they were wrong after six months. Real feedback beats theoretical planning every time.

Capability platform

Don't rebuild everything when better models appear. Create a platform where models plug in like Lego blocks. Strong data pipelines feed any model. Flexible tooling works with whatever AI comes next. Testing frameworks let you swap models without breaking production.

Think of models as replaceable parts, not foundations. When next month's breakthrough arrives, you switch it in. No architectural overhauls. No starting from scratch. Just better results with less work.
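
What might "replaceable parts" look like in code? Here is a minimal Python sketch, not a reference design. Every name in it (`TextModel`, `ModelRegistry`, `EchoModel`, `UppercaseModel`) is hypothetical, and the two toy models stand in for whatever hosted API or open-source model you actually run. The idea is that product code talks to one interface, and a new model only becomes active after it passes the same smoke checks as the old one.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Protocol, Tuple


class TextModel(Protocol):
    """Anything that turns a prompt into text can plug into the platform."""

    def generate(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Toy stand-in for a hosted API model (hypothetical, makes no network call)."""

    prefix: str

    def generate(self, prompt: str) -> str:
        return f"{self.prefix}: {prompt}"


@dataclass
class UppercaseModel:
    """Toy stand-in for a newer open-source model (hypothetical)."""

    def generate(self, prompt: str) -> str:
        return prompt.upper()


# A check is a prompt plus a predicate that the model's output must satisfy.
Check = Tuple[str, Callable[[str], bool]]


class ModelRegistry:
    """Holds interchangeable models; callers never name a vendor directly."""

    def __init__(self) -> None:
        self._models: Dict[str, TextModel] = {}
        self._active: Optional[str] = None

    def register(self, name: str, model: TextModel) -> None:
        self._models[name] = model

    def activate(self, name: str, checks: List[Check]) -> bool:
        """Switch to `name` only if it passes every smoke check."""
        model = self._models[name]
        if all(ok(model.generate(prompt)) for prompt, ok in checks):
            self._active = name
            return True
        return False  # the previously active model stays in place

    def generate(self, prompt: str) -> str:
        if self._active is None:
            raise RuntimeError("no active model")
        return self._models[self._active].generate(prompt)


registry = ModelRegistry()
registry.register("hosted-v1", EchoModel(prefix="v1"))
registry.register("open-v2", UppercaseModel())

# One illustrative check: the output must still contain the input text.
checks: List[Check] = [("hello", lambda out: "hello" in out.lower())]

registry.activate("hosted-v1", checks)
before = registry.generate("hi")

registry.activate("open-v2", checks)  # swap models; calling code is unchanged
after = registry.generate("hi")

# A candidate that fails its checks is rejected and the active model is kept.
rejected = registry.activate("hosted-v1", [("hello", lambda out: False)])
still_active = registry.generate("hi")
```

In a real platform the checks would be your own evaluation suite rather than a single string match, but the shape stays the same: register, verify, switch.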

Portfolio approach

Spread your bets like a smart investor. Put 70% into proven techniques that improve metrics today. Allocate 20% to medium-term upgrades with clear potential. Reserve 10% for moonshots that probably won't work but could change everything if they do.

This mix handles market shocks gracefully. Costs collapse? Your experiments become production features. New model destroys your assumptions? Your moonshot budget already explored it. Treat each quarter as a rebalancing opportunity.
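
To make the quarterly rebalancing concrete, here is a small Python sketch of the 70/20/10 arithmetic. The bucket names, the example budget, and the `allocate` and `rebalance` helpers are illustrative assumptions, not figures or tooling from any real team.

```python
from typing import Dict

# Target mix from the text: 70% proven, 20% medium-term, 10% moonshots.
TARGET_MIX: Dict[str, float] = {
    "proven": 0.70,
    "medium_term": 0.20,
    "moonshot": 0.10,
}


def allocate(budget: float, mix: Dict[str, float] = TARGET_MIX) -> Dict[str, float]:
    """Split a budget across buckets according to the target mix."""
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("mix shares must sum to 1")
    return {bucket: round(budget * share, 2) for bucket, share in mix.items()}


def rebalance(
    current_spend: Dict[str, float], mix: Dict[str, float] = TARGET_MIX
) -> Dict[str, float]:
    """Return how much to move into (+) or out of (-) each bucket."""
    total = sum(current_spend.values())
    target = allocate(total, mix)
    return {
        bucket: round(target[bucket] - current_spend.get(bucket, 0.0), 2)
        for bucket in mix
    }


# Hypothetical annual AI budget of 1,000,000 in any currency.
plan = allocate(1_000_000)

# Hypothetical drift after a quarter: experiments grew, proven work shrank.
drifted = {"proven": 600_000, "medium_term": 250_000, "moonshot": 150_000}
moves = rebalance(drifted)
```

With these example numbers, `moves` says to shift 100,000 back into proven work and pull 50,000 from each of the other two buckets. If an experiment graduates to production instead, you would relabel its spend as proven before rebalancing.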

How high-growth teams put adaptive AI into practice

Real companies already ditched their roadmaps. Their results tell the story better than any theory could.

A Series B fintech tore up their 2024 roadmap halfway through the year. They swapped expensive API calls for open-source models. Inference costs dropped 40%. Feature velocity jumped 30%. But the real win came from their new planning cycle. They forecast monthly instead of annually.

"We used to plan AI features a year out," their CTO explained. "Now we ship, measure, and pivot within weeks. Open source cut our time-to-production from months to weeks."

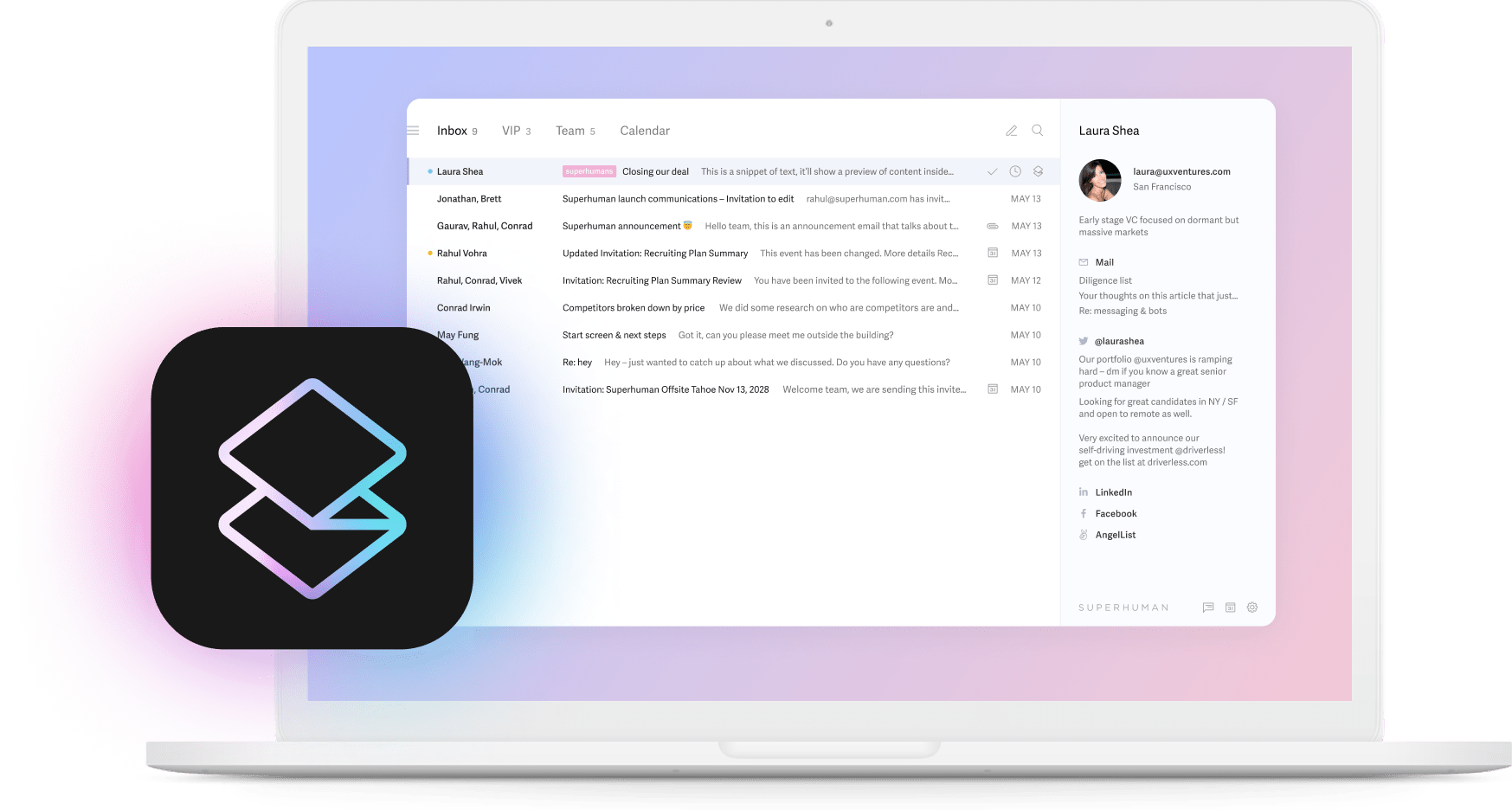

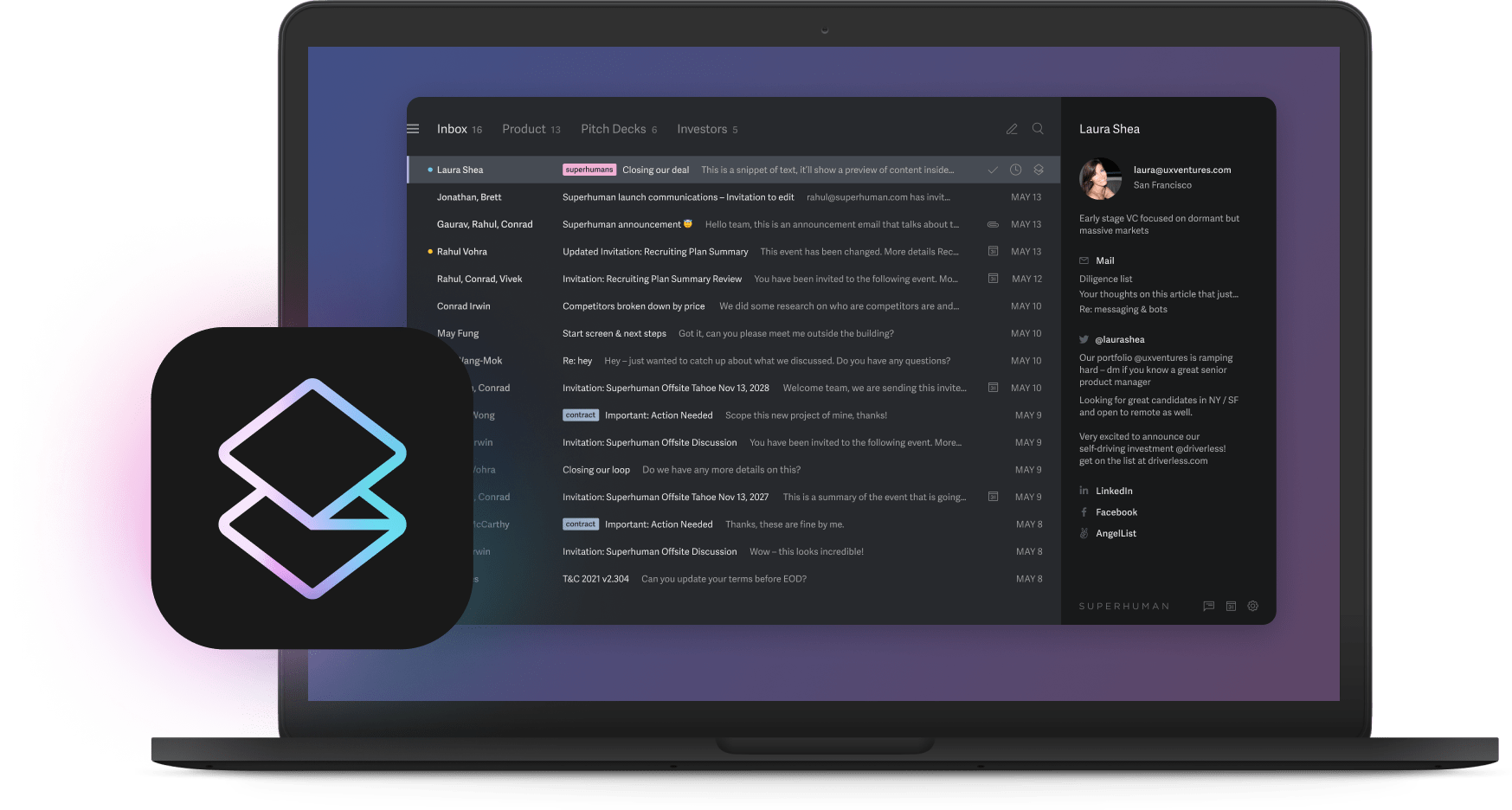

A productivity SaaS company went even further. They adopted weekly AI sprints. Eight weeks later, they'd shipped instant reply suggestions and auto-summarization. NPS scores climbed 12 points. Customers saved 4 hours weekly.

"Speed became our roadmap," their product lead said. "We stopped planning features and started shipping experiments. Every week taught us something that would have taken months to discover in our old process."

Notice the pattern? Both teams learned the same lesson. In AI development, rapid iteration beats perfect planning. When models evolve monthly and costs drop quarterly, your advantage comes from adaptation speed, not prediction accuracy.

Common misconceptions that stall progress

Wrong beliefs slow teams more than slow models. Clear these mental blocks and watch your velocity increase.

You think you need perfect data to start. Wrong. Open-source fine-tuning handles messy data better than you'd expect. Yet 30% of GenAI pilots stall because teams wait for pristine datasets. Ship with what you have. Clean as you learn. Perfect data is a luxury you can't afford.

You assume vendor lock-in traps you forever. Old thinking. Open-source models and plummeting compute costs changed the game. Switching models takes weeks, not quarters. Build on modular foundations. Switch providers when better options appear. Lock-in only exists if you build for it.

You believe you need a five-year vision before starting. The real blockers are much simpler. Your team needs new skills, processes need updating, and compliance needs rethinking. Short AI sprints expose these gaps immediately. Vision without execution is just expensive daydreaming.

Moving forward with adaptive AI

The AI landscape rewards speed over perfection. While competitors debate roadmaps, adaptive teams ship and learn. In a world where capabilities and costs change monthly, moving fast beats planning perfectly.

This isn't just theory anymore. Companies embracing adaptive methods see tangible results: they respond to market changes in weeks instead of quarters, ship features customers love instead of features committees approved, and avoid expensive mistakes by learning cheap lessons early. The gap between adaptive and traditional teams widens daily, and planning alone can never bridge it.

The path forward is refreshingly simple. Start with small experiments and measure everything. Adjust based on data, not opinions. Treat planning as continuous improvement, not annual theater. Just as Superhuman helps teams save 4 hours weekly and handle twice as many emails, adaptive AI planning helps you ship twice as fast with half the waste.

The future belongs to teams matching AI's pace of change. Your roadmap should evolve as quickly as the technology it implements. With 66% expecting at least 3x productivity gains from AI within five years, the question isn't whether to abandon your 12-month roadmap. The question is how fast you can build something better.