You know that sinking feeling when you realize your AI project is going nowhere?

Most AI pilots burn through budgets and careers with nothing to show for it. Meanwhile, boards demand AI wins, customers expect innovation, and investors reward transformation stories.

Every failed project costs more than capital because it erodes trust, damages reputations, and creates organizational scar tissue that makes the next attempt even harder. This AI transformation playbook reveals the hidden failures that kill projects before anyone writes a line of code, giving you the insurance policy your balance sheet and leadership credibility need.

Why AI transformations fail before they start

Organizations launch AI initiatives with fanfare, hire expensive talent, and promise revolutionary results. Eighteen months later, they quietly shut down the project and hope nobody notices. This pattern repeats because companies make the same fundamental error: they focus on technology instead of organizational readiness.

The failure cascade starts with data foundations. Companies that spread resources across six or more AI projects typically fail, while those focusing on three or four deliver measurable returns. Why? Because focused companies ensure data quality before scaling, while scattered efforts mean nobody maintains the data hygiene that models require. When market conditions shift, these models lose accuracy fast because they're trained on outdated patterns, triggering a domino effect of organizational dysfunction.

Product teams chase growth metrics while risk management lacks veto authority, creating political battlegrounds where success becomes impossible. New hires can't spot problems because they lack context, while existing staff resist because they don't understand the technology. But the fatal flaw emerges when organizations keep pouring resources into failing projects because nobody defined what failure looks like. Momentum replaces judgment until millions are gone and careers are damaged.

Understanding this pattern helps you build safeguards before you start, not after you've failed.

The 15-minute test that could save your project

Your AI project will probably fail because you haven't answered five basic questions that determine success or disaster. This test takes 15 minutes and reveals exactly where your project will break down, allowing you to fix problems before they explode.

The AI reality check:

- Do all your executives agree on the top three AI projects for next year? YES/NO

- Can you get clean, useful data for your pilot right now? YES/NO

- Does one person own the budget and success metrics? YES/NO

- Do you have the right people on staff today? YES/NO

- Have you written down your ethics rules and legal requirements? YES/NO

Count your YES answers. Four or five means proceed. Fewer means stop and fix the gaps. Here's why each question predicts your fate.

Executive alignment keeps competing agendas from tearing the project apart: when your CFO wants cost reduction while your CMO demands innovation, the project becomes a casualty of internal politics. Data readiness can't be faked or postponed, because hoping to clean data "during implementation" guarantees failure. Single ownership creates accountability, while split ownership guarantees finger-pointing when problems arise. Current staffing reveals whether you're serious or delusional, because hiring plans aren't capabilities.

Written compliance frameworks prevent regulatory surprises that kill projects overnight. Each NO identifies exactly where you'll fail, giving you the chance to address these fundamentals before joining the graveyard of failed AI initiatives.
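The scoring rule for the reality check can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed tool: the short question keys and the `reality_check` function name are hypothetical labels introduced here, while the five questions and the four-or-more threshold come from the test above.

```python
# The five reality-check questions. The short keys are hypothetical labels.
QUESTIONS = {
    "executive_alignment": "Do all your executives agree on the top three AI projects for next year?",
    "data_readiness": "Can you get clean, useful data for your pilot right now?",
    "single_owner": "Does one person own the budget and success metrics?",
    "staff_in_place": "Do you have the right people on staff today?",
    "written_compliance": "Have you written down your ethics rules and legal requirements?",
}

PROCEED_THRESHOLD = 4  # four or five YES answers means proceed


def reality_check(answers: dict[str, bool]) -> dict:
    """Score the YES/NO answers and list every NO as a gap to fix."""
    missing = set(QUESTIONS) - set(answers)
    if missing:
        raise ValueError(f"Unanswered questions: {sorted(missing)}")
    # Each NO marks a place where the project is likely to break down.
    gaps = [key for key in QUESTIONS if not answers[key]]
    score = len(QUESTIONS) - len(gaps)
    return {"score": score, "proceed": score >= PROCEED_THRESHOLD, "gaps": gaps}
```

Calling `reality_check` with one NO returns a score of 4 and `proceed` set to `True`, with that question listed under `gaps`. A second NO drops the score to 3 and flips `proceed` to `False`.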

How to sell this to your board

Your board meeting is next week and they want to know if your AI project deserves funding or termination. Most presentations fail because they bury business value under technical complexity, but you can get approval in three slides by answering exactly what boards need to know.

Slide one builds credibility through transparency. List the five reality-check questions vertically with three columns: Evidence, Owner, and Mitigation. Populate each cell with one factual statement. This demonstrates you understand both opportunities and risks, earning trust because boards fund leaders who acknowledge challenges, not those who pretend they don't exist.

Slide two forces clarity through simplicity. Display your readiness score in a large circle, green for four or five YES answers and red for fewer, with nothing else on the slide. This creates immediate, visceral understanding: board members grasp your preparedness instantly, which sets up the exact conversation you need to have.

Slide three enables decision through specifics. Top left shows the precise metric you'll improve (for example, reducing customer churn from 12% to 8%), top right shows a realistic timeline (6-month pilot, 12-month rollout), and bottom shows maximum exposure ($2M cap before mandatory review). This gives directors everything needed for informed approval in seconds.

Start with business problems, not technology features. Assign clear ownership. End with specific requests. Boards reward this clarity and accountability because it shows you've thought through execution, not just ambition.

Why you need a failure budget

You're about to waste millions pretending your AI project can't fail when reality shows most AI pilots never generate positive returns. The solution isn't avoiding failure but planning for it intelligently, just like venture capitalists who assume most investments fail but structure portfolios where exceptional winners compensate for routine losses.

Apply this thinking by allocating AI budgets across three categories with disciplined ratios. Dedicate 10% to transformational moonshots that probably won't work but could revolutionize your business. Assign 20% to proven approaches successfully implemented by competitors. Reserve 70% for rapid learning experiments designed to fail fast and cheap.

This allocation protects capital while preserving upside because learning experiments become your competitive advantage when you structure them with tight scopes, fixed timelines, and binary success metrics. Over half of venture funding now targets AI despite high failure rates because sophisticated investors budget for learning, not just success. They track each category independently and terminate projects dispassionately when they exceed allocated resources.

Without this approach, every setback triggers crisis meetings and blame assignments. With it, setbacks become expected data points that transform AI investment from gambling into portfolio management. Call it strategic learning investment or innovation reserves, but establish it before spending begins so failure becomes education, not catastrophe.
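As a rough sketch, the 10/20/70 split and the "terminate when a category exceeds its allocation" rule could be tracked like this. The bucket names, function names, and the example budget figure are assumptions made for illustration; only the percentages come from the allocation described above.

```python
# Budget shares in whole percent, taken from the 10/20/70 split.
# Bucket names are hypothetical labels.
ALLOCATION_PERCENT = {
    "moonshots": 10,
    "proven_approaches": 20,
    "learning_experiments": 70,
}


def allocate_budget(total: float) -> dict[str, float]:
    """Split a total AI budget into the three categories."""
    return {
        bucket: total * percent / 100
        for bucket, percent in ALLOCATION_PERCENT.items()
    }


def buckets_over_limit(allocated: dict[str, float], spent: dict[str, float]) -> list[str]:
    """Return the categories whose spending exceeds their allocation.

    Each category is tracked independently, so an overrun in one
    cannot be hidden by underspending in another.
    """
    return [
        bucket for bucket, cap in allocated.items()
        if spent.get(bucket, 0.0) > cap
    ]
```

With a hypothetical total of 1,000,000, `allocate_budget` yields 100,000 for moonshots, 200,000 for proven approaches, and 700,000 for learning experiments. Passing actual spend to `buckets_over_limit` names the categories due for a stop decision.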

How vendors trap you (and how to escape)

That transformative AI vendor making impressive promises is constructing three traps that won't activate until you're too deep to escape. Understanding these mechanisms before signing protects your organization from costly lock-in that destroys budgets and stalls innovation.

Price escalation starts with attractive entry costs that establish dependency. After integration, usage-based pricing models activate and infrastructure costs can increase substantially within 18 months as introductory rates expire. Your workflows now depend on their platform, making migration painful.

Data imprisonment tightens the grip when your proprietary information trains their models. When you need to leave, data export becomes a complex negotiation where organizations routinely pay substantial fees to retrieve their own information.

Feature manipulation maintains dependence through critical capabilities that remain perpetually "in development" while you wait months for functionality that never materializes properly.

These traps devastate growth-stage companies where speed overrides scrutiny and contract details get minimal review. When surprise costs emerge, negotiating leverage has evaporated.

Prevention requires three non-negotiable terms before signing. First, mandate data portability with contracts guaranteeing complete export within 30 days at standard storage rates. Second, conduct annual exit simulations by actually attempting data extraction to expose hidden dependencies. Third, limit contract terms to one year maximum because short commitments maintain flexibility and competitive pressure. Vendors resisting these terms reveal their retention strategy relies on lock-in, not value. Make these protections mandatory or find partners who compete on merit rather than entrapment.

Write your divorce papers first

Before building anything, design its termination. Most transformations fail, so assuming success while ignoring this reality is delusional. Sophisticated leaders plan exits before entries because it transforms risk from existential threat to manageable cost.

Your termination plan must cover four elements, all written while judgment remains clear: shutdown triggers, data recovery, spending ceilings, and knowledge retention. Define automatic shutdown triggers, including model accuracy below 80%, costs exceeding 2x budget, a data breach, or a regulatory violation. Make these mechanical, not subjective, so emotion can't override logic. Establish data recovery protocols ensuring all models, datasets, and configurations transfer within 30 days in documented formats.

Set spending ceilings like disciplined investors who establish maximum acceptable losses where reaching limits triggers mandatory stops, not emergency funding requests. Secure knowledge retention by requiring vendors to document architectures and train your staff so intellectual property remains with you regardless of project outcome.

This plan delivers benefits beyond risk mitigation because companies with governance frameworks receive superior service. Providers know you can leave, so they work to keep you. Negotiations accelerate because terms are predetermined. Pivots become possible because switching costs are contained.

Document these terms before excitement impairs judgment so when projects struggle, you'll execute predetermined plans rather than make panicked decisions. Plan the divorce before the honeymoon, then work to make it unnecessary.
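To show what "mechanical, not subjective" shutdown triggers might look like, here is a minimal sketch. The `ProjectStatus` fields and trigger names are hypothetical; the thresholds (accuracy below 80%, costs above 2x budget, any data breach, any regulatory violation) are the ones named in the termination plan.

```python
from dataclasses import dataclass

MIN_ACCURACY = 0.80        # shutdown if model accuracy falls below 80%
MAX_COST_MULTIPLE = 2.0    # shutdown if costs exceed 2x the approved budget


@dataclass
class ProjectStatus:
    """Snapshot of a project. Field names are illustrative assumptions."""
    model_accuracy: float       # fraction between 0 and 1
    spend_to_date: float
    approved_budget: float
    data_breach: bool
    regulatory_violation: bool


def shutdown_triggers(status: ProjectStatus) -> list[str]:
    """Return every trigger that has fired. Any non-empty result means stop."""
    fired = []
    if status.model_accuracy < MIN_ACCURACY:
        fired.append("accuracy_below_80_percent")
    if status.spend_to_date > MAX_COST_MULTIPLE * status.approved_budget:
        fired.append("cost_over_2x_budget")
    if status.data_breach:
        fired.append("data_breach")
    if status.regulatory_violation:
        fired.append("regulatory_violation")
    return fired
```

Because the function only compares numbers and flags, nobody can argue a failing project past its limits in the moment. Changing a threshold requires editing the agreed constants, which is a deliberate decision rather than an emotional one.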

The talent problem nobody admits

Post a data science position and watch hundreds of applicants demand astronomical salaries. You hire promising talent at premium prices, then six months later, larger companies poach them with better offers. The expertise you developed walks out the door, forcing you to restart the expensive cycle. This pattern destroys AI initiatives faster than bad code ever could.

The problem compounds because growing companies compete on compensation alone, betting future funding covers current costs. When capital markets tighten, these talent strategies collapse spectacularly. The solution requires abandoning Silicon Valley's talent arms race and creating a game you can actually win through systematic capability building.

Pay premiums only for senior architects who design systems and develop others, multiplying capabilities rather than just contributing individually. Build balanced teams where these experts collaborate with traditional developers and domain specialists so knowledge transfers organically and risk distributes across people. Implement comprehensive training programs that transform existing staff because data literacy basics dramatically improve adoption rates and reduce resistance. Establish a chief AI officer role with genuine authority to coordinate efforts and prevent redundant initiatives.

Most critically, codify everything obsessively by documenting every architectural decision, failed experiment, and learned lesson. Code reviews become teaching opportunities while standard procedures capture tribal knowledge. When people inevitably leave, institutional memory persists because you're constructing organizational capability, not renting it through employees.

The numbers that matter

You're tracking the wrong metrics because most AI dashboards display impressive-sounding numbers that predict nothing about value creation. Here are four measurements that actually indicate whether you're building or destroying value, allowing you to spot problems before they become disasters.

ROI per engineer reveals talent productivity: take the revenue attributable to AI initiatives, divide it by total investment in data science salaries and infrastructure, and express the result per $100,000 invested. This ratio must increase quarterly or you're adding headcount without impact. Focus ratio exposes discipline by dividing profitable AI projects by total active projects. Winners maintain ratios above 0.5 by concentrating on three or four initiatives while losers spread across six or more, achieving nothing meaningful anywhere.

Training penetration measures organizational readiness through percentage of employees completing AI fundamentals training. Low penetration guarantees resistance because people fear what they don't understand, while higher penetration creates demand pull rather than technology push. Governance maturity predicts execution success since organizations with formal AI steering committees achieve 40% better outcomes, but only when committees have budget authority and project termination power.

These metrics interconnect to reveal systemic health. Declining ROI per engineer combined with falling focus ratio means you're dissipating talent across too many initiatives. Low training penetration explains why technically excellent projects fail adoption. Absent governance structures guarantee political infighting.

Monitor these monthly because they identify problems quarters before financial metrics show damage and prescribe specific corrective actions. Truth lives in simple numbers while everything else is theater.
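The two ratio metrics are simple enough to compute directly. The sketch below is one plausible reading of the definitions above; the function names and the example figures are assumptions, and how revenue gets attributed to AI initiatives is left to your own accounting.

```python
def roi_per_100k(ai_revenue: float, salaries: float, infrastructure: float) -> float:
    """Revenue attributable to AI per $100,000 invested in salaries and infrastructure."""
    investment = salaries + infrastructure
    if investment <= 0:
        raise ValueError("Total investment must be positive")
    return ai_revenue / (investment / 100_000)


def focus_ratio(profitable_projects: int, active_projects: int) -> float:
    """Profitable AI projects divided by total active AI projects."""
    if active_projects <= 0:
        raise ValueError("There must be at least one active project")
    return profitable_projects / active_projects


def is_rising(quarterly_values: list[float]) -> bool:
    """True only if every quarter improves on the one before it."""
    return all(later > earlier
               for earlier, later in zip(quarterly_values, quarterly_values[1:]))
```

For a hypothetical 600,000 in attributable revenue against 300,000 in salaries and 100,000 in infrastructure, `roi_per_100k` returns 150,000 of revenue per 100,000 invested. Three profitable projects out of four active gives a focus ratio of 0.75, which clears the 0.5 bar.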

Learn to say no (and mean it)

Every AI proposal sounds revolutionary in PowerPoint, but approve them all and you'll create an expensive graveyard of abandoned prototypes. The discipline to reject good ideas in favor of great ones separates successful transformations from expensive failures because organizational capacity, not technology, constrains success.

Successful companies focus on three or four AI initiatives while failures attempt six or more. Each project requires executive sponsorship, technical talent, clean data, and change management. Spread these resources too thin and nothing succeeds. The solution demands courage: reserve 30% of your AI budget for absolutely nothing.

This forces every proposal to compete for truly scarce resources rather than assuming availability, creating the discipline that drives results. Implement ruthless scoring by evaluating proposals on executive championship strength and revenue impact potential. Only high scores on both receive funding while everything else goes on a public rejection list visible to all stakeholders. Review quarterly, but graduation requires new evidence or sponsors, not persistence.

This transparency prevents political maneuvering that corrupts focus. Small companies maintain this discipline naturally because capital scarcity enforces choices. Large organizations must artificially create similar constraints or drown in well-intentioned experiments. That 30% buffer protects against your own enthusiasm, transforming random experimentation into strategic betting.

Fewer projects yield superior results and create a strategy everyone understands because the ability to say no creates the focus required to achieve something meaningful.
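One way to make the two-axis scoring and the 30% reserve concrete is sketched below. Several details are assumptions added for illustration: the 1-to-5 scoring scale, treating 4 or higher as a "high" score, and funding the strongest proposals first until the unreserved budget runs out. Only the two scoring axes, the reserve, and the rejection list come from the approach described above.

```python
from dataclasses import dataclass

RESERVE_PERCENT = 30   # share of the AI budget held back for nothing
HIGH_SCORE = 4         # assumption: 1-5 scale where 4 or more counts as high


@dataclass
class Proposal:
    name: str
    sponsorship: int      # executive championship strength, 1-5 (assumed scale)
    revenue_impact: int   # revenue impact potential, 1-5 (assumed scale)
    cost: float


def triage(proposals: list[Proposal], total_budget: float) -> tuple[list[str], list[str]]:
    """Split proposals into a funded list and a public rejection list."""
    available = total_budget * (100 - RESERVE_PERCENT) / 100
    funded, rejected = [], []
    # Assumption: consider the strongest combined scores first.
    ranked = sorted(proposals,
                    key=lambda p: p.sponsorship + p.revenue_impact,
                    reverse=True)
    for p in ranked:
        high_on_both = p.sponsorship >= HIGH_SCORE and p.revenue_impact >= HIGH_SCORE
        if high_on_both and p.cost <= available:
            funded.append(p.name)
            available -= p.cost
        else:
            rejected.append(p.name)
    return funded, rejected
```

Note that a proposal can score high on both axes and still land on the rejection list if the unreserved budget is already committed. That is the reserve doing its job: good ideas compete for scarce resources instead of assuming availability.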

The enemy inside your walls

Your AI project won't die from technical failure. Internal stakeholders who benefit from its failure will systematically dismantle it using three predictable tactics that show up in most failed transformations.

Scope inflation begins when each meeting adds "critical" requirements until the project collapses under undeliverable complexity. Information hoarding emerges when previously accessible datasets suddenly face "privacy concerns" or "compliance restrictions" that didn't exist last quarter. Success redefinition delivers the killing blow as performance metrics shift from accuracy to speed to cost to satisfaction, making success impossible when victory conditions change monthly.

This happens because AI threatens organizational power structures by shifting budgets, redistributing authority, and evaporating job security. Fear drives sabotage, but expressing fear appears weak, so people attack the project using procedural weapons. Early warning signs include mysterious delays in data access, requirement documents that expand without adding clarity, and presentations that substitute feelings for metrics. Recognize these signals to act before damage becomes irreversible.

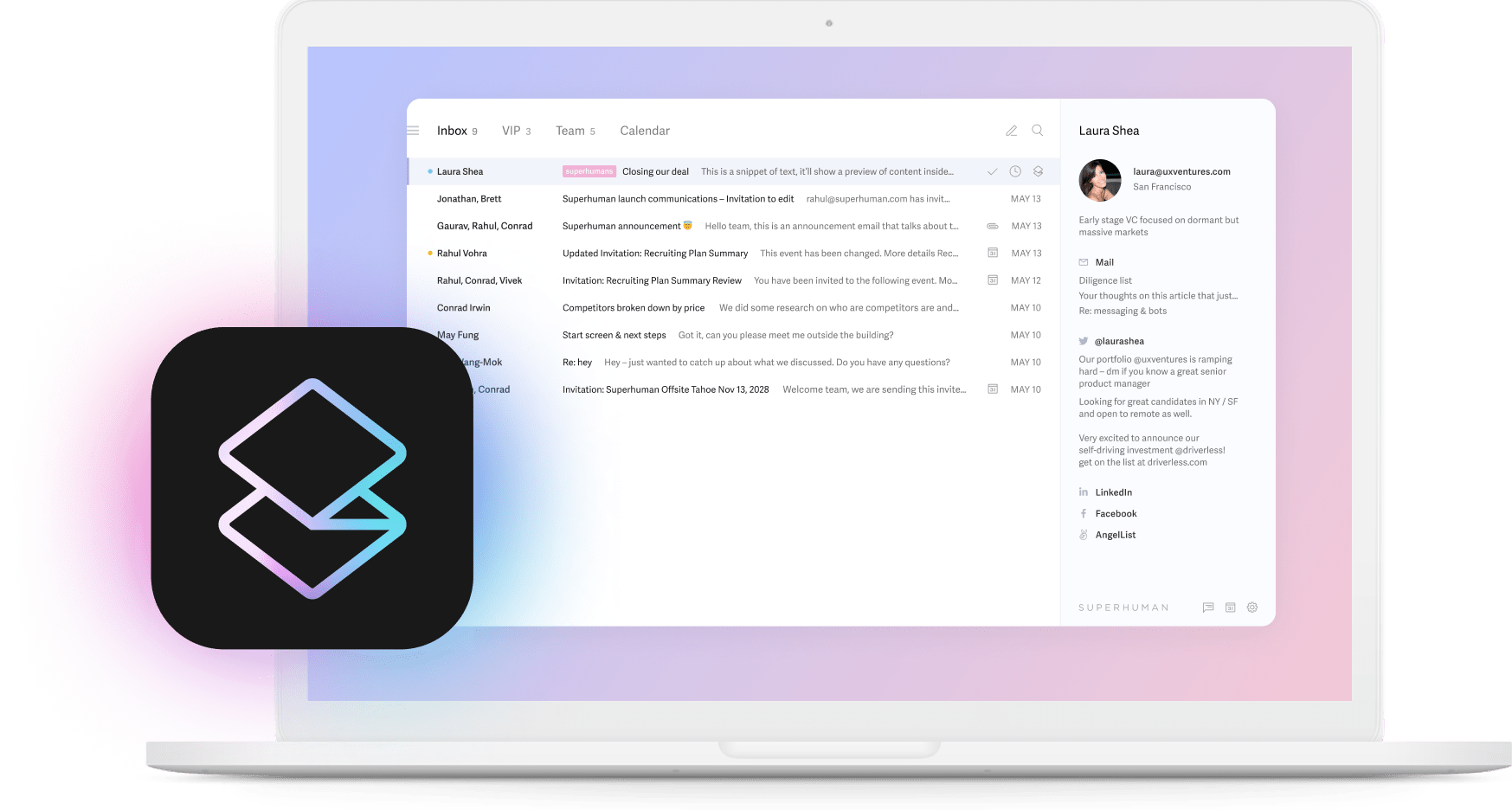

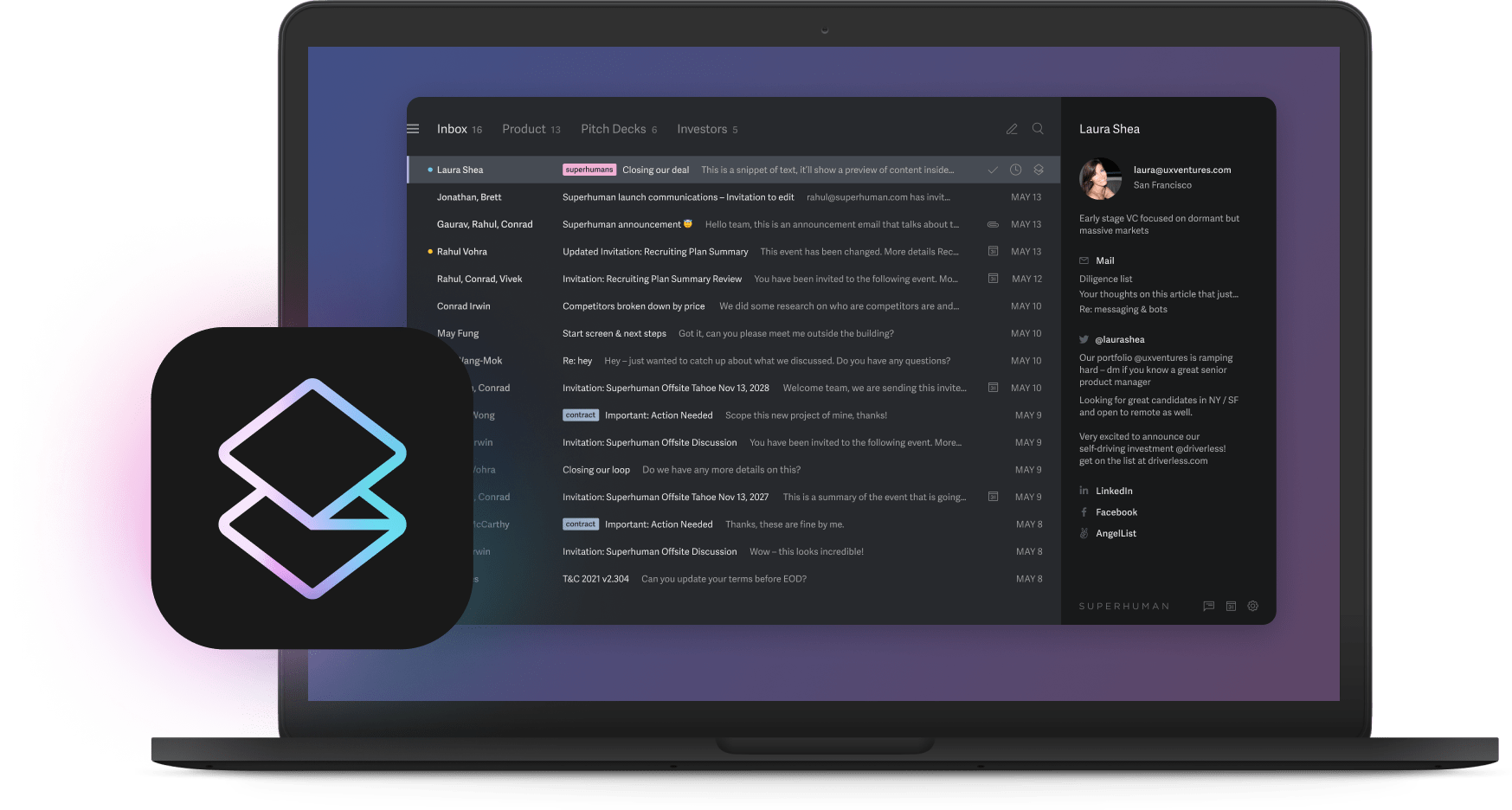

Address resistance by meeting opponents individually and asking what negative outcome they fear from this project's success. Named fears become manageable while hidden fears metastasize. Restructure incentives so AI success benefits everyone because when everyone wins together, resistance evaporates. Ensure transparent communication using tools like Superhuman's Shared Conversations that enable teams to collaborate openly on decisions, eliminating hidden agendas.

For immovable obstacles, facilitate transitions elsewhere because high-trust cultures remove blockers quickly when organizational success supersedes individual preservation. Make resistance expensive while making cooperation profitable, then watch behavior align with rewards.

Build your one-page battle plan

Everything required to manage AI strategy fits on one page because complexity enables confusion and confusion hides failure. This single page answers critical questions and enforces disciplined execution better than any lengthy report or presentation ever could.

Your one-pager contains five sections that prevent every common failure mode. Project scores list each initiative with its 5-question assessment where only 4+ scores receive funding, preventing wishful thinking. Capital allocation displays your three investment buckets with specific amounts (10% moonshots, 20% proven concepts, 70% learning experiments), enforcing portfolio discipline. Exit protocols detail vendor termination triggers, data recovery deadlines, spending caps, and knowledge transfer requirements in one line per vendor, preventing lock-in.

Rejection list shows your politics-versus-value grid (executive championship against revenue impact) and identifies what you're not pursuing, maintaining focus. Succession planning identifies who assumes each critical role if key people leave, ensuring continuity. Review quarterly with stress testing by simulating a vendor tripling prices or your AI lead resigning. Can you respond immediately? If not, address gaps now.

Companies that concentrate on a few projects outperform those managing many because focus beats activity. This page answers the board's fundamental question about accountability when things go wrong, transforming AI from mysterious technology into a manageable business initiative. Print it, distribute it, and let your CFO challenge it. When they can't break your plan, you're prepared to invest without gambling. Most AI projects fail when complexity obscures accountability.

Your one-pager makes both the complexity and the accountability gap impossible to hide.