Your automation projects flood your inbox with alerts while you wait months to know if they actually work. You've fed developer hours, cloud spend and executive attention into AI automation for quarters without clear ROI data. Meanwhile, competitors figure out what works and scale successful processes while you're still measuring.

Here's what matters: Most professionals expect AI to drive at least a 3x increase in productivity over the next five years, yet most companies still wait for quarterly reviews to evaluate automation ROI. That's too slow when business processes shift monthly and email workflows reveal success signals daily.

You need to know within 30 days whether your AI-powered automation projects make money or burn it. This four-phase approach tracks what matters: establish baselines, monitor usage, follow financial impact, then decide. You'll prove your automation works or cut losses fast while competitors wait for quarterly reports.

The secret is measuring automation through email workflows. Email metrics move faster than traditional dashboards, giving you ROI signals weeks earlier.

The framework that cuts through automation noise

Traditional ROI models tell you what happened last quarter when you need to know what's happening today. Track automation progress through email workflows in days, not quarters, and you'll spot winners and losers while competitors collect data.

Start with three questions: Are people using automated processes for real work? Are business processes making or saving money right now? Can you shut down failing automation cleanly?

Companies with structured frameworks see ROI within nine to 12 months compared to 18-plus months without clear metrics. Email-based tracking accelerates these timelines because automation generates immediate email feedback once it takes hold.

The framework breaks into four phases. Day 0 captures current process costs and throughput using email volume as a business activity proxy. Days 1-7 show whether teams depend on automated processes or ignore notifications. Days 8-21 track cash flow through faster approvals and reduced manual processing. Days 22-30 force the decision: scale, fix or kill.

Day 0: Build your automation safety net

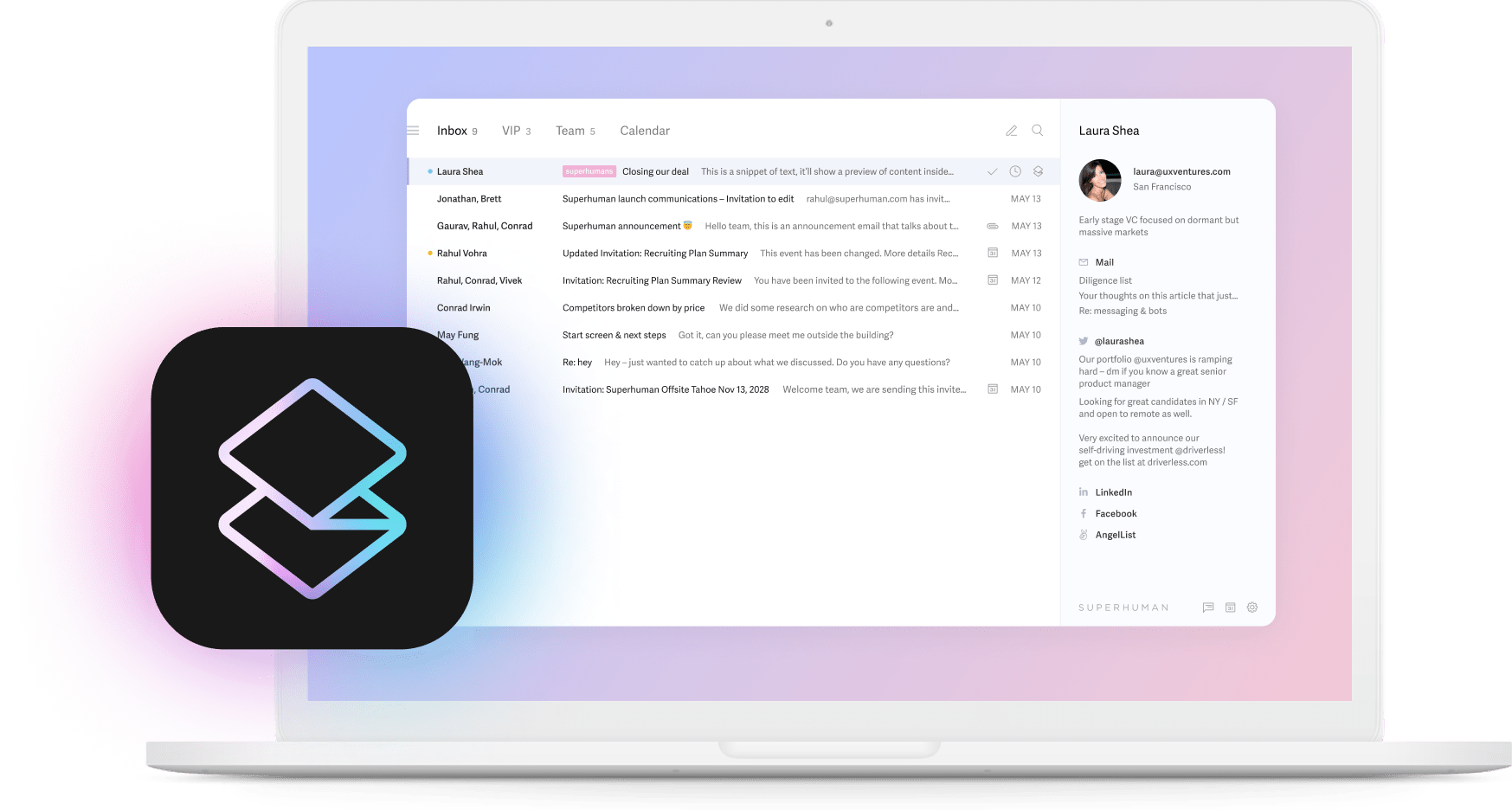

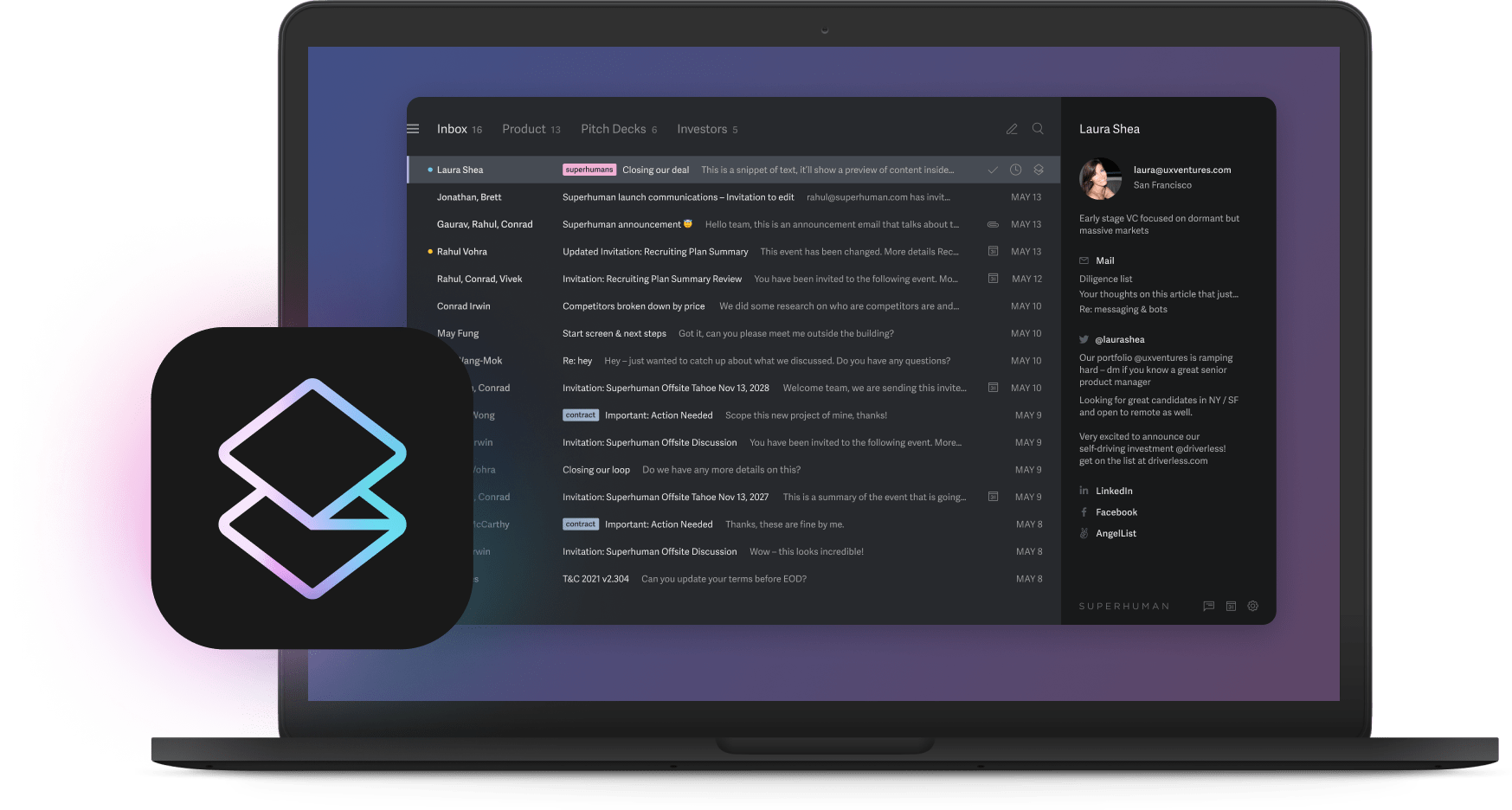

Before processing a single automated workflow, record three baseline numbers: current cost per transaction, error rate for manual processes and revenue per completion. Track these through email volume and response patterns using Split Inbox to organize measurements cleanly.
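The Day 0 arithmetic is small enough to sketch. This minimal Python example assumes you can pull one period's totals (process cost, transaction count, error count, revenue and completions) from your own records. The `Baseline` class, the `capture_baseline` helper and every figure in the example are hypothetical. The point is to freeze the three numbers before automation goes live, so later comparisons have something fixed to measure against.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Baseline:
    """Day 0 snapshot of a manual process (illustrative field names)."""

    cost_per_transaction: float
    error_rate: float  # fraction of transactions that contained an error
    revenue_per_completion: float


def capture_baseline(total_cost: float, transactions: int, errors: int,
                     revenue: float, completions: int) -> Baseline:
    """Turn one period's raw totals into the three Day 0 numbers."""
    if transactions <= 0 or completions <= 0:
        raise ValueError("need at least one transaction and one completion")
    return Baseline(
        cost_per_transaction=total_cost / transactions,
        error_rate=errors / transactions,
        revenue_per_completion=revenue / completions,
    )


# One month of a manual approval process; the figures are invented.
baseline = capture_baseline(total_cost=12_000.0, transactions=800, errors=40,
                            revenue=95_000.0, completions=760)
print(baseline)
# Baseline(cost_per_transaction=15.0, error_rate=0.05, revenue_per_completion=125.0)
```

Making the dataclass frozen is a deliberate choice: once recorded, the baseline should not drift as the project evolves.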

Automation frameworks emphasize turnaround time and error reduction as indicators that move quickly once automation takes hold. Email workflows reveal these changes faster because automated processes generate immediate feedback through notifications and status updates.

Set up automated spending alerts delivered through email. Write a script that checks your billing API and sends a warning when daily costs jump past a threshold you set. Split Inbox ensures critical financial alerts don't get buried among process notifications.
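The spending-alert script could look roughly like this Python sketch. It assumes a list of daily costs has already been pulled from your billing provider (that call is not shown, because every vendor's API differs). It treats a "jump" as today's spend exceeding 1.5 times the trailing seven-day average and builds the warning with the standard library's `email.message.EmailMessage`. The thresholds, project name, addresses and SMTP host are all placeholders.

```python
import smtplib
from email.message import EmailMessage
from statistics import mean


def cost_jumped(daily_costs: list[float], threshold: float = 1.5,
                window: int = 7) -> bool:
    """True when the latest day's spend exceeds `threshold` times the
    average of the `window` days before it."""
    if len(daily_costs) < window + 1:
        return False  # not enough history to call anything a jump
    trailing_avg = mean(daily_costs[-window - 1:-1])
    return trailing_avg > 0 and daily_costs[-1] > threshold * trailing_avg


def build_alert(project: str, today: float, recipient: str) -> EmailMessage:
    """Compose the warning email; the sender address is a placeholder."""
    msg = EmailMessage()
    msg["Subject"] = f"[Cost alert] {project}: ${today:,.2f} today"
    msg["From"] = "cost-monitor@example.com"
    msg["To"] = recipient
    msg.set_content(
        f"Daily spend for {project} reached ${today:,.2f}, well above its "
        "trailing average. Check billing before the overrun compounds."
    )
    return msg


def send_alert(msg: EmailMessage, smtp_host: str = "localhost") -> None:
    """Deliver through an SMTP relay you control (host is an assumption)."""
    with smtplib.SMTP(smtp_host) as smtp:
        smtp.send_message(msg)


# These costs would come from your billing provider; the numbers are made up.
costs = [210.0, 195.0, 205.0, 200.0, 190.0, 215.0, 200.0, 480.0]
if cost_jumped(costs):
    alert = build_alert("invoice-automation", costs[-1], "finance-lead@example.com")
    # send_alert(alert)  # enable once an SMTP relay is configured
```

The 1.5x multiplier and seven-day window are arbitrary starting points. A fixed dollar ceiling works just as well if your spend is predictable.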

Your kill switch needs four parts: a one-command rollback through feature flags that completes in seconds; pre-written email templates stored in Snippets for instant deployment; clear ownership, with one person who can make the call without approval; and multiple layers at the process, application and network levels to prevent partial failures.
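To make the feature-flag and layering ideas concrete, here is an in-memory Python sketch. A real setup would sit on top of whatever flag service you already run. `KillSwitch`, the layer names and the `ops-lead` owner are illustrative assumptions, not any specific product's API. Requiring every layer to be on before automation runs means that a layer which fails to switch off cannot keep the automation alive by itself.

```python
from dataclasses import dataclass, field

# Layer names taken from the text; map them to your own systems.
LAYERS = ("process", "application", "network")


@dataclass
class KillSwitch:
    """In-memory stand-in for a feature-flag service (illustrative only)."""

    owner: str  # the one person allowed to make the call
    flags: dict[str, bool] = field(
        default_factory=lambda: {layer: True for layer in LAYERS}
    )

    def automation_enabled(self) -> bool:
        # Every layer must be on for automation to run, so any single
        # layer that is off is enough to stop it.
        return all(self.flags.values())

    def _check_owner(self, requested_by: str) -> None:
        if requested_by != self.owner:
            raise PermissionError(f"only {self.owner} can flip the kill switch")

    def kill(self, requested_by: str) -> None:
        """One call disables automation at every layer."""
        self._check_owner(requested_by)
        for layer in self.flags:
            self.flags[layer] = False

    def restore(self, requested_by: str) -> None:
        """Re-enable every layer once the incident is resolved."""
        self._check_owner(requested_by)
        for layer in self.flags:
            self.flags[layer] = True


switch = KillSwitch(owner="ops-lead")
switch.kill(requested_by="ops-lead")
print(switch.automation_enabled())  # False
```

Running this during the tabletop exercise is one way to confirm the single-owner rule and the one-call rollback behave as intended.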

Test emergency procedures with a tabletop exercise. Practice alerts, rollback and restart until every step finishes within 10 minutes. Use Shared Conversations to coordinate tests without cluttering individual inboxes.

Days 1-7: Track real business process adoption

The first week separates real automation impact from PowerPoint success. What matters is whether teams choose automated processes when they have actual work to finish.

Track Daily Active Process Users as a percentage of the people who could use automated workflows. Measure process routing percentage: how much work flows through automation versus manual completion. If your system handles 15% of approvals while managers process 85% manually, you have an adoption problem that email patterns reveal immediately.
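Both adoption metrics are simple ratios. This sketch assumes you can count, for a single day, the active and eligible users plus the work items completed through automation versus by hand. The function name and user counts are hypothetical, and the 15/85 split comes from the approvals example in the text.

```python
def adoption_metrics(active_users: int, eligible_users: int,
                     automated_items: int, manual_items: int) -> dict[str, float]:
    """Return Daily Active Process Users and routing share as percentages."""
    if eligible_users <= 0:
        raise ValueError("eligible_users must be positive")
    total_items = automated_items + manual_items
    return {
        # share of people who could use the automation and did today
        "dapu_pct": 100 * active_users / eligible_users,
        # share of today's work that flowed through automation
        "routing_pct": 100 * automated_items / total_items if total_items else 0.0,
    }


# The 15% / 85% approval split from the text, with made-up user counts.
print(adoption_metrics(active_users=12, eligible_users=40,
                       automated_items=15, manual_items=85))
# {'dapu_pct': 30.0, 'routing_pct': 15.0}
```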

Research shows depth of usage predicts long-term success better than breadth. Use Read Statuses to track whether process notification emails get opened and acted upon, revealing adoption patterns within days.

Watch override rates and manual completion percentages for signs that people don't trust your automation. A rising number of abandoned processes, where staff start an automated workflow and then finish it manually, indicates a broken system. Email traffic patterns show these problems faster than process dashboards.

Talk to team members for five minutes each to hear what analytics don't capture. Comments like "automated processes take longer than manual work" predict problems before dashboards show declining usage.

Warning signs appear quickly. Daily automation usage that drops three days running, override rates above 30% or adoption stuck below 20% all point toward failure. Companies monitoring email-driven metrics can redirect resources before losses accumulate.
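The three warning signs translate into a small check. This is a sketch under stated assumptions: usage is a list of daily counts, rates are fractions between 0 and 1, and "stuck below 20%" is simplified to the current reading. You may want to require several consecutive low readings before acting. The function name is hypothetical.

```python
def warning_signs(daily_usage: list[int], override_rate: float,
                  adoption_rate: float) -> list[str]:
    """Flag the three failure signals; rates are fractions between 0 and 1."""
    signs = []
    recent = daily_usage[-4:]  # three day-over-day drops need four readings
    if len(recent) == 4 and all(later < earlier
                                for earlier, later in zip(recent, recent[1:])):
        signs.append("usage dropped three days running")
    if override_rate > 0.30:
        signs.append("override rate above 30%")
    if adoption_rate < 0.20:
        signs.append("adoption below 20%")
    return signs


print(warning_signs([120, 110, 96, 81], override_rate=0.34, adoption_rate=0.25))
# ['usage dropped three days running', 'override rate above 30%']
```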

Days 8-21: Follow automation dollars

These two weeks focus on one question: Do your automation projects create more value than they cost? Build a daily financial summary that shows money flowing in and out on one screen, and use Auto Summarize to process automation reports faster.

Track five numbers: revenue increases from faster processing, direct costs including infrastructure and licenses, error correction expenses from automation mistakes, unexpected infrastructure spikes and net result marked green or red. Email volume from each process provides real-time financial indicators.
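Here is a minimal Python sketch of the daily summary, assuming each of the four inputs is already expressed in dollars for the same day. `DailySummary` and its field names are illustrative. The net result is the revenue gain minus the three cost lines, marked green at zero or above and red below zero.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DailySummary:
    """One day's automation economics, all in dollars (illustrative fields)."""

    revenue_gain: float       # extra revenue from faster processing
    direct_costs: float       # infrastructure and licenses
    error_correction: float   # cost of fixing automation mistakes
    unexpected_spikes: float  # unplanned infrastructure charges

    @property
    def net(self) -> float:
        return (self.revenue_gain - self.direct_costs
                - self.error_correction - self.unexpected_spikes)

    @property
    def status(self) -> str:
        return "green" if self.net >= 0 else "red"

    def line(self) -> str:
        """One-line view for the daily email or dashboard."""
        return f"net {self.net:+,.2f} ({self.status})"


today = DailySummary(revenue_gain=2_400.0, direct_costs=900.0,
                     error_correction=300.0, unexpected_spikes=150.0)
print(today.line())  # net +1,050.00 (green)
```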

Faster processing drives revenue because the same team handles more transactions. AI saves professionals at least one full workday weekly, and process automation amplifies these gains. Lower error rates reduce refunds, rework costs and complaints.

Automation initiatives commonly deliver 40% to 70% reductions in process time within the first month. A single fraud case caught by automated monitoring can justify an entire project's cost.

Watch for creeping costs. Training expenses accumulate through workshops and coaching. Engineering time for integration doesn't always hit obvious budget lines. Rework from automation failures eats projected savings when people fix what AI-powered systems broke.

Some benefits matter strategically. Faster approvals improve customer satisfaction. Less repetitive work reduces turnover. Automated processes create competitive gaps that compound over time.

Use Shared Conversations to keep teams aligned on financial results without endless email chains. By Day 21, you'll know in dollar amounts whether automation projects make or lose money.

Days 22-30: Make the automation call

By Day 22, your data tells a clear story demanding action, not analysis. Use simple math: positive returns mean scale, break-even means fix, negative returns mean kill.
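The scale, fix or kill rule needs one extra decision the text leaves open: how close to zero counts as break-even. This sketch assumes a band of plus or minus 5% return on total cost. The `decide` function and that tolerance are hypothetical choices you would tune to your own risk appetite.

```python
def decide(total_benefit: float, total_cost: float, tolerance: float = 0.05) -> str:
    """Map 30-day results to 'scale', 'fix' or 'kill'.

    Break-even is assumed to mean a return within +/- `tolerance` of total
    cost (5% by default); that band is an assumption, not a fixed rule.
    """
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    roi = (total_benefit - total_cost) / total_cost
    if roi > tolerance:
        return "scale"
    if roi < -tolerance:
        return "kill"
    return "fix"


print(decide(total_benefit=118_000, total_cost=100_000))  # scale
```

The same three outcomes can also back a green, yellow or red vote in a recurring portfolio review.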

Present findings in five slides, using Superhuman AI to draft clear updates. Show the headline number for net return. Display usage trends and daily financial impact. State your recommendation clearly. List specific next steps with timelines.

Scale when winning. Positive returns justify expansion, as long as you protect what already works. Plan your next two experiments to cover more workflows. Keep monitoring costs daily and tracking improvements so gains stay visible as automation scales.

Fix when breaking even. Break-even results call for a focused two-week improvement sprint. Rank problems by financial impact: expensive error correction first, minor issues last. Set clear success targets like 10% faster processing or 15% higher adoption.
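Checking a sprint against its targets comes down to comparing before and after numbers. This sketch assumes processing time is measured in minutes and adoption as a fraction, and it reads "15% higher adoption" as a relative increase rather than 15 percentage points. The function name and defaults are illustrative.

```python
def sprint_results(before_minutes: float, after_minutes: float,
                   before_adoption: float, after_adoption: float,
                   speed_target: float = 0.10,
                   adoption_target: float = 0.15) -> dict[str, bool]:
    """Check a fix sprint against its targets.

    Both gains are relative: processing time should fall by at least
    `speed_target`, and adoption should rise by at least `adoption_target`.
    """
    if before_minutes <= 0 or before_adoption <= 0:
        raise ValueError("baseline values must be positive")
    time_saved = (before_minutes - after_minutes) / before_minutes
    adoption_gain = (after_adoption - before_adoption) / before_adoption
    return {
        "faster_processing": time_saved >= speed_target,
        "higher_adoption": adoption_gain >= adoption_target,
    }


print(sprint_results(before_minutes=20.0, after_minutes=17.0,
                     before_adoption=0.40, after_adoption=0.44))
# {'faster_processing': True, 'higher_adoption': False}
```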

Kill when losing money. Negative returns mean stop immediately. Use your Day 0 kill switch to disable automation while keeping other systems running. Save logs, write a brief summary of what didn't work and move resources to better opportunities within 24 hours.

Leaders want decisions, not explanations. Start with the bottom line number compared to baseline. State your choice clearly. Give one sentence on risks and one on opportunities.

Turn speed into competitive advantage

Reserve two hours monthly to ask one question about every automation project: Does this still make money right now? Bring together leaders, engineers, finance and operations with current performance data.

Keep meetings focused. Spend 10 minutes reviewing Day 0 metrics. Look at adoption trends, daily financial summaries and system problems. Vote quickly: green to scale, yellow to fix, red to kill. Allow 15 minutes of discussion per project. Time pressure forces decisions based on results, not presentations.

Industry-leading companies are three times more likely to report significant AI productivity gains because they measure what matters and act quickly. They use Instant Reply to respond to automation alerts faster and coordinate teams around data-driven choices.

Make this approach stick with three rules: assign executives with the authority to settle territorial disputes; fold reviews into existing meetings instead of adding new ones; and store decisions in dashboards that update automatically.

Start using the 30-day framework before competitors do. Monthly discipline keeps your automation portfolio focused on processes that generate cash rather than reports. Stop funding automation that sounds innovative but doesn't improve process speed. Start knowing within a month which investments create value and which need to end.

Teams using Superhuman save four hours per person weekly by making email workflows effortless. Apply that efficiency to measuring automation success, and you'll optimize AI-powered projects while competitors wait for quarterly reviews.