The average professional receives 121 emails a day and takes about 12 hours to reply. That volume creates three problems: hours disappear into writing and rewriting replies, sensitive data sits exposed without proper protection, and teams duplicate responses or lose context.

The right AI email reply generator changes this. These tools draft replies that match your voice, encrypt data properly, and help teams coordinate without stepping on each other.

Here's how to find one that works. You'll learn to eliminate bad vendors fast, define what success looks like, and separate must-have features from nice-to-haves. Then you'll test the best options and roll out the winner.

Evaluation checklist

Want to save yourself from sitting through demos that go nowhere? Spend two minutes on this checklist first. A vendor that misses a box deserves hard questions, and one that misses two or more isn't worth your time.

- Does the platform encrypt everything, both in transit and at rest on its servers? Can the vendor show you a current SOC 2 report and document GDPR compliance, for example with a signed data processing agreement?

- Does the AI read the whole email thread, or does it treat every message as if it were the first one? Can you control the tone so replies match your brand voice and follow your email format?

- Will this thing save at least 4 hours per person every week? If not, why bother?

- Does it work with Gmail, Outlook, or whatever email app you're using without requiring weird workarounds?

- Are collaboration features built in so teams can share templates, see ongoing conversations, and avoid stepping on each other?

- Can different departments adjust settings and tone without needing a programmer?

- Is pricing clear upfront with no surprise fees?

- Will you get help from real humans during setup and after?

Miss even one of these and you're looking at a tool that'll slow you down instead of speeding you up. Miss two or more? Move on immediately.
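If you are screening several vendors at once, it can help to record each answer as yes or no and apply the rule mechanically. The Python sketch below assumes you tally the answers yourself. The item labels and the `screen_vendor` function are hypothetical. The thresholds follow the rule above: one miss is a red flag, and two or more means drop.

```python
# Hypothetical screening helper: one short label per checklist question.
CHECKLIST = [
    "encryption in transit and at rest, SOC 2 / GDPR evidence",
    "thread-aware drafts with tone control",
    "saves at least 4 hours per person per week",
    "works natively with your email client",
    "built-in collaboration",
    "no-code settings per department",
    "transparent pricing",
    "human support during and after setup",
]


def screen_vendor(answers: dict[str, bool]) -> str:
    """Return 'proceed', 'red flag', or 'drop' based on how many boxes are missed."""
    # An unanswered question counts as a miss: if the vendor can't show it, it doesn't check the box.
    misses = sum(1 for item in CHECKLIST if not answers.get(item, False))
    if misses == 0:
        return "proceed"
    if misses == 1:
        return "red flag"
    return "drop"


vendor_a = {item: True for item in CHECKLIST}
vendor_b = {**vendor_a, "transparent pricing": False}
print(screen_vendor(vendor_a))  # proceed
print(screen_vendor(vendor_b))  # red flag
```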

Define team needs and success metrics

Before you look at any tool, figure out what's broken right now. It could be your sales team hunting through old emails while prospects go cold, or your operations team fielding the same process questions every day.

Turn these problems into measurable goals. Sales might cut prospect reply time from eight hours to two. Support could target responses in under 60 minutes with satisfaction scores of 90% or higher. Leadership might track the share of urgent messages handled the same day. Operations could measure how many emails flow directly into project systems.

Before talking to vendors, create a simple worksheet with one row per team, covering current problems, desired outcomes, and success metrics. Add baseline numbers: current response times, weekly email volume, and time spent writing.

Track everything for one week before testing. Compare numbers side by side when trials end. If the tool doesn't hit your targets, keep looking.
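A spreadsheet works fine for the worksheet. If you prefer to keep it in code, here is one minimal way to model a row and flag missed targets after the trial. `MetricRow` and `missed_targets` are hypothetical names. The sales and support targets come from the examples above, and the baselines (except sales) and all trial results are placeholders.

```python
from dataclasses import dataclass


@dataclass
class MetricRow:
    """One worksheet row: a team's metric with its baseline, target, and trial result."""
    team: str
    metric: str
    baseline: float      # measured during the pre-trial tracking week
    target: float
    trial_result: float
    lower_is_better: bool = True  # True for reply times; set False for satisfaction scores

    def hit_target(self) -> bool:
        if self.lower_is_better:
            return self.trial_result <= self.target
        return self.trial_result >= self.target


def missed_targets(rows: list[MetricRow]) -> list[str]:
    """List every team metric that fell short of its target after the trial."""
    return [f"{row.team}: {row.metric}" for row in rows if not row.hit_target()]


rows = [
    MetricRow("Sales", "prospect reply time (hours)", baseline=8, target=2, trial_result=3.5),
    MetricRow("Support", "first response (minutes)", baseline=180, target=60, trial_result=45),
    MetricRow("Support", "satisfaction (%)", baseline=84, target=90, trial_result=92,
              lower_is_better=False),
]
print(missed_targets(rows))  # ['Sales: prospect reply time (hours)']
```

An empty list means every team hit its target. Anything listed is a reason to keep looking.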

Shortlist and compare top tools

Now you can match products to your success metrics. Look for time savings, security fit, and collaboration strength. Run a quick feature check and drop anything that misses two or more non-negotiables before you test anything.

Six tools made it through that filter.

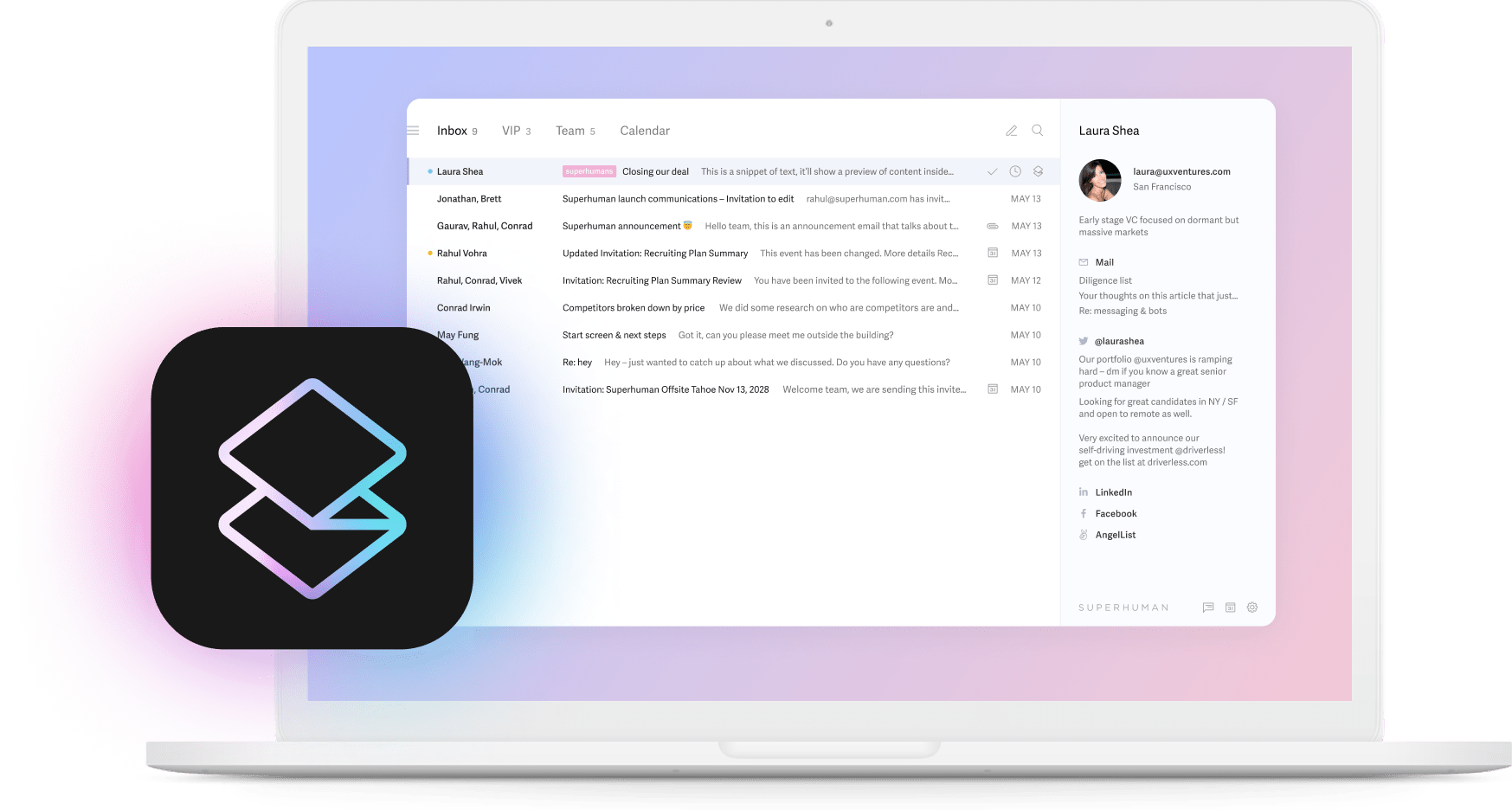

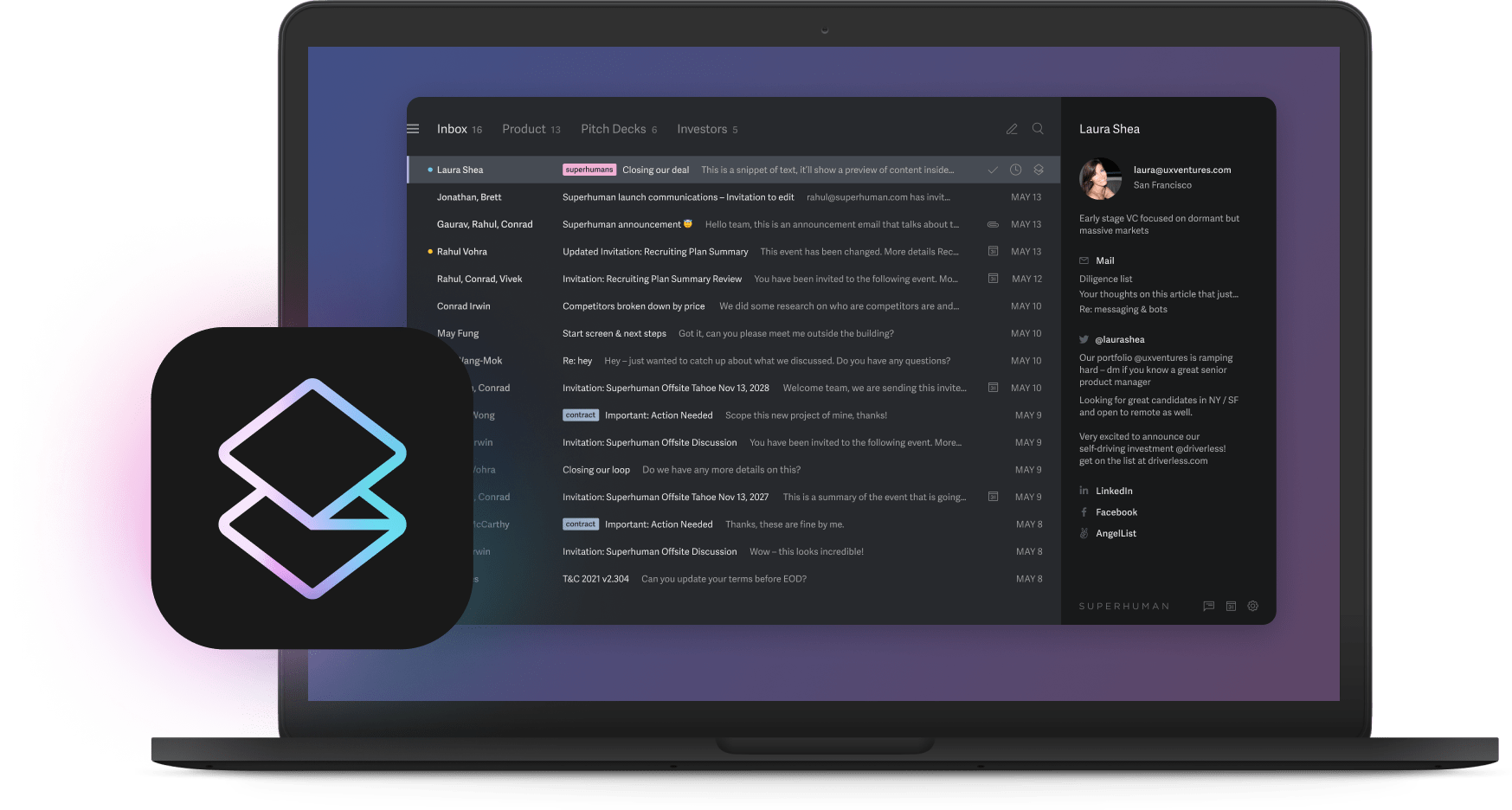

Here's how to narrow this down today. Need strong security and team analytics? Pick Superhuman, then let compliance review data handling.

Want zero-setup speed for a small team? Gmail Smart Reply or SiteGPT gets you moving in minutes.

Care more about high-volume outreach than conversation intelligence? Mailmeteor's templates serve you well.

Tight budget but want tone flexibility? Start with AIFreeBox and see if missing analytics hurts coaching.

Take your top two into detailed testing. A focused trial proves real-world time savings before you spend money and political capital.

Deep-dive testing process

Give yourself one week to prove which AI tool will actually lighten your inbox. No guessing, just data. Set up a sandbox with representative email accounts so you can experiment without risking real customer conversations.

Split people into two equal groups. The control group keeps working the old way. The test group adds your shortlisted AI solution. Match team roles, inbox volume, and email types so comparisons stay fair.
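One common way to keep the groups comparable is matched-pair assignment. Within each role, sort people by email volume, pair neighbors, and flip a coin for each pair. The sketch below assumes you have each person's role and weekly volume. The `split_matched` function and the sample names are hypothetical, and matching on email type is left out for brevity.

```python
import random
from itertools import groupby


def split_matched(people, seed=0):
    """Split (name, role, weekly_volume) tuples into control and test groups.

    People with the same role are sorted by email volume and paired with their
    nearest neighbor; a coin flip decides which member of each pair gets the AI tool.
    """
    rng = random.Random(seed)
    control, test = [], []
    ordered = sorted(people, key=lambda p: (p[1], p[2]))  # groupby needs sorted input
    for _role, group in groupby(ordered, key=lambda p: p[1]):
        members = list(group)
        for i in range(0, len(members) - 1, 2):
            pair = [members[i], members[i + 1]]
            rng.shuffle(pair)
            control.append(pair[0])
            test.append(pair[1])
        if len(members) % 2:
            # An unpaired person goes to whichever group is currently smaller.
            (control if len(control) <= len(test) else test).append(members[-1])
    return control, test


people = [
    ("Ana", "sales", 120), ("Ben", "sales", 95), ("Cy", "sales", 140),
    ("Dee", "sales", 80), ("Eli", "support", 200), ("Fay", "support", 210),
]
control, test = split_matched(people)
```

Fixing the seed makes the split reproducible, so anyone can re-run it and get the same groups.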

Define your measurements before sending anything. Focus on numbers that show real productivity changes, not vanity metrics.

- Average reply time

- Lead response rate

- Time saved per team member

- Customer satisfaction or internal NPS

- Accuracy score for AI-generated drafts

- Adoption rate after day three
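Two of these metrics reduce to simple arithmetic. The sketch below shows one way to compute them. It assumes "time saved" means replies per week times the minutes saved per reply, and "adoption after day three" means the share of testers still using the tool after day 3. Both functions and all numbers are illustrative placeholders.

```python
def weekly_hours_saved(replies_per_week: int, minutes_before: float, minutes_after: float) -> float:
    """Hours saved per person per week if AI drafts shorten the time spent on each reply."""
    return replies_per_week * (minutes_before - minutes_after) / 60


def adoption_rate(last_active_day: dict[str, int], after_day: int = 3) -> float:
    """Share of test-group members still using the tool after the given trial day."""
    if not last_active_day:
        return 0.0
    still_active = sum(1 for day in last_active_day.values() if day > after_day)
    return still_active / len(last_active_day)


# Placeholder numbers: 60 replies a week, 8 minutes each before, 4 minutes with drafts.
print(weekly_hours_saved(60, 8, 4))  # 4.0, exactly the 4-hour bar from the checklist
print(adoption_rate({"Ana": 7, "Ben": 2, "Cy": 5, "Dee": 6}))  # 0.75
```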

Set up simple tracking. A spreadsheet or dashboard should capture date, inbox label, sender type, draft quality rating, and whether replies need editing. Color-code wins and misses so patterns jump out.
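If the tracking log lives in code or is exported from a spreadsheet, a per-label summary surfaces the same patterns that color-coding does. This is a minimal sketch. It assumes quality is rated on a 1 to 5 scale, and the `DraftLog` record, the `summarize` function, and the sample entries are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass
class DraftLog:
    """One tracked AI draft, mirroring the spreadsheet columns."""
    day: date
    inbox_label: str
    sender_type: str
    quality: int        # reviewer rating, assumed to be on a 1-5 scale
    needed_edit: bool


def summarize(logs: list[DraftLog]) -> dict[str, dict[str, float]]:
    """Per inbox label: draft count, average quality, and share of drafts that needed edits."""
    by_label: dict[str, list[DraftLog]] = defaultdict(list)
    for entry in logs:
        by_label[entry.inbox_label].append(entry)
    summary = {}
    for label, entries in by_label.items():
        n = len(entries)
        summary[label] = {
            "drafts": n,
            "avg_quality": sum(e.quality for e in entries) / n,
            "edit_rate": sum(e.needed_edit for e in entries) / n,  # True counts as 1
        }
    return summary


logs = [
    DraftLog(date(2024, 5, 6), "sales", "prospect", quality=4, needed_edit=False),
    DraftLog(date(2024, 5, 6), "sales", "customer", quality=2, needed_edit=True),
    DraftLog(date(2024, 5, 7), "support", "customer", quality=5, needed_edit=False),
]
print(summarize(logs))
```

A label with a high edit rate and low average quality is where the tool is missing your voice or context.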

Day-by-day checklist:

- Day 0: Snapshot current metrics

- Day 1: Onboard test group, clarify what AI should draft and what stays human-only

- Days 2–6: Run realistic scenarios such as sales follow-ups, customer escalations, and internal updates. Flag any off-brand tone or security concerns immediately

- Day 7: Pull data, compare averages, hold a quick review with both groups

Include voices from sales, support, operations, and security. Diverse testers catch blind spots faster and help gauge company-wide adoption.

Score each platform against your success criteria. Did it cut average reply time by at least 50%? Did people feel their inbox got lighter? Rank the contenders, pick the winner, and move straight into a broader rollout.
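The 50% check compares the test group's average reply time with the control group's. Here is one way to compute that cut and rank the tools that clear the bar. The function names, the tool labels, and the reply times (in hours) are hypothetical placeholders.

```python
from statistics import mean


def reply_time_cut(control_hours: list[float], test_hours: list[float]) -> float:
    """Fractional reduction in average reply time, test group vs. control group."""
    control_avg = mean(control_hours)  # assumes a non-empty, non-zero control average
    return (control_avg - mean(test_hours)) / control_avg


def rank_platforms(results: dict[str, tuple[list[float], list[float]]],
                   min_cut: float = 0.5) -> list[tuple[str, float]]:
    """Return (platform, cut) pairs that meet min_cut, best first."""
    cuts = {name: reply_time_cut(control, test) for name, (control, test) in results.items()}
    passing = [(name, cut) for name, cut in cuts.items() if cut >= min_cut]
    return sorted(passing, key=lambda pair: pair[1], reverse=True)


results = {
    "Tool A": ([8, 6, 10], [3, 4, 5]),
    "Tool B": ([8, 6, 10], [2, 2, 2]),
    "Tool C": ([8, 6, 10], [6, 6, 6]),
}
print(rank_platforms(results))  # [('Tool B', 0.75), ('Tool A', 0.5)]
```

Tool C cuts reply time by only 25%, so it drops out. The qualitative question, whether people felt their inbox got lighter, still belongs in the review meeting.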

Numbers tell the truth better than any demo.

Final decision

The right AI email solution changes how teams think about communication. Quick, consistent replies make customers feel heard. Manageable inboxes give people time for valuable work. When AI handles routine responses well, humans focus on conversations needing judgment.

Choose wisely, implement thoughtfully, and watch what happens when your team stops fighting their inbox and starts flying through it.