Your competitors ship AI features while you're stuck in planning meetings. They go from idea to customer deployment in three weeks. You've been working on the same pilot for six months.

The disconnect between AI investment and actual deployment is staggering. While companies pour billions into AI initiatives, most employees never touch these tools in their daily work. This 30-day framework changes that equation entirely.

A month is all you need

Four weeks from today, you can have AI solving real problems for real customers. Functional software that people use, not another PowerPoint deck about future possibilities.

The timeline is simple. In week one, identify one workflow that wastes time and assign someone to fix it. In week two, build a basic version using whatever data you can access today. In week three, give it to actual users and watch what happens. In week four, review the results and decide whether to expand the project or abandon it.

Most companies fail because they overcomplicate everything. They want perfect data before starting. They need unanimous buy-in from every stakeholder. They plan for six months before writing any code. Meanwhile, smaller competitors ship three versions and capture market share.

Eighty-five percent of CEOs expect AI to transform their industry, yet only 20% have concrete plans to make it happen. That gap exists because executives confuse talking about AI with actually building AI. The companies winning this race focus on shipping code, not shipping presentations.

Week 1 means picking one problem

Monday morning starts with pain identification. Document the workflows that consume the most time across your organization, and record their processing time, error rates, and manual interventions. Pick the one with the highest cost to your business.

Tuesday requires choosing an owner. One person who succeeds if this works and fails if it doesn't. Give them budget authority. Let them make decisions without committee approval.

Wednesday focuses on defining success. Pick a metric everyone understands. Cut processing time by 60%. Reduce errors by half. Handle twice the volume with the same team. Make it specific enough to measure in two weeks.
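A target like "cut processing time by 60%" reduces to simple arithmetic once you have a baseline. The sketch below is a minimal illustration, assuming you measure the same workflow before and during the pilot; the function names and the sample numbers are hypothetical.

```python
def percent_reduction(baseline: float, current: float) -> float:
    """Return how far `current` fell relative to `baseline`, as a percentage."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    # Multiply before dividing to keep whole-number inputs exact.
    return (baseline - current) * 100 / baseline


def meets_target(baseline: float, current: float, target_pct: float) -> bool:
    """True if the measured reduction reaches the target (e.g. 60 for 60%)."""
    return percent_reduction(baseline, current) >= target_pct


# Hypothetical numbers: a task took 50 minutes before the pilot, 18 minutes after.
print(percent_reduction(50, 18))  # 64.0
print(meets_target(50, 18, 60))   # True
```

The same check works for error counts; "handle twice the volume" is the mirror image, where you compare the new throughput against double the baseline instead.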

Thursday means facing reality about your data. Stop waiting for perfect information that will never exist. Audit what you have right now. Transaction logs, customer records, operational metrics. That existing data is your starting point.

Friday is for clearing roadblocks. Give security and legal exactly 24 hours to identify specific, actionable concerns. If they can't articulate real risks before that window closes, you proceed.

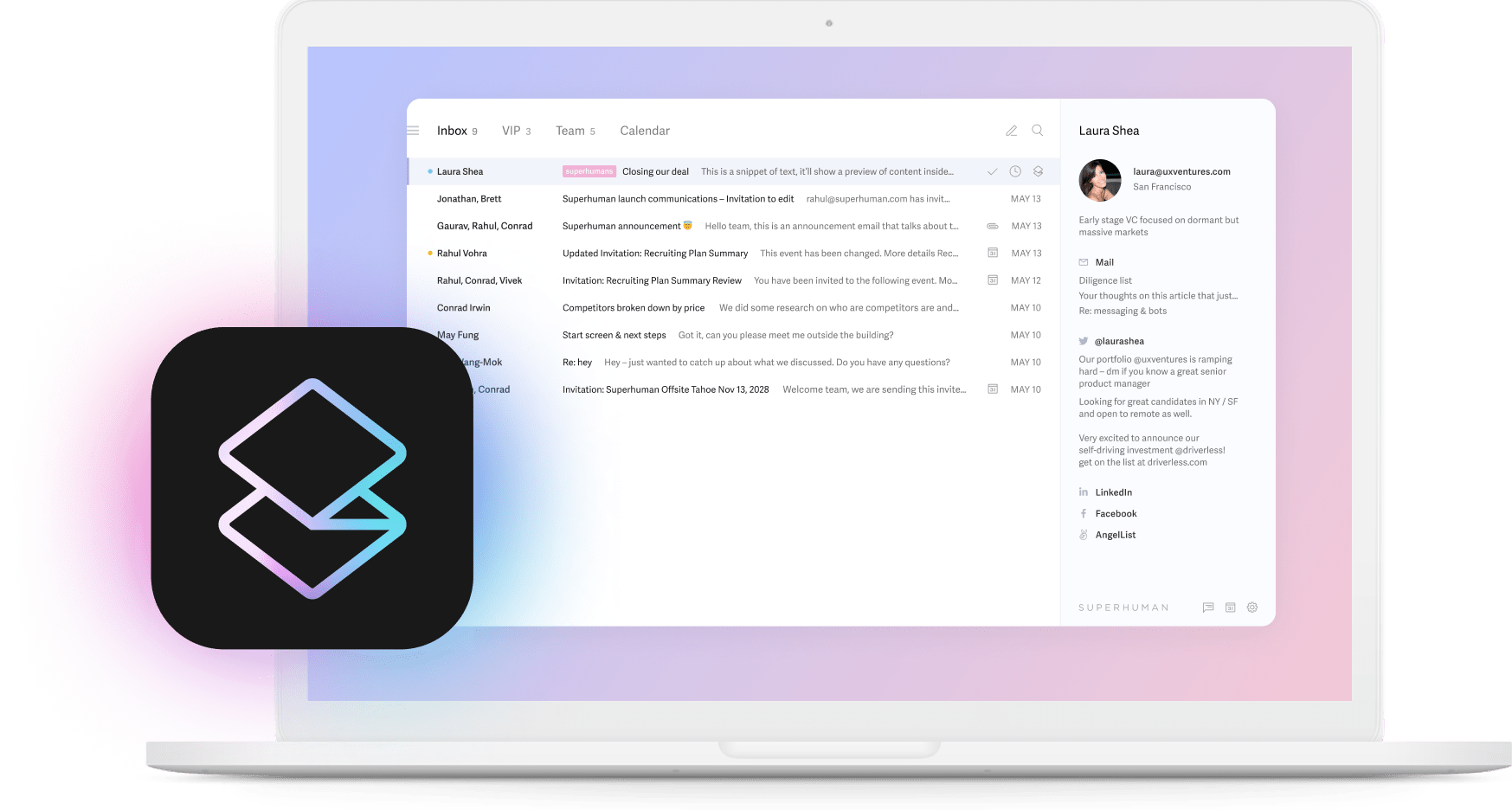

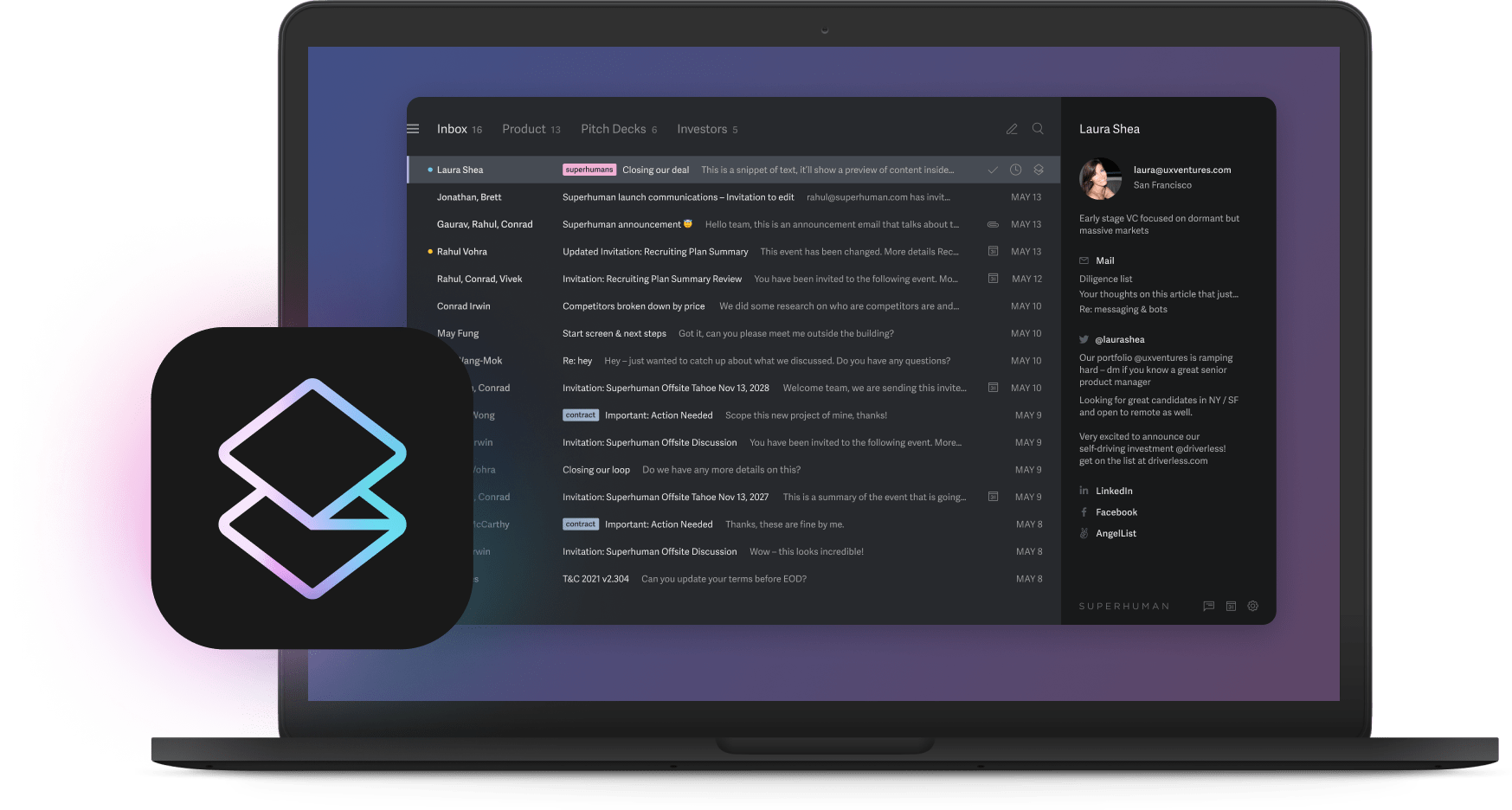

Superhuman's approach to AI email features demonstrates this speed. They went from concept to customer deployment in days. Their users now save significant time with AI features. That rapid deployment and feedback cycle shaped their entire product strategy.

Weeks 2 and 3 separate winners from wishful thinking

By day seven of this two-week stretch, you know whether your prototype actually helps anyone. Users either adopt it immediately or ignore it completely. The data shows improvement or it doesn't.

Day ten demands a decision. Good metrics mean you increase investment. Bad metrics mean you stop immediately. Resources are finite. Time is finite. Spending both on failing projects destroys companies.

Day fourteen determines direction. Strong projects get more resources and expanded scope. Weak projects get documented lessons and quick termination. Then you pick the next problem and start the cycle again.

Focus on three measurements that actually matter. First, adoption rate tells you whether people choose to use your solution when they have alternatives. Second, time saved per user shows genuine productivity improvement. Third, error rate indicates whether AI helps quality or hurts it.
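The three measurements can come straight from per-user pilot records. This is a minimal sketch, assuming you can log, for each user, whether they chose the AI tool, their time on the workflow before and during the pilot, and their task and error counts; the `PilotUser` fields and the sample data are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PilotUser:
    """One user's pilot numbers. Field names are hypothetical."""
    chose_ai_tool: bool       # did they pick the AI tool over the old workflow?
    baseline_minutes: float   # weekly time on the workflow before the pilot
    pilot_minutes: float      # weekly time on the same workload during the pilot
    tasks: int                # tasks completed during the pilot
    errors: int               # tasks that needed correction


def pilot_metrics(users: list[PilotUser]) -> dict[str, float]:
    """Compute adoption rate, minutes saved per adopting user, and error rate."""
    adopters = [u for u in users if u.chose_ai_tool]
    adoption_rate = len(adopters) / len(users)
    # Time saved and errors are measured only for people who actually used the tool.
    minutes_saved = (
        sum(u.baseline_minutes - u.pilot_minutes for u in adopters) / len(adopters)
        if adopters
        else 0.0
    )
    adopter_tasks = sum(u.tasks for u in adopters)
    error_rate = sum(u.errors for u in adopters) / adopter_tasks if adopter_tasks else 0.0
    return {
        "adoption_rate": adoption_rate,
        "minutes_saved_per_user": minutes_saved,
        "error_rate": error_rate,
    }


# Hypothetical pilot data for four users.
sample = [
    PilotUser(True, 300, 180, 40, 2),
    PilotUser(True, 240, 150, 30, 1),
    PilotUser(False, 260, 260, 35, 3),
    PilotUser(True, 200, 140, 30, 3),
]
print(pilot_metrics(sample))
# {'adoption_rate': 0.75, 'minutes_saved_per_user': 90.0, 'error_rate': 0.06}
```

The error rate only tells you whether AI helps or hurts quality when you set it against the error rate for the same workflow before the pilot.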

Test everything properly. Randomly assign half your users to the AI solution while the other half continues working manually. After 48 hours, compare their results. Which group accomplished more? Which group made fewer mistakes? Which group wants to continue? Controlled experiments beat opinion surveys.
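Random assignment is what makes the split a controlled experiment: it keeps you from handing the AI tool to your most eager or most skilled people. The sketch below shows one way to do the split and the basic comparison, assuming you track a single output number per person (here, tasks completed); the function names and sample figures are hypothetical.

```python
import random
from statistics import fmean


def split_users(user_ids: list[str], seed: int = 0) -> tuple[list[str], list[str]]:
    """Randomly assign users to an AI group and a manual (control) group."""
    shuffled = list(user_ids)
    random.Random(seed).shuffle(shuffled)  # seeded so the assignment is reproducible
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def mean_difference(ai_results: list[float], manual_results: list[float]) -> float:
    """AI-group average minus manual-group average (positive favors the AI group)."""
    return fmean(ai_results) - fmean(manual_results)


ai_group, manual_group = split_users([f"user{i}" for i in range(10)], seed=42)
print(len(ai_group), len(manual_group))  # 5 5

# Hypothetical tasks completed per person over the 48-hour test.
print(mean_difference([12, 15, 11, 14, 13], [10, 9, 11, 10, 10]))  # 3.0
```

With only 48 hours and a handful of users, a gap in averages can be noise. Before acting on it, check that it is larger than ordinary day-to-day variation, for example with a two-sample t-test.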

Forty-six percent of leaders blame skill gaps for slow AI adoption, but that gets the cause backwards. When tools genuinely help, people learn them eagerly. When tools waste time, people avoid them regardless of training. Build something useful first, then watch adoption follow naturally.

Week 4 decides the future

Day 22 reveals truth without ambiguity. Your metrics improved significantly or they didn't. Users love the tool or they don't. The business case exists or it doesn't.

Successful projects need immediate infrastructure. Write a simple guide that gets new users productive in ten minutes. Record a short video showing the actual workflow. Set up basic support channels. Lock in pricing with vendors while you have leverage.

Force executives to use the tool themselves. Block two hours on their calendar. Make them process real work, not demos. Let them experience the time savings personally. When leaders understand tools through experience rather than presentations, they become advocates instead of skeptics.

Share wins immediately and publicly. Post metrics in company channels. Demonstrate the tool at team meetings. Name the people who made it work. Visible victories create momentum. Hidden successes create nothing.

Organizations that build strong AI cultures see faster adoption across all teams. Culture means accepting that most experiments fail. It means rewarding speed over perfection. It means trusting teams to try things that might not work. Create that environment and watch innovation accelerate.

Smart budgets prevent stupid disasters

Your spending should match your learning, not your ambition. Week one gets $10K to test feasibility. If that works, weeks two and three get $50K to build something real. Only proven success unlocks $200K in week four for scaling.
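The spending rule is a chain of gates: each tranche is released only if the previous stage proved value. The sketch below encodes it with the dollar figures from this section; the stage labels and the idea of recording each stage as a simple pass/fail are assumptions made for illustration.

```python
# Stage-gated budget: amounts from the plan; labels are illustrative.
STAGES = [
    ("feasibility (week 1)", 10_000),
    ("build (weeks 2-3)", 50_000),
    ("scale (week 4)", 200_000),
]


def approved_budget(gates_passed: list[bool]) -> int:
    """Total spend authorized, given pass/fail results of each completed stage.

    The first stage is always funded; every later stage is funded only if
    the stage before it passed. Funding stops at the first failure.
    """
    total = STAGES[0][1]
    # Pair each later stage with the result of the stage that precedes it.
    for (_, amount), passed in zip(STAGES[1:], gates_passed):
        if not passed:
            break
        total += amount
    return total


print(approved_budget([]))            # 10000  (nothing proven yet)
print(approved_budget([False]))       # 10000  (feasibility failed)
print(approved_budget([True]))        # 60000  (build funded)
print(approved_budget([True, True]))  # 260000 (scaling funded)
```

The arithmetic makes the downside explicit: a concept that fails feasibility costs $10K, one that fails after the build costs $60K, and the full $260K is committed only after two stages have shown results.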

This structure means you can't lose big money on unproven concepts, and you can't throw good money after bad results. Value earns investment. Lack of value stops spending.

Choose vendors through action, not presentations. Monday, list three potential partners. Tuesday, watch them demonstrate their solutions. Wednesday, have them build something with your actual data. Thursday, let real users test what they built. Friday, choose based on results or reject them all.
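Friday's decision, "choose based on results or reject them all," amounts to a best-score pick with a minimum bar. A minimal sketch, assuming Thursday's user test produces one comparable score per vendor (for instance an average user rating); the scoring scale, threshold, and vendor names are hypothetical.

```python
from typing import Optional


def pick_vendor(test_scores: dict[str, float], minimum: float) -> Optional[str]:
    """Return the vendor with the best user-test score, or None to reject them all.

    `test_scores` maps vendor name to a result from Thursday's user test;
    `minimum` is the bar a vendor must clear to be chosen at all.
    """
    qualified = {name: score for name, score in test_scores.items() if score >= minimum}
    if not qualified:
        return None  # nobody cleared the bar: reject them all
    return max(qualified, key=qualified.get)


print(pick_vendor({"Vendor A": 7.5, "Vendor B": 8.2, "Vendor C": 6.0}, minimum=7.0))  # Vendor B
print(pick_vendor({"Vendor A": 5.5, "Vendor B": 6.1}, minimum=7.0))  # None
```

Setting the minimum before the demos start keeps the decision about results rather than about which pitch felt most convincing.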

Any vendor who needs three months to show value will never deliver value. Any vendor who can't handle messy data can't handle reality. Any vendor who talks more than they build will disappoint when it matters.

Run security reviews simultaneously, not sequentially. While vendors build prototypes, security teams audit practices. Understand where data goes, how encryption works, who owns outputs, and what deletion rights exist. Get these answers before contracts, not after.

Your internal teams need vendor expertise, and vendors need your business knowledge. Together they move faster than either could separately. This partnership model closes skill gaps without lengthy hiring processes. Smart selection focuses on results and cultural fit equally.

Measuring success and maintaining momentum

Success metrics evolve as projects mature. Day one establishes baselines. Day seven indicates direction. Day 30 validates concepts. By day 90, expect multiple successful implementations.

Create dashboards that executives actually understand. Show adoption rates, time savings, error reductions, and cost improvements. Avoid technical metrics that obscure business impact. Simple numbers tell better stories than complex analytics.

Speed becomes your competitive advantage. While competitors debate approaches, you're testing them. While they plan pilots, you're scaling successes. While they study markets, you're serving customers. Three months from now, you'll have three working implementations while they're still drafting project charters.

The Superhuman State of Productivity & AI Report shows top-performing companies are 3x more likely to report significant productivity gains from AI. These aren't projections. They're measured results from actual usage across thousands of users.

Month two brings three new experiments based on what month one taught you. Month three scales the winners and terminates the losers. Month four sees AI embedded in standard operations rather than special projects. The cycle continues indefinitely, with each iteration moving faster than the last.

Your board funds results, not potential. Show them completed sprints with real metrics. Demonstrate actual value delivered to actual customers. Present data from users whose work improved. Executed plans beat elaborate strategies. Shipped code beats perfect architecture.

This approach works because it acknowledges reality. Most initiatives fail, so small bets limit damage. Fast cycles accelerate learning. Winners emerge through experimentation, not planning. Companies running ten experiments find two breakthroughs. Companies planning one perfect project find zero.

Start this Monday. Choose one painful workflow. Assign one accountable owner. Define one measurable outcome. Build something basic this week. Deploy to users next week. Measure results the following week. Expand successes or eliminate failures based on data. Repeat this cycle monthly.

The path forward requires no special knowledge or unique resources. Pick problems that matter. Build solutions quickly. Test with real users. Scale what works. Kill what doesn't. Learn from everything. Move faster than your competition. That's how you win with AI while others are still planning to start.