Your inbox overflows with unread messages after every customer meeting. Critical deals slip through the cracks while you chase down information buried in endless email threads. Meanwhile, board members pressure you to adopt AI initiatives that feel like expensive experiments with unclear returns.

That skepticism about AI strategy is not a defect but a sign of intelligence. Rushed AI initiatives burn through budgets, create workflow chaos, and often get abandoned within months. Smart leaders use doubt as a strategic advantage, demanding clear ROI and measurable results before committing resources.

What follows is a contrarian framework built specifically for skeptical executives. We'll show you how to turn boardroom doubt into measurable wins through a practical five-step approach that prioritizes containment, fast ROI, and strategic governance. Each phase demonstrates how methodical skepticism leads to AI initiatives that actually deliver bottom-line results.

The skeptic's framework in 60 seconds

Here's the reality: when you start from healthy skepticism, you force every AI idea to clear a high bar for value. That mindset creates a five-step path that turns doubt into dollars.

- Contain keeps new models in a controlled environment where any failures stay internal.

- ROI Fast targets boring automations with 90-day payback periods.

- Augment focuses on making great people greater rather than replacing talent.

- Govern adds audit trails, bias testing, and kill switches so nothing escapes oversight.

- Scale promotes only the survivors that post at least 3x returns with sub-2% error rates.

Structure matters as much as process. Distributed AI leadership across functions often outperforms a single Chief AI Officer model, avoiding organizational bottlenecks and speeding up decisions. Many professionals already report saving at least one full workday weekly with AI tools, yet most AI adoption remains surface-level, which leaves an opening for competitors who approach the technology systematically. This cautious approach lets skeptical leaders capture real value while others chase headlines.

Step 1: Contain the blast with low-risk internal pilots

Apply containment principles to your first AI pilots by starting with internal tasks that drain hours but stay invisible to customers. Invoice processing, email triage, demand forecasting adjustments, and document summarization make ideal targets because these projects touch company data yet sit far from your brand's public face. Any misfires stay contained within your organization rather than creating public embarrassment or customer churn.

Next, establish a cross-functional AI Center of Excellence that brings together Operations to define workflows, Finance to track spending, Data teams to validate inputs, and Legal to flag compliance issues. With these voices at one table, pilots stay aligned with policy and profit objectives and avoid the silos that doom many corporate initiatives.

Before writing any code, run every idea through a 90-day readiness checklist:

- Define one success metric that all leaders agree on

- Use sandbox data only, with no connections to production systems

- Build a kill switch that can shut the system down within minutes

- Schedule the post-mortem on day one rather than on day ninety-one

This systematic approach keeps every pilot contained and measurable while giving it clear learning objectives.
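The readiness checklist can be expressed as a simple go/no-go gate. The Python sketch below is illustrative only: `PilotProposal`, its fields, and the 15-minute ceiling used to interpret "within minutes" are hypothetical choices, not part of any particular tool.

```python
from dataclasses import dataclass

# Assumed interpretation of "shuts down the system within minutes".
MAX_KILL_SWITCH_MINUTES = 15


@dataclass
class PilotProposal:
    """Hypothetical record describing a proposed internal AI pilot."""
    name: str
    success_metric: str            # the one metric leaders signed off on
    metric_agreed_by_all: bool
    sandbox_data_only: bool
    connects_to_production: bool
    kill_switch_minutes: float     # measured time to shut the system down
    postmortem_booked_on_day: int  # project day the post-mortem was scheduled


def readiness_gaps(p: PilotProposal) -> list[str]:
    """Return every checklist item the proposal fails; an empty list means go."""
    gaps = []
    if not p.success_metric or not p.metric_agreed_by_all:
        gaps.append("no single agreed success metric")
    if not p.sandbox_data_only or p.connects_to_production:
        gaps.append("not isolated to sandbox data")
    if p.kill_switch_minutes > MAX_KILL_SWITCH_MINUTES:
        gaps.append("kill switch too slow")
    if p.postmortem_booked_on_day > 1:
        gaps.append("post-mortem not scheduled on day one")
    return gaps


invoice_pilot = PilotProposal(
    name="invoice triage",
    success_metric="hours of manual review saved per week",
    metric_agreed_by_all=True,
    sandbox_data_only=True,
    connects_to_production=False,
    kill_switch_minutes=5,
    postmortem_booked_on_day=1,
)
print(readiness_gaps(invoice_pilot))  # []
```

Returning the full list of gaps, rather than stopping at the first failure, gives the Center of Excellence one complete punch list per proposal.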

Stick to this checklist religiously. Flashy customer-facing chatbots tempt executives who want headlines, but they also invite public relations disasters when bias or hallucinations slip through. Keep projects internal until the technology proves reliable, especially since organizations consistently struggle to hire qualified technical talent, which makes mistakes expensive and recovery slow. Staying small, measurable, and reversible lets you learn without burning goodwill or budget.

Step 2: Prove ROI fast with boring AI

The fastest way to calm AI skepticism is to target dull, repeatable tasks, automate them completely, and show the savings in dollars rather than slides. Simple automations often outperform grand AI platforms because they plug directly into existing workflows, require minimal data preparation, and surface value within weeks. Yet many executives hesitate and cite unclear ROI as their main concern, and when payback calculations feel fuzzy, budgets stall.

Consider invoice matching: companies implementing accounts payable (AP) automation have achieved substantial annual savings through faster processing and fewer payment delays. Calculate ROI with a straightforward formula: (annual savings minus pilot implementation cost) divided by pilot cost, where annual savings is the current process's annual cost minus its annual cost after automation. Present this on a single slide showing current spend, pilot investment, and a bright line representing the delta. If that line doesn't shoot upward within 90 days, terminate the project and move resources to the next experiment.
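Here is a minimal Python sketch of the ROI arithmetic and the 90-day payback check. It assumes annual savings equal the current process's annual cost minus its annual cost after automation (when automation eliminates the process cost entirely, that second term is zero). It also assumes savings accrue evenly across the year. The function names and all dollar figures are hypothetical.

```python
def annual_roi(current_annual_cost: float, automated_annual_cost: float,
               pilot_cost: float) -> float:
    """ROI = (annual savings - pilot cost) / pilot cost."""
    if pilot_cost <= 0:
        raise ValueError("pilot_cost must be positive")
    annual_savings = current_annual_cost - automated_annual_cost
    return (annual_savings - pilot_cost) / pilot_cost


def payback_days(current_annual_cost: float, automated_annual_cost: float,
                 pilot_cost: float) -> float:
    """Days until cumulative savings cover the pilot cost (savings assumed evenly spread)."""
    daily_savings = (current_annual_cost - automated_annual_cost) / 365
    if daily_savings <= 0:
        return float("inf")  # the pilot never pays back
    return pilot_cost / daily_savings


def keep_pilot(current_annual_cost: float, automated_annual_cost: float,
               pilot_cost: float, window_days: int = 90) -> bool:
    """The 90-day rule: keep the pilot only if it pays back inside the window."""
    return payback_days(current_annual_cost, automated_annual_cost, pilot_cost) <= window_days


# Hypothetical invoice-matching figures.
print(annual_roi(200_000, 50_000, 30_000))           # 4.0
print(round(payback_days(200_000, 50_000, 30_000)))  # 73
print(keep_pilot(200_000, 50_000, 30_000))           # True
```

In this made-up example, $150,000 of annual savings against a $30,000 pilot gives an ROI of 4.0 and a payback of roughly 73 days, which falls inside the 90-day window.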

Distributed approval processes accelerate this cycle because when Operations validates the pain point, Finance verifies the numbers, and IT confirms the sandbox setup, you avoid bottlenecks that emerge when one leader must approve every experiment. Wider involvement delivers quicker decisions and stronger accountability while preventing the analysis paralysis that kills promising pilots.

Watch for these vendor red flags during evaluation:

- References to "proprietary secret data" without disclosure plans that leave you guessing about model capabilities and limitations

- Long-term contracts with escalating pricing that starts low then spikes after you're locked in and dependent on the platform

- Demands for unpaid proof-of-concept work that essentially provide free consulting while giving vendors insight into your processes

All of these signal cost curves you can't predict or control, which undermines the transparency that skeptical leaders demand.

Remember the core rule: if ROI isn't obvious within 90 days, shut it down. Your skepticism just preserved your budget for the next pilot while proving to the board that doubt functions as a feature, not a flaw. When numbers appear in black and white, conversations shift from hype to hard returns.

Step 3: Augment humans rather than replace them

Start every AI conversation with this principle: augmentation over automation makes great people greater. The goal isn't cutting headcount but freeing talented people from repetitive work so they can focus on judgment, creativity, and relationship building. This approach transforms organizational culture while delivering immediate productivity gains.

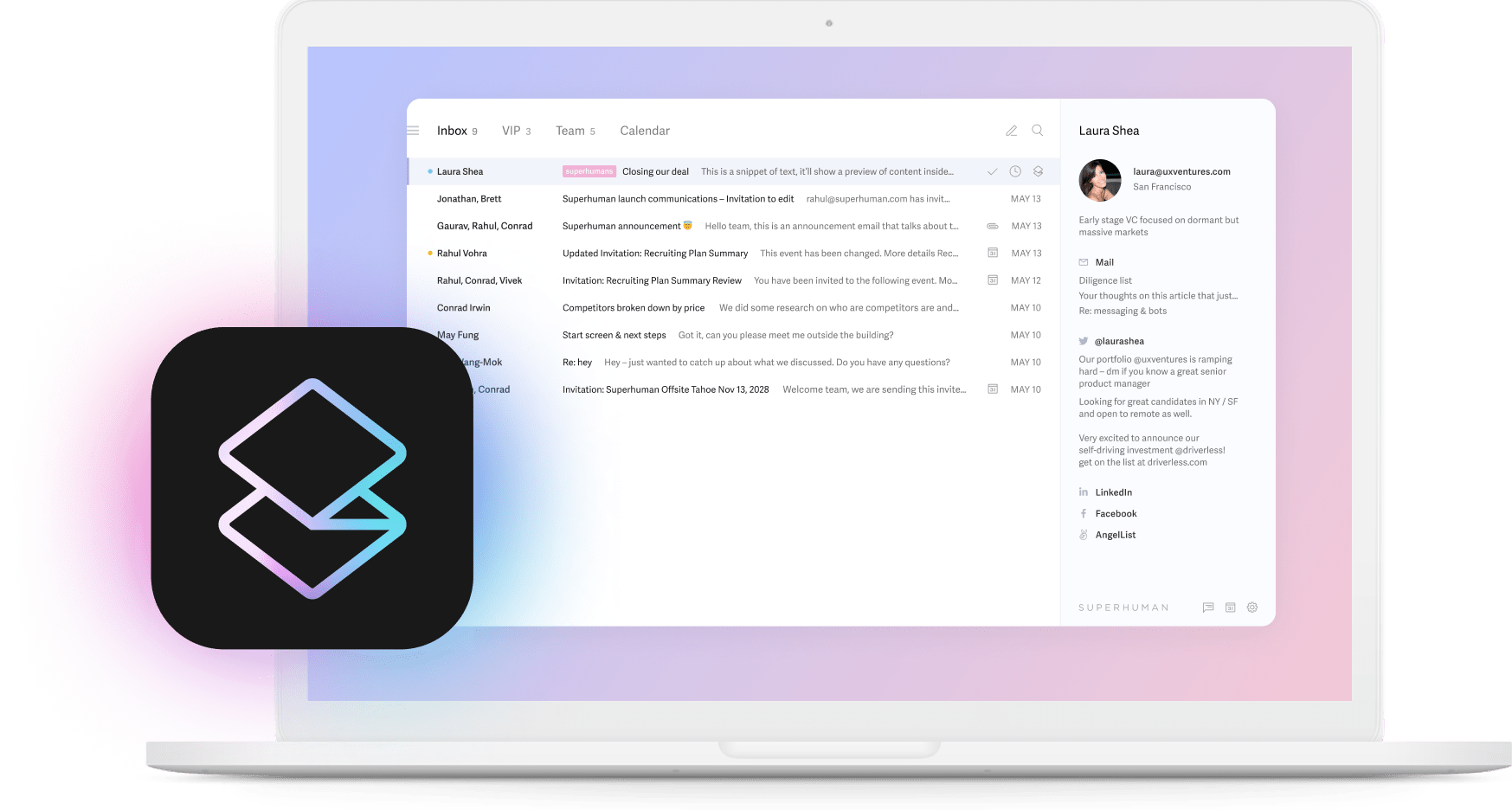

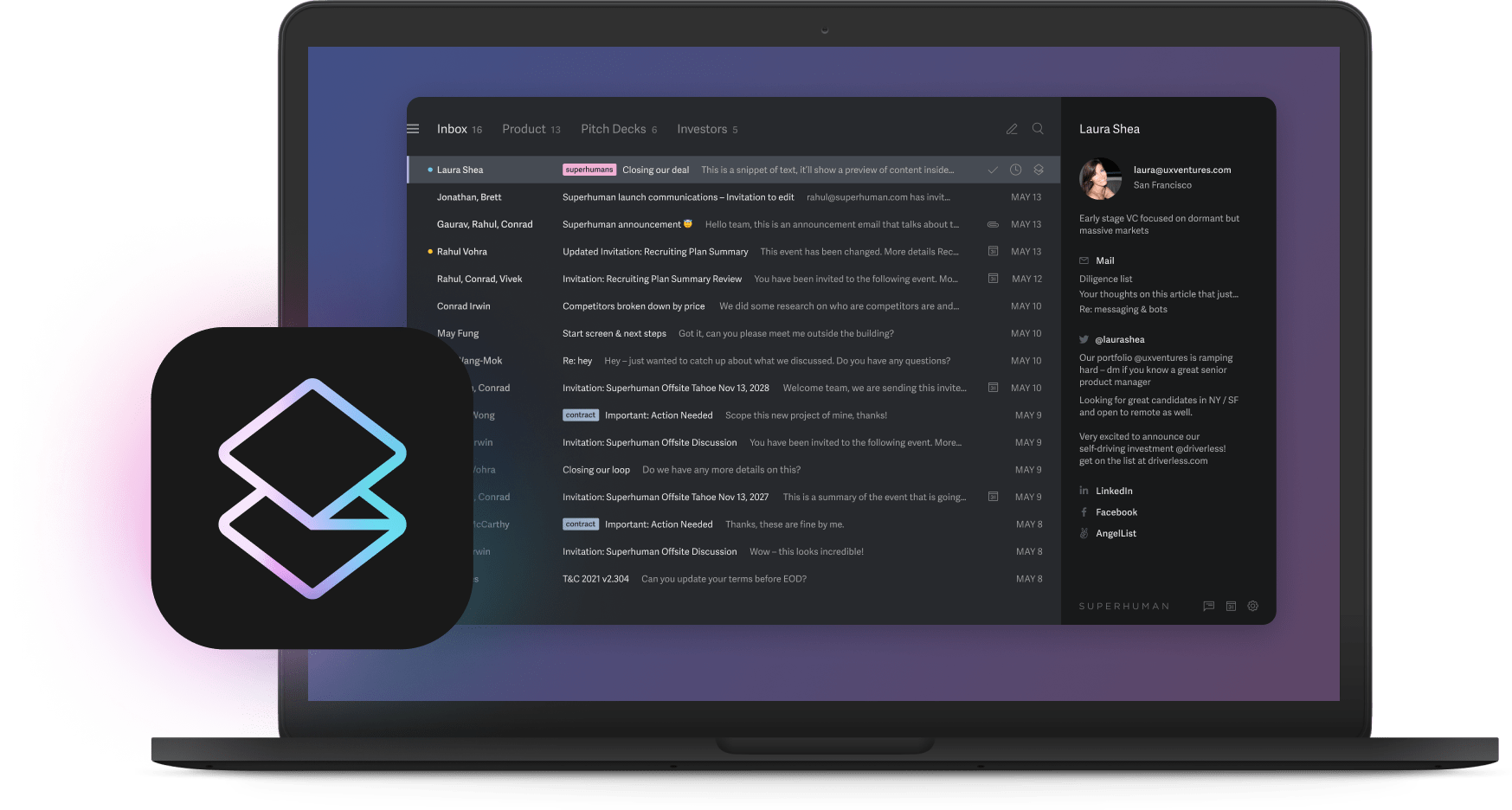

A sales leader with hundreds of unread emails after customer meetings can use Split Inbox to separate urgent client responses from newsletter noise, while Write with AI converts "circle back?" into "Thanks for the great discussion yesterday. I'll have the proposal ready by Thursday and will include the custom pricing we discussed. Let me know if you need anything else before then."

Use a simple matrix to decide where AI fits. Tasks that combine repetition with high judgment, such as email responses, RFP drafts, and customer outreach scripts, belong in the "augment" category: the machine handles the busywork while humans approve nuance and strategy. Purely repetitive, low-risk activities like invoice coding, data entry, and log reconciliation belong in the "automate" category and can run largely unattended, subject to the governance controls in Step 4.
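The matrix reduces to a few yes/no questions per task. In this Python sketch, `place_task` and `Placement` are hypothetical names. Two rules are assumptions rather than the article's own: repetitive tasks that are high-risk (even when low-judgment) go to "augment" so a human still approves them, and non-repetitive work stays human-led.

```python
from enum import Enum


class Placement(Enum):
    AUTOMATE = "automate"      # runs largely unattended
    AUGMENT = "augment"        # AI drafts, a human approves
    HUMAN_LED = "human-led"    # assumed third bucket, not named in the text


def place_task(repetitive: bool, high_judgment: bool, high_risk: bool) -> Placement:
    """Map a task onto the augment/automate matrix."""
    if not repetitive:
        return Placement.HUMAN_LED
    if high_judgment or high_risk:
        return Placement.AUGMENT
    return Placement.AUTOMATE


# Arguments: (repetitive, high_judgment, high_risk)
tasks = {
    "email responses": place_task(True, True, False),
    "RFP drafts": place_task(True, True, False),
    "invoice coding": place_task(True, False, False),
    "data entry": place_task(True, False, False),
}
for task, placement in tasks.items():
    print(f"{task}: {placement.value}")
```

Checking the non-repetitive case first keeps one-off strategic work out of both AI buckets, whatever its risk level.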

Involve entire teams in creating these tools because this approach transforms culture rather than imposing technology from above. Companies have held internal hackathons where employees shaped their own AI workflows, converting skepticism into ownership. This method surfaces candid feedback early, reveals edge cases, and anchors adoption in real pain points rather than theoretical benefits.

Fear of job displacement still creates resistance, so counter it with transparency. Explain that AI will handle the repetitive parts of each role while people focus on the work that drives promotions: strategy development, client insights, and above-quota performance. This clarity matters because leadership resistance often stems from anxiety over shifting power structures rather than from the technology itself. By 2027, most workers will need significant skill upgrades, so pair every augmentation rollout with training, such as micro-learning sessions and peer mentoring, that converts nervous energy into expertise.

Treat augmentation as true partnership where AI handles repetitive keystrokes while people do what only people can: build trust, spot opportunities, and close deals.

Step 4: Build the skeptic's governance framework

Treat governance like a seatbelt for your AI journey because clear structure keeps you safe, proves credibility to the board, and stops hype projects before they spiral into costly failures.

Start with an executive steering group where the CEO owns strategy alignment while finance, operations, and legal leaders meet monthly to review risk and impact metrics. Below that, establish an AI Center of Excellence staffed with data scientists, legal counsel, security experts, and frontline operators who turn strategy into daily guardrails. The third layer consists of a data ethics panel that reviews bias, privacy concerns, and model lineage before any system moves past pilot stage.

Even lean setups need written protocols that require audit logs for every model change, rollback plans tested quarterly, escalation paths naming accountable leaders, and change management leads who report progress to the steering group. Skeptics excel here because asking "What could go wrong?" comes naturally, and this instinct proves valuable since most AI adoption lacks proper oversight frameworks, creating risk for unprepared organizations.
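An audit log for model changes plus a tested rollback can start very small. This Python sketch assumes an in-memory, append-only list, and every name in it (`ModelRegistry`, `deploy`, `rollback`) is hypothetical. A real setup would persist entries to a versioned database or model registry. In this sketch, a rollback restores the version that was active before the most recent change, and the rollback is itself logged as a new entry.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class ModelChange:
    """One immutable audit-log entry."""
    timestamp: datetime
    model_name: str
    version: str
    changed_by: str   # the accountable person, for escalation
    reason: str


@dataclass
class ModelRegistry:
    """Append-only change log; a model's active version is its latest entry."""
    log: list[ModelChange] = field(default_factory=list)

    def deploy(self, model_name: str, version: str, changed_by: str, reason: str) -> ModelChange:
        entry = ModelChange(datetime.now(timezone.utc), model_name, version, changed_by, reason)
        self.log.append(entry)
        return entry

    def active_version(self, model_name: str) -> Optional[str]:
        for entry in reversed(self.log):
            if entry.model_name == model_name:
                return entry.version
        return None

    def rollback(self, model_name: str, changed_by: str, reason: str) -> ModelChange:
        """Restore the version active before the latest change, logging the rollback too."""
        history = [e.version for e in self.log if e.model_name == model_name]
        if len(history) < 2:
            raise RuntimeError(f"no earlier version of {model_name} to roll back to")
        return self.deploy(model_name, history[-2], changed_by, f"ROLLBACK: {reason}")


registry = ModelRegistry()
registry.deploy("invoice-matcher", "1.0", "ops-lead", "initial pilot")
registry.deploy("invoice-matcher", "1.1", "data-team", "retrained on Q3 invoices")
registry.rollback("invoice-matcher", "ops-lead", "error rate spiked")
print(registry.active_version("invoice-matcher"))  # 1.0
```

Because entries are never edited or deleted, the quarterly rollback drill leaves its own trail, and the steering group can see who changed what and why.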

Before signing contracts, interrogate vendors thoroughly on these critical areas:

- Proof of model provenance including training data sources and refresh schedules so you understand exactly what drives decisions

- Bias-testing evidence across real customer segments that demonstrates fairness and accuracy in your specific use cases

- Environmental impact metrics for training and inference that align with corporate sustainability commitments

- Service-level commitments for uptime and latency backed by financial penalties for underperformance

- A human contact who can trigger immediate kill switches when problems emerge rather than routing through support queues

Simple checklists prevent the "safety theater" that followed several high-profile model releases where bold marketing claims masked thin operational controls.

Prioritize transparency through plain-language explanations, feature reports, and opt-out paths for sensitive data processing. Follow this approach and skepticism becomes your competitive advantage because you'll identify weak controls long before they reach production systems, preserve customer trust, and clear the runway for projects that deserve to scale.

Step 5: Scale only what survives the hatred test

Before unleashing any pilot across the company, run the "Hatred Test": if the AI vanished tomorrow, would the business hurt? If not, terminate it immediately. This blunt question prevents shiny-object syndrome and forces proof of lasting value.

Use three internal benchmarks to graduate a system to full scale: at least 3x ROI in measurable dollars saved or earned, a critical error rate below 2% in production, and employee satisfaction scores above +10. These targets may vary by organization, but anything that falls short should return to development, because hard metrics are what satisfy skeptical leaders who demand evidence over enthusiasm.
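The three graduation benchmarks can be checked mechanically. This Python sketch makes three assumptions. First, it reads "3x" as dollars returned divided by dollars spent (a return multiple), which corresponds to a net ROI of 2.0 under the Step 2 formula. Second, it treats the employee score as an eNPS-style number. Third, `PilotResults` and `ready_to_scale` are hypothetical names.

```python
from dataclasses import dataclass

MIN_RETURN_MULTIPLE = 3.0        # "at least 3x", read as dollars returned / dollars spent
MAX_CRITICAL_ERROR_RATE = 0.02   # "fewer than 2%"
MIN_EMPLOYEE_SCORE = 10.0        # "above +10", assumed eNPS-style scale


@dataclass
class PilotResults:
    dollars_returned: float   # measured savings or revenue attributable to the system
    total_cost: float
    critical_errors: int
    transactions: int
    employee_score: float


def ready_to_scale(r: PilotResults) -> tuple[bool, list[str]]:
    """Apply all three graduation benchmarks; return (decision, reasons for failure)."""
    if r.total_cost <= 0 or r.transactions <= 0:
        raise ValueError("cost and transaction count must be positive")
    failures = []
    if r.dollars_returned / r.total_cost < MIN_RETURN_MULTIPLE:
        failures.append("return below 3x")
    if r.critical_errors / r.transactions >= MAX_CRITICAL_ERROR_RATE:
        failures.append("critical error rate at or above 2%")
    if r.employee_score <= MIN_EMPLOYEE_SCORE:
        failures.append("employee score not above +10")
    return (not failures, failures)


print(ready_to_scale(PilotResults(120_000, 30_000, 3, 500, 22)))   # (True, [])
print(ready_to_scale(PilotResults(120_000, 30_000, 15, 500, 22)))  # (False, ['critical error rate at or above 2%'])
```

All three gates are evaluated every time, so a system that fails on several fronts returns to development with the full list of reasons.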

When numbers clear these bars, roll out through four deliberate phases:

- Pilot stage maintains isolated sandboxes with kill switches

- Single department exposure widens the dataset while containing potential issues

- Multi-region deployment stress-tests different workflows and regulatory requirements

- Enterprise integration connects with core systems and customer-facing processes

Companies that maintain rigorous testing standards and gradual rollouts see better adoption rates than those rushing to enterprise deployment.
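The four-phase rollout behaves like a one-way ratchet: a system advances one phase per successful review and never skips ahead. This Python sketch uses hypothetical names. It also assumes that a failed review sends the system back to the pilot sandbox, which is one reading of "return to development".

```python
from enum import IntEnum


class Stage(IntEnum):
    PILOT = 1              # isolated sandbox with kill switch
    SINGLE_DEPARTMENT = 2  # wider data, contained blast radius
    MULTI_REGION = 3       # different workflows and regulators
    ENTERPRISE = 4         # core systems and customer-facing processes


def next_stage(current: Stage, gates_passed: bool) -> Stage:
    """Advance one phase when the gates pass; otherwise return to the pilot sandbox."""
    if not gates_passed:
        return Stage.PILOT
    return Stage(min(current + 1, Stage.ENTERPRISE))


stage = Stage.PILOT
for review_passed in [True, True, False, True]:
    stage = next_stage(stage, review_passed)
    print(stage.name)
```

With these inputs, the system reaches multi-region, fails a review, drops back to the pilot, and then climbs to a single department again. The `gates_passed` flag could come from whatever graduation benchmarks your organization adopts.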

Guard against "CAIO hero syndrome," where one executive tries to manage an entire expansion alone; distributed leadership models often outperform centralized AI ownership. Cross-functional teams catch governance gaps, balance risk appropriately, and spread institutional knowledge across the organization instead of creating single points of failure.

Premature scaling destroys AI projects: skipping intermediate validation leads to runaway costs and compliance surprises that can shut down initiatives permanently. Keep measuring and keep verifying, because systems that pass the hatred test become scalable assets.

Converting distrust into competitive edge

Treat skepticism like a coaching tool that forces every proposal to prove real value over marketing hype. Distributed leadership, business fluency, and balanced technology strategy separate winning programs from costly experiments.

Transform hesitation into momentum through these proven practices:

- Ban presentation demos until sandbox results exist because slides obscure the reality of implementation challenges

- Tie every budget dollar to specific profit-and-loss line items rather than vague productivity promises

- Involve employees in design from project inception to surface real workflow needs and build ownership

- Focus on workflow integration rather than standalone solutions that create more complexity than value

- Hold quarterly ROI reviews with authority to shut down underperformers before they drain resources

- Publish transparent governance metrics alongside technical performance indicators to maintain accountability

Together, these disciplines build organizational confidence in your approach.

Make skepticism social by scheduling monthly "AI Reality Check" sessions where teams critique new ideas against established standards. This routine surfaces blind spots early, reinforces shared expectations, and builds organizational discipline around constructive doubt while creating accountability loops that catch problems before they reach production.

Finance tracks spending against 90-day ROI targets, Legal reviews data usage contracts for compliance gaps, Operations validates that pilots work in real customer scenarios, and Data teams measure model accuracy weekly. Companies that meet clear ROI thresholds, maintain low error rates, and earn positive employee feedback turn caution into market advantage.

For email management specifically, this disciplined approach transforms how teams operate. Features like Auto Summarize let leaders gather context from long conversations within seconds, while Instant Reply provides smart draft suggestions that maintain personal tone and authenticity. Teams using comprehensive email management systems save 4 hours per person every week and handle twice as many emails through features that emerged from methodical development: contained pilots, measured ROI, systematic scaling.

Prerequisites and readiness assessment

Before launching any AI initiative, validate organizational readiness with this assessment: score each element from 0 (missing) to 5 (fully implemented), then add up the total.

Essential readiness components include:

- Clean, documented datasets that won't cause embarrassing failures or compliance violations during pilot testing

- Written process documentation so everyone understands exactly what you're trying to improve and how success gets measured

- Executive sponsorship plus cross-functional teams including representatives from legal, operations, IT, and affected business units

- Budget for multiple pilots because initial attempts frequently require iteration or complete restarts

- Change management strategy so people embrace rather than resist new technology implementations

A total below 15 means pause and address fundamental gaps. A total of 15 to 19 suggests readiness for one carefully contained use case, and 20 to 25 indicates preparation for scaling successful pilots across broader organizational contexts.
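The readiness assessment is a sum over five 0-5 scores, so the maximum is 25. In this Python sketch, the component keys are hypothetical labels for the five prerequisites. A total of exactly 20 is treated as the scaling band.

```python
COMPONENTS = (
    "clean_documented_data",
    "written_process_docs",
    "sponsorship_and_cross_functional_team",
    "budget_for_multiple_pilots",
    "change_management_strategy",
)


def readiness_verdict(scores: dict[str, int]) -> tuple[int, str]:
    """Sum 0-5 scores for the five components and map the total to a verdict."""
    missing = set(COMPONENTS) - set(scores)
    if missing:
        raise ValueError(f"unscored components: {sorted(missing)}")
    for name in COMPONENTS:
        if not 0 <= scores[name] <= 5:
            raise ValueError(f"{name} must be scored 0-5")
    total = sum(scores[name] for name in COMPONENTS)
    if total < 15:
        return total, "pause and address fundamental gaps"
    if total < 20:
        return total, "ready for one carefully contained use case"
    return total, "ready to scale successful pilots"


print(readiness_verdict({
    "clean_documented_data": 4,
    "written_process_docs": 3,
    "sponsorship_and_cross_functional_team": 4,
    "budget_for_multiple_pilots": 3,
    "change_management_strategy": 2,
}))
```

Rejecting unscored or out-of-range components keeps a missing prerequisite from quietly counting as zero.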

Lightweight tools work well at this validation stage: spreadsheets for metric tracking, open-source models running in sandboxed environments, and simple kill-switch scripts cost almost nothing yet surface critical insights quickly. Success depends on connecting every pilot to clear objectives and measurable outcomes so the results are unambiguous.
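A kill switch can be as simple as a flag checked before every AI call. This Python sketch assumes an on-disk flag file, and its names (`with_kill_switch`, `AI_PILOT_DISABLED`, the stand-in functions) are hypothetical. A pilot running across several machines would need a shared flag store instead.

```python
import tempfile
from pathlib import Path
from typing import Callable


def with_kill_switch(flag_file: Path,
                     ai_path: Callable[[str], str],
                     fallback: Callable[[str], str]) -> Callable[[str], str]:
    """Wrap an AI step so the presence of flag_file routes work to the fallback."""
    def handle(item: str) -> str:
        # Checked on every call, so flipping the switch takes effect on the next item.
        if flag_file.exists():
            return fallback(item)
        return ai_path(item)
    return handle


# Hypothetical stand-ins for the AI step and the existing manual process.
def ai_summarize(text: str) -> str:
    return "AI summary: " + text[:20]


def manual_queue(text: str) -> str:
    return "queued for human review"


with tempfile.TemporaryDirectory() as tmp:
    flag = Path(tmp) / "AI_PILOT_DISABLED"
    summarize = with_kill_switch(flag, ai_summarize, manual_queue)
    print(summarize("Quarterly invoice dispute thread"))
    flag.touch()    # the "kill-switch script": one command turns the AI off
    print(summarize("Quarterly invoice dispute thread"))
    flag.unlink()   # and one command turns it back on
```

Routing to the existing manual process, rather than simply failing, means work keeps flowing while the team investigates.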

Superhuman's email solutions started exactly this way by tackling narrow productivity problems, creating tight feedback loops, and delivering immediate measurable benefits. Companies like Brex and Deel now save significant time because the approach focused first on specific pain points, monitored performance closely, then expanded only after proving clear value.

Organizational readiness beats urgency every time. Missing any prerequisite typically means wasted investment, so hold launches until a full assessment shows genuine preparation for success. Skeptical leaders who demand this discipline consistently outperform those who rush into AI initiatives without proper foundation work.

When email becomes your competitive advantage through tools like Split Inbox for intelligent prioritization, Auto Summarize for instant context, and comprehensive workflow automation, skepticism transforms into a strategic edge. The most productive approach to AI adoption starts with the most disciplined framework for testing and validation.