Your data scientists demo something impressive. Everyone nods. Six months later, nothing's changed except your budget is smaller and competitors are further ahead.

Between 70% and 90% of enterprise AI projects die this way. Only 4 out of 33 prototypes ever make money. Every week you wait, someone else takes your market share.

Companies stuck in AI denial follow the same doomed pattern. They pilot forever, chase tiny wins, buy vendor promises, and watch competitors transform their industries.

The denial happens in stages. First, companies ignore AI completely. Then they enter pilot purgatory, running endless experiments that never reach customers. Next comes incrementalism, celebrating dashboard tweaks while revenue stays flat. Vendor seduction follows when executives buy roadmaps instead of results. Few reach real transformation where AI drives actual business outcomes.

You have 90 days to break free. Week 1 reveals what you can actually build versus what you pretend you can. Weeks 2-3 test vendors with your real data. Week 4 picks three tools maximum. Across the full 90 days, one sprint pushes a single use case from concept to customer.

No more demos. No more delays. Real customers, real revenue, or move on.

Quick denial diagnosis

Test yourself. How many AI projects generated revenue this year? When did you last kill a pilot that wasn't working? What percentage of your AI budget goes to production systems versus experiments? Can your sales team explain how even one AI model helps them close deals?

If no AI project generated revenue this year, denial is already costing you market share.

Most companies bounce through predictable failure stages. Some treat AI as conference entertainment that never touches customer workflows. Others run pilots that impress boards but never ship. Many celebrate small wins that competitors copy in weeks.

Successful companies deploy AI differently. They give models defined roles: researchers that find patterns in data, interpreters that explain complex information, thought partners that suggest strategies, simulators that test scenarios, and communicators that engage customers. Every role connects to revenue or cost savings.

Only 4 out of 33 prototypes escape pilot purgatory. You need a different approach.

90-day framework from talk to production

Three months transforms slideware into shipped product. Four phases, no shortcuts.

Week 1 runs an honesty audit. You need 48 hours of unfiltered assessment covering data quality, technical debt, and team readiness. Politics and sugar-coating kill projects before they start.

Weeks 2 and 3 speed date vendors. Each gets 48 hours with your data, then one week in lightweight production. Real numbers reveal real value.

Week 4 forces a three-tool decision. Pick one intelligence layer, one automation layer, and one communication layer. Additional tools create complexity that prevents shipping.

Days 1 through 90 sprint toward one goal. Move a single use case from code to customer, or document why it failed. No extensions allowed.

The deadline prevents analysis paralysis. The infrastructure you build becomes next year's competitive advantage. Ninety days separates talkers from shippers.

Phase 1 honesty audit in Week 1

Clear 48 hours. Cancel everything else.

Pull six months of data tickets and outage reports. Map every dataset your use case needs, documenting who owns what and what's missing. List production systems needing integration. Survey employees anonymously about readiness and fears.

Start with data reality. Document missing fields, shadow spreadsheets, and privacy constraints. Check completeness, bias risks, and data lineage. You'll discover whether your data supports machine learning or whether you're dreaming.
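A completeness check does not need special tooling to get started. The sketch below, in plain Python, counts how often each required field is actually filled in. The function name `field_completeness`, the field names, and the sample records are hypothetical, chosen only for illustration; swap in your own export.

```python
from typing import Any


def field_completeness(rows: list[dict[str, Any]], required_fields: list[str]) -> dict[str, float]:
    """Return the share of rows (0.0 to 1.0) that have a usable value for each field."""
    if not rows:
        return {field: 0.0 for field in required_fields}
    report = {}
    for field in required_fields:
        # Treat absent keys, None, and empty strings as missing.
        filled = sum(1 for row in rows if row.get(field) not in (None, ""))
        report[field] = filled / len(rows)
    return report


# Hypothetical sample records, for illustration only.
sample = [
    {"customer_id": 1, "region": "EMEA", "renewal_date": "2024-03-01"},
    {"customer_id": 2, "region": "", "renewal_date": None},
    {"customer_id": 3, "region": "APAC"},
    {"customer_id": 4, "region": "AMER", "renewal_date": "2024-07-15"},
]

report = field_completeness(sample, ["customer_id", "region", "renewal_date"])
for field, share in report.items():
    print(f"{field}: {share:.0%} complete")
```

Fields that come back well below 100% belong in the audit document, next to the name of whoever owns the fix.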

Examine technical foundations. Review integrations, version control, and API health. Calculate engineering effort before committing resources.

Verify compliance status. Match datasets against industry regulations. Automated scanners catch problems before auditors do.

Political pressure will soften harsh realities. Analysis paralysis will inflate simple fixes into six-month projects. Counter both with anonymous feedback and executives who decide within 24 hours.

Share findings company-wide. Accept written feedback for one day. Lock the document. Start building.

Phase 2 speed dating vendors in Weeks 2 and 3

Two weeks separate vendors who deliver from those who deceive.

Give each vendor 48 hours with your sandbox data. Follow with one week in production behind feature flags. Measure business metrics like revenue lift and time saved. Track technical metrics like integration complexity and maintenance burden.

Score what matters. Hours to working prototype. Systems needing modification. Total cost by day 14.

Require vendors to demonstrate using your data. Anyone claiming predictive analytics must forecast using your historical records. Anyone promising automation must automate your actual workflows. This eliminates vaporware immediately.

Use a simple 14-day trial contract with clear performance thresholds. Improvement below 5% on the agreed business metric means termination. Review on days 2, 7, and 14. Decide on day 15.
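The 5% threshold is simple arithmetic, so script it before the trial starts and nobody can argue about the result on day 15. This is a minimal sketch, assuming a single business metric where higher is better; the function names and the sample readings are hypothetical.

```python
def improvement_pct(baseline: float, observed: float) -> float:
    """Percentage change of the observed metric relative to the baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero to compute a percentage")
    return (observed - baseline) * 100.0 / abs(baseline)


def trial_decision(baseline: float, observed: float, threshold_pct: float = 5.0) -> str:
    """Apply the contract rule: improvement below the threshold means termination."""
    if improvement_pct(baseline, observed) < threshold_pct:
        return "terminate"
    return "continue"


# Hypothetical readings taken on the review days (2, 7, and 14).
baseline = 200.0
readings = {2: 202.0, 7: 208.0, 14: 212.0}

for day, value in readings.items():
    print(f"day {day}: {improvement_pct(baseline, value):+.1f}% vs baseline")

# The day-15 decision uses the final reading.
decision = trial_decision(baseline, readings[14])
print(decision)
```

With these made-up numbers the vendor finishes 6% above baseline and stays. For a metric where lower is better, such as handling time, flip the sign before comparing.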

Beautiful demos that avoid hard questions signal problems. Mysterious metrics hide poor performance. Roadmaps promising everything next quarter mean nothing today. Judge only live results with your data.

Phase 3 three-tool reality in Week 4

Three tools maximum. Tool sprawl kills momentum.

Structure your stack in layers. The intelligence layer creates predictions for pricing or forecasting. The automation layer executes decisions through robots or workflow scripts. The communication layer translates outputs for human understanding.

Each layer handles one job with clear handoffs. Evaluate each tool through three filters. Does it connect easily to existing systems? Will it pay for itself within 90 days? Can you integrate it without massive custom development?
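The 90-day payback filter comes down to one division. This is a rough sketch, assuming flat monthly costs and benefits and a 30-day month; every name and figure below is a hypothetical placeholder, not a benchmark.

```python
import math


def payback_days(upfront_cost: float, monthly_cost: float, monthly_benefit: float) -> float:
    """Days until net benefit covers the upfront cost; infinity if it never does."""
    net_per_day = (monthly_benefit - monthly_cost) / 30.0
    if net_per_day <= 0:
        return math.inf
    return upfront_cost / net_per_day


def pays_back_within(days: float, limit_days: int = 90) -> bool:
    """True when the tool recovers its cost inside the limit."""
    return days <= limit_days


# Hypothetical tool: 12,000 to integrate, 2,000 a month to run, 8,000 a month in savings.
days = payback_days(upfront_cost=12_000, monthly_cost=2_000, monthly_benefit=8_000)
print(f"payback in {days:.0f} days, passes filter: {pays_back_within(days)}")
```

A tool whose monthly cost exceeds its monthly benefit never pays back, which is why the function returns infinity rather than a negative number.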

Resist adding specialized tools for edge cases. Three focused layers create a stack your team understands, maintains, and improves.

90-day sprint execution

Day 1 starts with singular focus. Ship customer value or stop trying by day 90.

Days 1-30 define the battle. Choose one use case with measurable impact on revenue or costs. Find an owner who experiences the problem daily. Limit scope to data you control.

Create a one-page charter stating the baseline metric, naming the team, and listing required integrations. Your executive sponsor removes blockers within 24 hours. The data lead guarantees pipeline quality. The product lead drives adoption and reports weekly.

Days 31-60 test and adjust. Weekly 30-minute reviews cover metric changes, current blockers, and next experiments. Numbers improving? Accelerate. Stuck? Change the approach, not the goal.

Public kill criteria prevent zombie projects. No metric improvement by day 60 triggers review. A data blocker lasting more than two weeks forces a decision. Regulatory concerns require immediate assessment. Hit any trigger, make the call.
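Kill criteria only work if they are checked the same way every week. The sketch below encodes the three triggers above as one function you could run at each review. It assumes a metric where higher is better; the `ProjectStatus` fields and the sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProjectStatus:
    day: int                       # day of the 90-day sprint
    baseline: float                # metric value from the charter
    current: float                 # latest metric value
    data_blocked_days: int         # consecutive days blocked on data
    open_regulatory_concern: bool  # any unresolved concern raised by legal


def kill_triggers(status: ProjectStatus) -> list[str]:
    """Return every kill criterion the project has hit; an empty list means keep going."""
    triggers = []
    if status.day >= 60 and status.current <= status.baseline:
        triggers.append("no metric improvement by day 60: review")
    if status.data_blocked_days > 14:
        triggers.append("data blocked for more than two weeks: decide")
    if status.open_regulatory_concern:
        triggers.append("regulatory concern: assess immediately")
    return triggers


# Hypothetical week-nine review.
status = ProjectStatus(day=60, baseline=100.0, current=100.0,
                       data_blocked_days=15, open_regulatory_concern=False)
hits = kill_triggers(status)
for hit in hits:
    print(hit)
```

Post the output where the weekly metrics go. A non-empty list means someone makes the call that week.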

Days 61-90 scale or stop. Beating baseline metrics with stable integration? Create a deployment plan with architecture, support documentation, and training materials. Falling short? Write three slides explaining what failed and why.

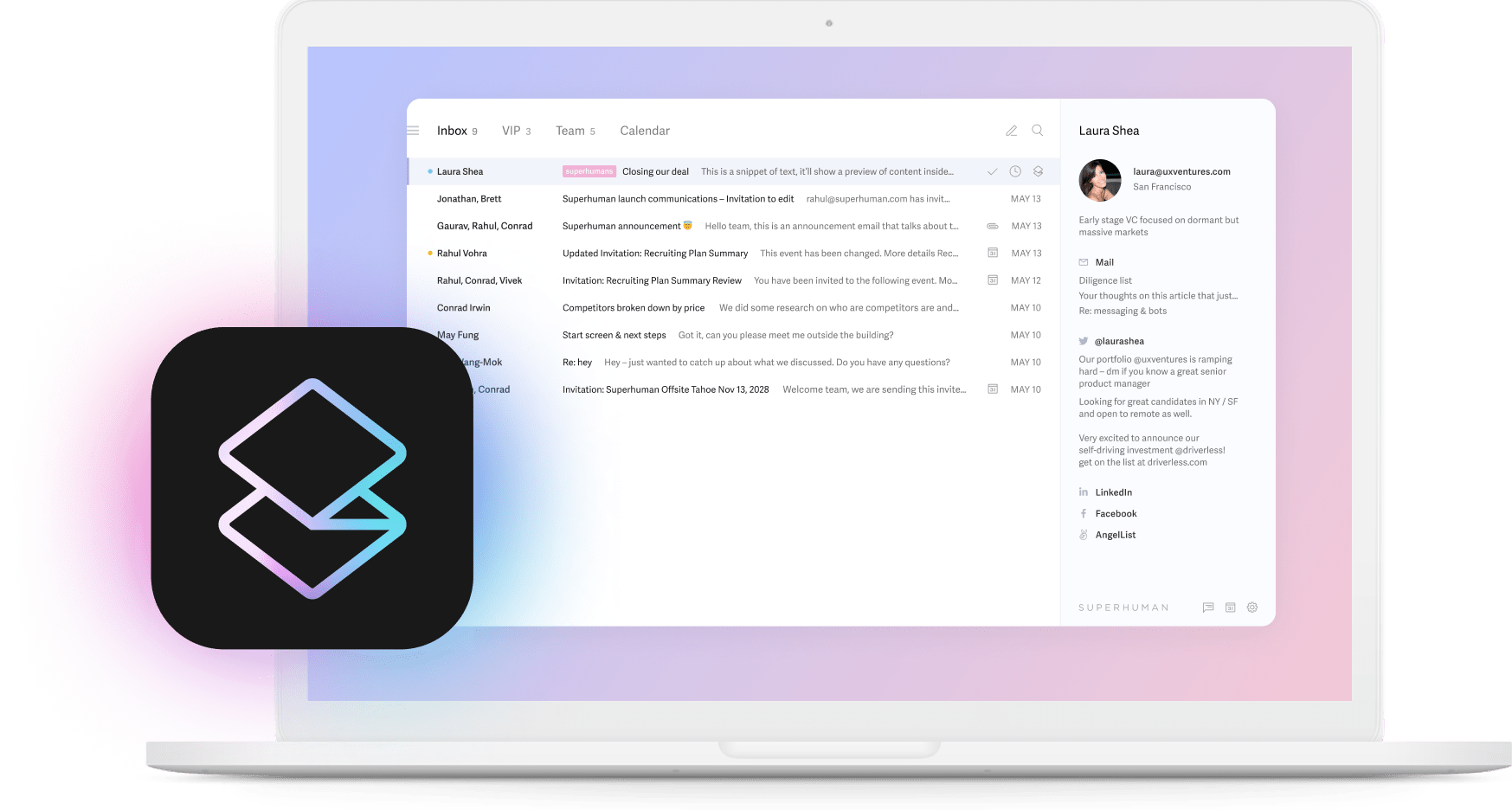

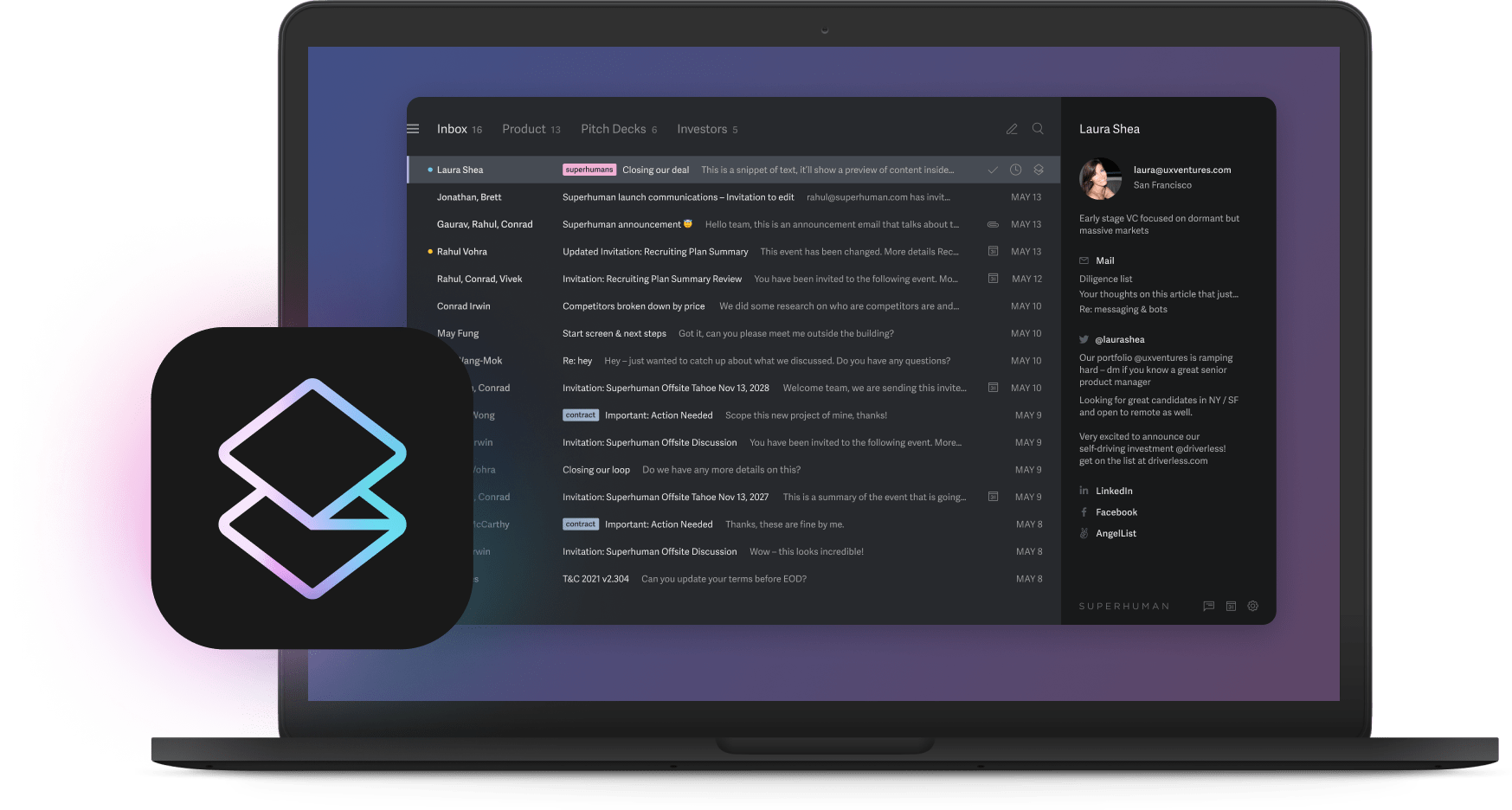

Publish metrics weekly where everyone sees them. Invite skeptics to demonstrations. Transparency builds trust and prevents endless pilot loops. Split Inbox demonstrates this principle, automatically organizing priorities so value becomes immediately visible.

Common denial patterns

Five behaviors predict failure.

Incrementalism celebrates tiny wins while revenue flatlines. Teams obsess over dashboard improvements that don't impact business outcomes. Fix by linking every sprint to revenue or cost metrics. Block new features until current ones deliver.

Innovation theater creates impressive demos for conferences, not customers. Require paying users within 30 days or kill the project. Reward shipped products, not presentations.

The perfect data excuse delays everything waiting for pristine datasets. Start with messy data. Document gaps. Improve gradually while maintaining momentum.

Vendor seduction happens when teams buy promises instead of proof. Test with your data first. Score vendors against objective criteria. Ignore roadmaps; judge current capabilities.

Consultant dependency outsources thinking while teams wait for recommendations. Keep consultants as advisors only. Employees own decisions, metrics, and execution.

Check weekly for these behaviors. Correct immediately. Denial compounds while competitors accelerate.

Real implementation patterns

Companies that successfully implement AI share documented approaches.

The dual transformation model improves current operations while building new capabilities simultaneously. Rilla's implementation enhanced existing sales processes while developing new customer insights capabilities.

Successful implementations follow strict timelines with clear metrics. UserGems set 90-day checkpoints that forced rapid iteration and prevented scope creep. They achieved measurable gains by limiting initial deployment to one defined use case before expanding.

The pattern repeats across case studies. Start with constrained scope. Measure obsessively. Kill failures quickly. Teams using focused AI features see significant time savings when implementation stays disciplined.

Troubleshooting objections

Three complaints appear immediately.

Data quality blocks progress? Every company starts with imperfect data. Use your cleanest subset. Add external data where helpful. Document problems for later. Keep building while improving.

Talent gaps worry leadership? Most teams don't need AI PhDs. Train current analysts through workshops. Bring in specialists for specific challenges. Temporary expertise plus permanent ownership beats endless recruiting.

Regulatory concerns from legal? Make compliance part of design from day one. Week 1 mapping identifies requirements and assigns owners. Monthly reviews catch new regulations early. Auto Summarize shows how AI features maintain privacy while delivering value.

Teams fix issues within 24 hours or escalate to product leads. Product leads escalate to sponsors. Sponsors decide before the next standup. Document decisions. Apply lessons immediately. This prevents the stagnation that kills 88% of AI projects.

Choose now between denial and dominance

Most enterprise pilots die slowly in committees while competitors ship to customers.

This week, fund a 48-hour vendor test with real data. Within 30 days, publish your honesty audit to leadership. Include uncomfortable truths about data quality, cultural resistance, and technical debt. Include specific plans to address each issue.

Master dual transformation. Fix today's operations while building tomorrow's advantages. Companies succeeding at both turn AI from cost center to profit engine.

Your competitors already chose action over analysis. Denial compounds daily. Dominance requires starting now.

Your 90-day clock starts today.