Picture a company drowning in AI strategy meetings, unable to move beyond endless planning decks while competitors quickly deploy new features and capture market share. The contrast shows how traditional AI strategy planning can strangle innovation rather than nurture it.

The cost of delaying implementation compounds as AI advances at breakneck speed. While you discuss hypothetical solutions in yet another meeting, competitors are launching features with nimble approaches, pairing ChatGPT-based applications with quick prototypes that reach customers within weeks.

The experimentation-first approach replaces long planning phases with swift action, moving teams from idea to execution in weeks instead of months. Instead of a cumbersome strategy, it relies on agile experimentation: build minimum viable solutions and test them in the market. In the AI era, speed and adaptability are your greatest assets.

The brutal truth about your 200-page AI deck

Imagine sitting in a boardroom as someone unveils a comprehensive 200-page AI strategy deck. The room starts out buzzing with excitement and promise, but as slide after slide passes, enthusiasm wanes. People tire, their attention numbed by detail, until the deck becomes an anchor weighing down progress.

Meanwhile, your competitor has picked up ChatGPT and shipped new features to customers. When plans turn into labyrinths of approvals and paperwork, they fall behind the technology they were written for, and teams stall before execution even begins.

Extensive planning often masquerades as productive activity while actually enabling procrastination. While committees deliberate, nimble competitors seize the moment. The process drains urgency and ownership from the people who could otherwise drive swift, iterative change. Misaligned stakeholders create disarray as teams juggle conflicting visions and priorities. By the time a plan is ready to implement, it is often already outdated, overtaken by more adaptive competitors.

Evidence of the planning death spiral

Consider two companies on Monday morning. A five-person startup spins up a customer support bot with open APIs. Six weeks later the bot answers real tickets and the team improves sentiment scores. Their playbook relies on lean sprints, off-the-shelf models, and a willingness to kill ideas that don't move metrics.

Across town, a Fortune 500 giant remains mired in alignment meetings nine months after executives green-lit an AI initiative. A 200-slide deck has spawned three subcommittees, yet not a single customer has touched the product. Many enterprise AI projects never reach production, and leaders frequently cite complex governance and unclear ownership as the culprits.

Why do heavyweights keep stalling? Silos produce parallel models that solve the same problem, drain budget, and never share what they learn. Misaligned incentives mean nobody wants to admit a pivot is needed when promotions hinge on owning the strategy. Weak feedback loops track milestones instead of learning, so teams miss the small, early failures that would have warned them before a catastrophic one.

The real cost of waiting

These delays bleed cash: six-figure consultant invoices arrive before a single line of code reaches production, when you could test the same idea tomorrow with a pay-as-you-go API that costs pennies. When pilots stall, the larger expense is opportunity cost. While your deck circulates for sign-off, competitors automate workflows, delight customers, and widen their competitive moat.

Every quarter you wait, investor calls get tougher because your AI strategy is still a line item on the budget instead of a source of value. Inside the company, frustrated employees turn to unsanctioned tools, creating a shadow AI economy that bypasses security and governance controls. Companies whose teams embrace new technology are 3x more likely to report significantly increased productivity. The bill for inaction includes sunk consulting costs, missed revenue, eroded morale, and a brand perception that you're trailing the wave.

Ship, learn, kill, repeat

Forget the 12-month roadmap. Innovation moves faster in tight loops that fit on a sticky note. The loop starts with a 24-hour idea capture: set a timer, gather the smallest team that can ship code, and list every pain point worth solving. Rank the ideas by potential impact and ease of testing. This quick sort creates focus and urgency.
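One way to make the impact-and-ease sort mechanical is to score each idea on two small scales and multiply. The sketch below assumes hypothetical 1-to-5 scores and a hypothetical `Idea` record; multiplying the two scores is one common heuristic, not a prescribed method.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    """A captured pain point with hypothetical 1-5 scores."""
    name: str
    impact: int  # how much solving it would matter (1 = little, 5 = a lot)
    ease: int    # how quickly a prototype could test it (1 = hard, 5 = trivial)

    def score(self) -> int:
        # Multiplying rewards ideas that are strong on both axes at once.
        return self.impact * self.ease


def rank_ideas(ideas: list[Idea]) -> list[Idea]:
    """Return ideas ordered from highest to lowest score (ties broken by name)."""
    for idea in ideas:
        if not (1 <= idea.impact <= 5 and 1 <= idea.ease <= 5):
            raise ValueError(f"scores for {idea.name!r} must be between 1 and 5")
    return sorted(ideas, key=lambda idea: (-idea.score(), idea.name))


if __name__ == "__main__":
    backlog = [
        Idea("Auto-tag support tickets", impact=4, ease=5),
        Idea("Rebuild search ranking", impact=5, ease=1),
        Idea("Summarize sales calls", impact=3, ease=4),
    ]
    for idea in rank_ideas(backlog):
        print(idea.score(), idea.name)
```

Running it lists ticket tagging first (score 20) and the search rebuild last (score 5): a high-impact idea that is hard to test drops below easier bets.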

Next comes the 72-hour prototype phase. Pull in off-the-shelf APIs or an open-source model and build something customers can touch. The key is moving from concept to demo in days, not quarters.

Before you start, set explicit kill criteria: write down the single metric that defines success and the threshold it must reach. If the prototype can't move that metric past the agreed threshold, shut it down. This habit prevents zombie projects and frees resources for the next bet.
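Writing the kill criteria down can be as literal as a small record created before the run. This is a minimal sketch: the metric name, baseline, and 10% threshold are hypothetical, and it assumes a higher metric value is better.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KillCriteria:
    """Success definition agreed before the prototype runs.

    frozen=True means the fields cannot be reassigned after creation,
    which discourages moving the goalposts once results come in.
    Assumes a higher metric value is better.
    """
    metric: str
    baseline: float   # metric value before the experiment
    min_lift: float   # required relative improvement, e.g. 0.10 for +10%

    def lift(self, observed: float) -> float:
        if self.baseline == 0:
            raise ValueError("baseline must be non-zero to compute relative lift")
        return (observed - self.baseline) / self.baseline

    def verdict(self, observed: float) -> str:
        """Return 'keep' if the observed value clears the threshold, else 'kill'."""
        return "keep" if self.lift(observed) >= self.min_lift else "kill"


if __name__ == "__main__":
    criteria = KillCriteria(metric="first_contact_resolution", baseline=0.40, min_lift=0.10)
    print(criteria.verdict(0.42))  # +5% relative lift, below the bar: kill
    print(criteria.verdict(0.45))  # +12.5% relative lift, clears the bar: keep
```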

The cycle concludes with a weekly learning review, a 30-minute huddle to share results, note surprises, and decide whether to scale, tweak, or kill. No slide decks required. Teams using rapid experimentation deliver features sooner and pivot earlier, cutting wasted spend. Small cross-functional squads move faster than siloed departments because decisions happen in the room, not three layers up.

For legal and compliance review, confirm that datasets are approved for experimentation, apply least-privilege access, and encrypt sensitive data. Ask whether the model could amplify bias or mislead customers. Set up automated monitoring for model drift and shifts in data patterns. Setting these guardrails up front clarifies boundaries and avoids last-minute escalations.
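Drift monitoring does not need a platform on day one. A minimal sketch, assuming you log a numeric model score or input feature for both a reference period and live traffic, is the Population Stability Index (PSI). The function names are hypothetical, and the 0.25 alert threshold is a widely used rule of thumb rather than a standard.

```python
import math


def population_stability_index(reference: list[float], live: list[float], bins: int = 10) -> float:
    """Compare the distribution of a feature (or model score) in live traffic
    against a reference sample using the Population Stability Index.

    Bins are equal-width over the reference range; live values outside that
    range are counted in the first or last bin.
    """
    if not reference or not live:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), 1e-6) for c in counts]

    ref, cur = shares(reference), shares(live)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def drift_alert(reference: list[float], live: list[float], threshold: float = 0.25) -> bool:
    """Flag drift when PSI exceeds the threshold (0.25 is a rule of thumb)."""
    return population_stability_index(reference, live) > threshold


if __name__ == "__main__":
    reference = [i / 100 for i in range(100)]       # e.g. scores seen during the pilot
    shifted = [0.9 + i / 1000 for i in range(100)]  # live scores bunched at the top
    print(drift_alert(reference, reference))  # False
    print(drift_alert(reference, shifted))    # True
```

Running this check on a schedule and paging someone when it returns `True` is enough to catch a shift before customers do.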

Your five-day playbook

Day one focuses on framing the problem and building a small team. Pick one pain point you can describe in a single sentence, then recruit three people who, between them, can build, understand the problem deeply, and make decisions. Small teams cut through approval cycles.

Day two involves checking your data and choosing a model. List what data you already have access to, identify gaps, and pick the fastest path forward. Off-the-shelf APIs usually beat custom training for prototypes.

Day three means building something that works once. Wire together a basic workflow using OpenAI Assistants, LangChain, Zapier, or Retool. Don't aim for perfection, just proof.

Day four brings testing with real people and fixing what breaks. Run your prototype with actual customers or stakeholders and note what works and what doesn't. Short cycles generate real learning instead of theoretical assumptions.

Day five is decision time. In a 30-minute meeting, check the prototype against its success metric. If it hits the metric, expand the audience. If it misses, document what you learned and move on. Both outcomes beat staying stuck in endless pilot mode.

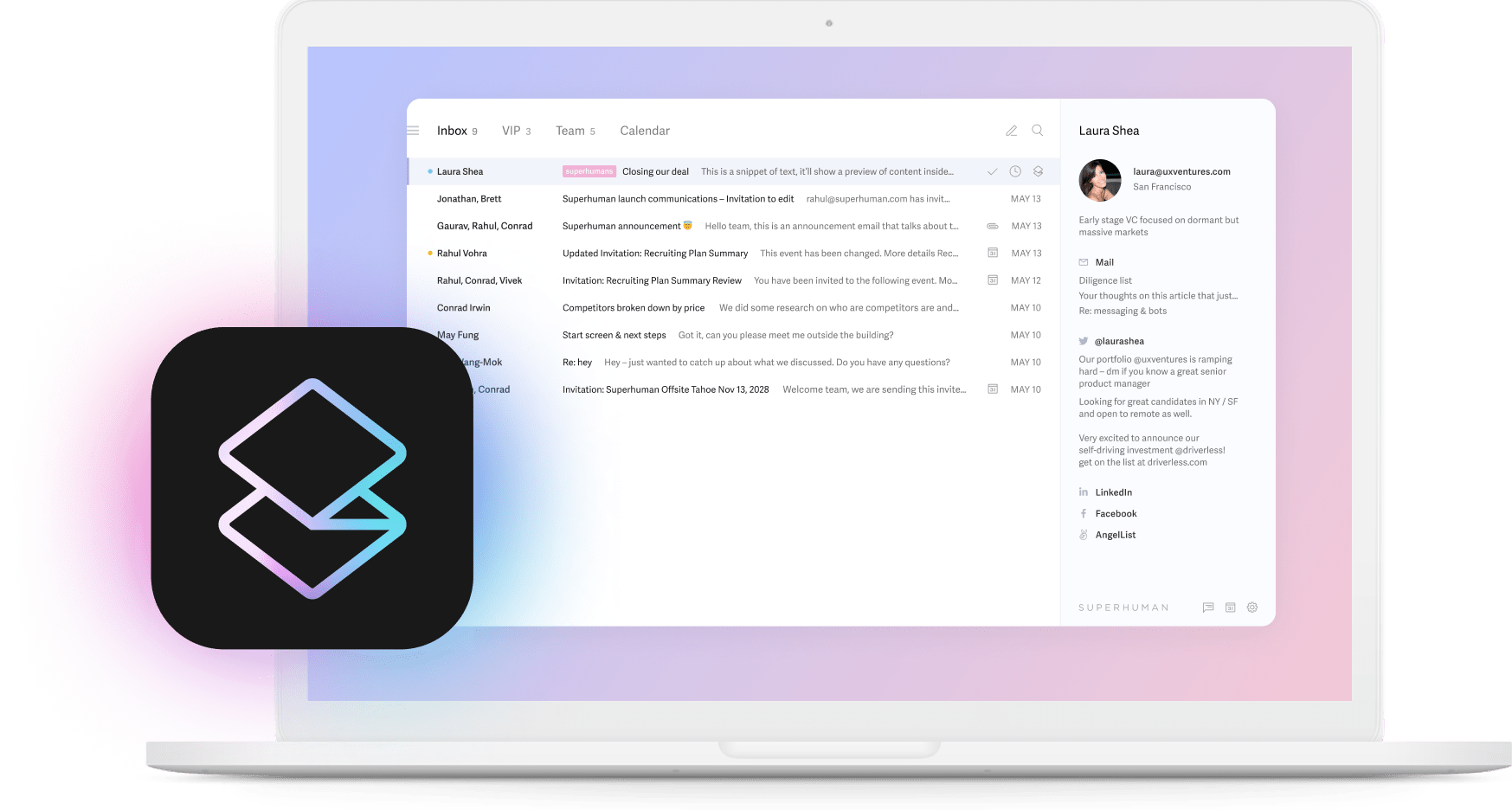

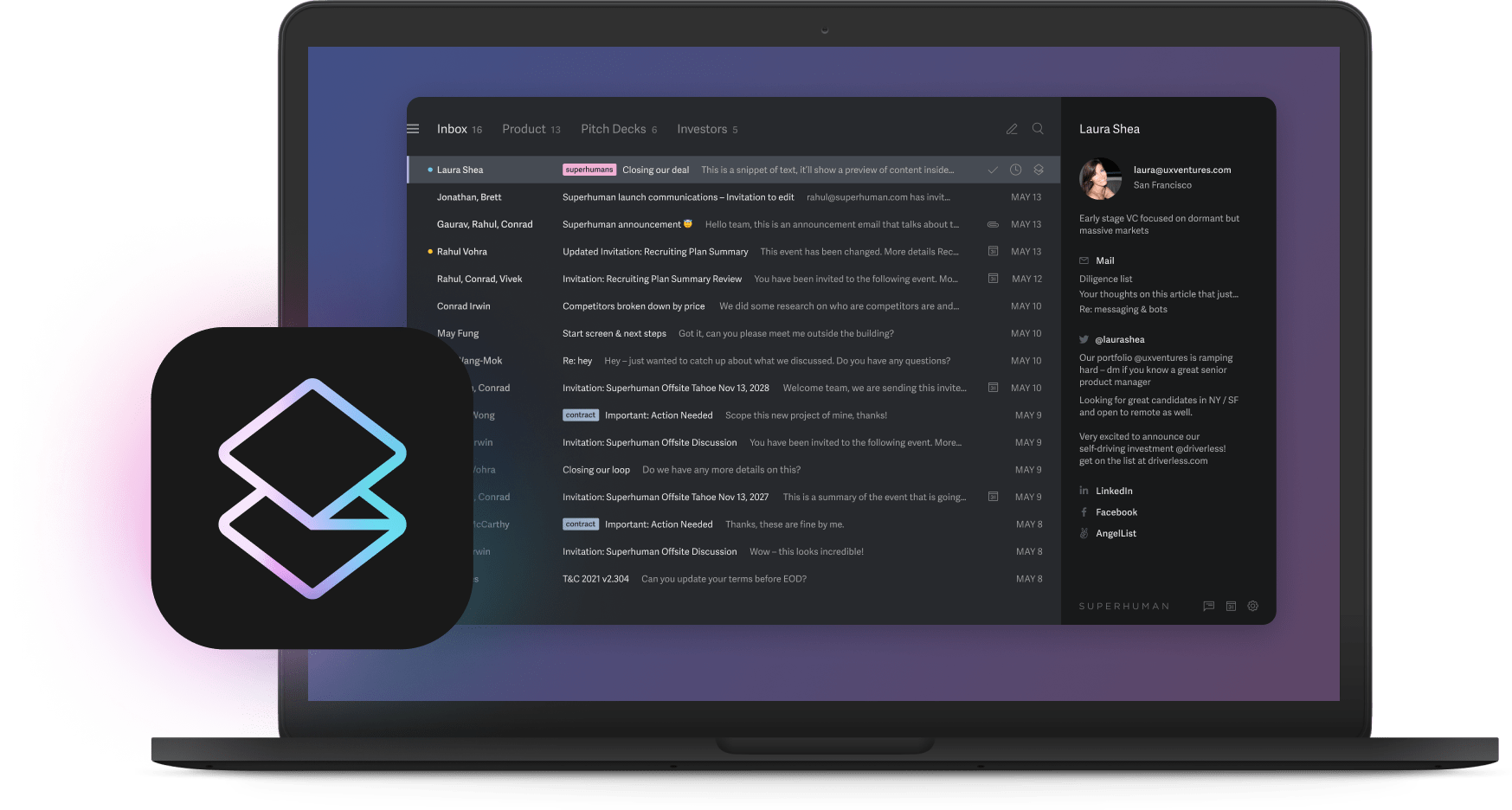

How Superhuman ships AI features before others finish planning

Superhuman has redefined email productivity by launching AI features within weeks rather than months. The company focuses on high-impact problems with small cross-functional teams that move quickly without bureaucratic overhead.

By prioritizing rapid testing with real users, Superhuman iterates continuously on feedback. The company says each user saves 4 hours every week, adding up to more than 15 million hours a year across its user base. Instant Reply learns from each user's writing style, so drafted replies keep a consistent, personal tone.

Split Inbox organizes emails so users focus on what matters, helping spot high-priority messages instantly. Important emails from colleagues and executives never get buried under newsletters and notifications. Auto Summarize condenses long email threads into digestible insights, letting teams grasp context in seconds rather than minutes.

Teams using Shared Conversations collaborate directly within email threads, with no need to forward messages or switch applications. The approach shows how experimentation can improve the user experience without sacrificing enterprise-grade reliability. The velocity comes from treating every feature as an experiment first, then scaling what works based on actual usage data.

Customer feedback drives continuous improvement rather than lengthy planning cycles. When users request features or report issues, Superhuman can prototype, test, and deploy solutions while competitors are still scheduling meetings to discuss possibilities.

Teams using Superhuman reply to twice as many emails in the same amount of time. They achieve inbox zero consistently. Most importantly, they spend less time on email administration and more time on work that drives their business forward.

Addressing concerns from perpetual planners

Every boardroom AI discussion ends with the same four concerns: governance, brand risk, technical debt, and scale. Governance worries dissolve when you build lightweight frameworks around ethical principles and automated model audits. Clear roles, real-time dashboards, and audit trails let you deploy fast while staying compliant.

Brand protection concerns are manageable when you start small. Ship to five percent of customers, monitor live metrics, and set clear kill switches. Controlled launches teach you more than they risk.
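A five percent launch with a kill switch can be sketched in a few lines. This assumes each customer has a stable string ID; `bucket` and `Rollout` are hypothetical names, and in practice a feature-flag service would usually hold this configuration.

```python
import hashlib
from dataclasses import dataclass


def bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket in [0, 100) so each user always
    gets the same experience across sessions."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % 100


@dataclass
class Rollout:
    """Hypothetical rollout config for one AI feature."""
    percent: int = 5      # share of customers who see the feature
    killed: bool = False  # kill switch: True turns the feature off for everyone

    def enabled_for(self, user_id: str) -> bool:
        if self.killed:
            return False
        return bucket(user_id) < self.percent


if __name__ == "__main__":
    rollout = Rollout(percent=5)
    users = [f"user-{n}" for n in range(10_000)]
    exposed = sum(rollout.enabled_for(u) for u in users)
    print(f"{exposed} of {len(users)} users see the feature")  # roughly 500

    rollout.killed = True  # a live metric went red: flip the switch
    print(sum(rollout.enabled_for(u) for u in users))  # 0
```

Hashing the ID instead of sampling randomly on each request keeps a customer's experience consistent, and raising `percent` widens the audience without reshuffling who already has the feature.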

Technical debt fears fade with modular architecture. Feature flags isolate new models from core systems, and rapid A/B tests show whether they earn their place. If something breaks, flip a switch instead of rewriting code.
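Flipping a switch can look like this in code: a flag decides whether the new model runs at all, and any failure falls back to the existing path. This is a minimal sketch; the `ai_replies` flag name and both reply functions are hypothetical stand-ins, and a plain dict takes the place of a real flag service.

```python
import logging
from typing import Callable

logger = logging.getLogger(__name__)


def route_reply(
    ticket: str,
    flags: dict[str, bool],
    new_model: Callable[[str], str],
    legacy: Callable[[str], str],
) -> str:
    """Send a ticket to the experimental model only when its flag is on,
    falling back to the existing path if the flag is off or the model fails."""
    if not flags.get("ai_replies", False):
        return legacy(ticket)
    try:
        return new_model(ticket)
    except Exception:
        # The experiment fails safe: log the error and serve the proven path.
        logger.exception("ai_replies model failed; falling back to legacy path")
        return legacy(ticket)


if __name__ == "__main__":
    def legacy_reply(ticket: str) -> str:
        return "Thanks for reaching out. An agent will reply shortly."

    def draft_reply(ticket: str) -> str:
        # Stand-in for a real model call.
        return f"Here is a draft answer about '{ticket}'."

    print(route_reply("reset password", {"ai_replies": False}, draft_reply, legacy_reply))
    print(route_reply("reset password", {"ai_replies": True}, draft_reply, legacy_reply))
```

Catching every exception is deliberately broad for a prototype, so a misbehaving model never takes down the core workflow; a production version would narrow it and alert on the logged errors.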

Scale anxiety is usually premature, since most scaling problems only matter once you have users. Build something scrappy, prove people want it, then invest in infrastructure. Teams that succeed focus on value creation first and optimization second.

Fast-moving teams combine these safeguards with weekly check-ins where continuous monitoring catches issues before customers notice. Ship small, measure everything, kill quickly when needed, and double down when numbers look good.

The path forward starts today

Traditional AI strategy planning has become a liability rather than an asset. While you craft elaborate presentations, competitors ship working products. While you align stakeholders, they delight customers. While you mitigate theoretical risks, they learn from real-world feedback.

The solution isn't more planning but less. Cancel the next AI steering committee meeting and schedule a prototype sprint instead. Identify a small cross-functional team that can pivot quickly. Secure relevant data, starting small. Define clear success metrics. Choose appropriate AI capabilities for the task. Set predefined kill criteria.

This approach bypasses the bureaucracy that strangles progress. Teams using AI can save a full workday every week, but only if they stop planning and start building. B2B professionals expect AI to triple productivity over the next five years. The companies that capture these gains won't be the ones with the best strategy decks but the ones with the most experiments under their belt.

Your competitors are already moving. While you schedule meetings, they ship features.

Send that meeting cancellation. Pick your pilot. Build something by Friday.