Your CFO just killed another AI proposal. You're not alone. Only 25% of AI initiatives have delivered expected ROI over the past three years. The technology works fine. The math doesn't.

You can change that. Start speaking cash flow instead of pitching technology. Map every AI opportunity to specific P&L friction. Build cost models that include the hidden expenses vendors never mention. Stage investments with kill switches. Package everything the way CFOs think, with NPV calculations and eighteen-month payback periods.

Finance teams using anomaly detection are identifying expense fraud patterns and saving millions. Numbers like that get attention.

The 5-minute CFO-first AI strategy

Your AI strategy fails at the CFO's desk because you built it on vendor promises instead of financial fundamentals. Fix that with five moves that finance teams understand.

First, find the cash drains. Look for friction points burning seven figures annually. Second, build the real cost model. Include data cleaning, change management, and those usage-tier price hikes that always come. Third, stage the spend through proof, pilot, and scale phases. Set kill-switch metrics at each gate. Fourth, neutralize portfolio risks like vendor lock-in and compliance exposure. Fifth, create a business case with conservative IRR and payback under eighteen months.

CFOs need three specific things. They want ROI, payback period, and total cost of ownership. Miss any of those, and your proposal joins the rejection pile. 38% of CFOs remain undecided about AI's cost versus risk, largely due to concerns about specialized talent and increased risk exposure.

The urgency comes from timing. Most AI proposals die within one budget cycle because they can't translate experiments into financial metrics. You need a week-by-week roadmap with explicit cost ceilings and hard-coded exit gates.

Step 1: Identify high-cost friction points and quantify the cash drain

Start hunting for problems that quietly drain at least $1 million annually. Check your P&L for chronic write-offs, ballooning overtime, or revenue stuck in slow processes.

Connect each friction point to a financial line item. Pull metrics from systems you already own. Your ERP shows how many days transactions sit before booking. Customer churn percentages translate to lost gross margin. Days sales outstanding reveals cash trapped in receivables. Help-desk backlogs expose productivity losses.

These baseline numbers matter. Finance teams won't fund anything without a clear starting point. CFOs back AI when the business goal is explicit and measurable.

Pull twelve months of ERP, CRM, and service-desk data. Rank friction points by annual cash impact, prioritizing anything above $1 million. Document baseline metrics like cycle time, error rate, and labor hours in one spreadsheet. Confirm with Finance that numbers tie to the P&L.

Score each pain point by cash drain per month. A $100,000 monthly bleed beats a $20,000 problem, even if the smaller one seems more innovative. This financial triage keeps you focused on value the CFO can measure and defend.
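The triage above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed tool: the `FrictionPoint` class, the `rank_friction_points` helper, and every dollar figure are hypothetical, and the $1 million annual floor is taken from the threshold mentioned in this step.

```python
from dataclasses import dataclass


@dataclass
class FrictionPoint:
    name: str
    pl_line: str           # P&L line item where the drain shows up
    monthly_drain: float   # estimated cash drain per month, in dollars

    @property
    def annual_drain(self) -> float:
        return self.monthly_drain * 12


def rank_friction_points(points, annual_floor=1_000_000):
    """Keep points at or above the annual floor, largest monthly drain first."""
    qualifying = [p for p in points if p.annual_drain >= annual_floor]
    return sorted(qualifying, key=lambda p: p.monthly_drain, reverse=True)


# Hypothetical figures for illustration only.
candidates = [
    FrictionPoint("Invoice write-offs", "Bad debt expense", 100_000),
    FrictionPoint("Help-desk backlog", "SG&A labor", 20_000),
    FrictionPoint("Fulfilment overtime", "COGS labor", 85_000),
]

for p in rank_friction_points(candidates):
    print(f"{p.name}: ${p.annual_drain:,.0f}/yr ({p.pl_line})")
```

With these sample inputs, the $20,000-a-month problem drops out because it falls below the annual floor, and the remaining two are listed by size of drain.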

Step 2: Build the true cost model without vendor fog

Your budget unravels when you ignore costs that never appear in vendor demos. CFOs know this, which explains why proposals stall once Finance sees the real numbers. AI value is hard to measure when part of the spend stays hidden.

List every line item touching cash, not just licenses. Common blind spots include data cleaning, system integration, model retraining, change management, and usage-tier price hikes. True investment analysis factors in training and support across the entire lifecycle.

Build a transparent TCO table showing realistic projections. Year 1 typically includes data preparation, integration costs, cloud consumption, training programs, change management, and ongoing support. These commonly reach $250,000 to $300,000 for mid-size deployments. Year 3 projections often climb 50% to 60% higher as usage scales and complexity grows.

Keep formulas transparent so Finance can audit assumptions. Budgets rarely stay flat. Consider capped escalation clauses, milestone payments, and internal chargebacks to control costs. Walk into the review with your TCO sheet first. Highlight hidden costs you've surfaced. Show how every number flows into payback calculations.
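One way to keep the TCO formulas auditable is to hold every line item and the growth assumption in plain view. The sketch below is illustrative only: the line-item amounts and the 25% annual growth rate are assumptions chosen so that Year 1 lands inside the $250,000 to $300,000 range and Year 3 lands 50% to 60% higher, as described above. `project_tco` is a hypothetical helper, and applying one growth rate to all lines is a simplification.

```python
# Hypothetical Year 1 line items, in dollars. Replace with audited figures.
YEAR1_COSTS = {
    "data_preparation": 60_000,
    "integration": 55_000,
    "cloud_consumption": 45_000,
    "training_programs": 30_000,
    "change_management": 40_000,
    "ongoing_support": 40_000,
}


def project_tco(year1_costs, annual_growth, years=3):
    """Return per-year totals, compounding one growth rate across all lines."""
    year1_total = sum(year1_costs.values())
    return [year1_total * (1 + annual_growth) ** n for n in range(years)]


# Assumed growth rate; in practice each line would carry its own escalator.
totals = project_tco(YEAR1_COSTS, annual_growth=0.25)
for year, total in enumerate(totals, start=1):
    print(f"Year {year}: ${total:,.0f}")
print(f"Three-year TCO: ${sum(totals):,.0f}")
```

Because the growth rate is a named input rather than a buried constant, Finance can swap in a capped escalation clause and see the effect on the three-year total immediately.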

Step 3: Craft the staged investment plan

Structure investments in three stages with defined budgets and timeframes. Each phase builds on previous success while controlling risk.

Stage 1 focuses on proof of concept over 90 days. Budget typically ranges from $200K to $300K depending on scope. You demonstrate feasibility without major commitment. Success criteria should include model accuracy thresholds, user adoption metrics, and budget variance limits.

Stage 2 extends to a pilot phase over 180 days. Budget scales to $500K to $1M for controlled operational testing. The goal is to validate the approach at limited scale while building organizational confidence.

Stage 3 moves to full deployment over 12 to 18 months. Budget depends on pilot results but commonly ranges from $2M to $5M for enterprise-wide rollout. Expansion must be managed carefully to prevent scope creep.

Phased approaches tend to improve success rates because each stage has to earn the next tranche of funding. Establish kill-switch criteria at every gate: minimum adoption rates, cost overrun caps, and accuracy thresholds. Any stage can stop if metrics slip, protecting the overall investment.
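To show what staging buys you, it helps to tabulate how much cash is out the door if the program stops after each stage. The sketch below uses the budget ranges quoted for the three stages. The `cumulative_exposure` helper is hypothetical, and it assumes each stage's budget is fully spent before its gate.

```python
# Budget ranges (low, high) in dollars, taken from the three stages above.
STAGES = [
    ("Proof of concept", 200_000, 300_000),
    ("Pilot", 500_000, 1_000_000),
    ("Full deployment", 2_000_000, 5_000_000),
]


def cumulative_exposure(stages):
    """Running total of spend committed by the end of each stage."""
    rows = []
    low_total, high_total = 0, 0
    for name, low, high in stages:
        low_total += low
        high_total += high
        rows.append((name, low_total, high_total))
    return rows


for name, low, high in cumulative_exposure(STAGES):
    print(f"Stop after {name}: ${low:,} to ${high:,} spent")
```

A program halted at the first gate has spent at most $300,000 under these ranges, against as much as $6.3 million if all three stages run to completion at the top of their ranges.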

Step 4: De-risk with CFO-ready mitigation

Your CFO won't approve anything without clear downside protection. Score every initiative on a 3×3 heat map plotting probability against financial impact. Red demands immediate controls. Yellow needs monitoring. Green can wait.

Address five critical threats. Vendor lock-in gets neutralized through multi-cloud contracts with 60-day redeploy clauses. CFOs value exit options. Price escalation stays controlled through usage caps and performance-tied payments.

Implementation failure gets contained by gating funding through phases. Set clear criteria for cost overruns and adoption rates. Adoption resistance is countered by budgeting for upskilling early. Strong cross-functional collaboration, including finance, operations, and data science input, drives change management success.

Regulatory exposure demands embedding data governance with role-based access, audit trails, and model explainability before launch. Transparent controls reduce compliance risk. 78% of finance chiefs are concerned about cybersecurity threats impacting financial operations in 2025.

Present your portfolio with the heat map first. Walk through each mitigation, its cost, and residual exposure. Close with a one-page summary showing measurable impacts and operational safeguards.
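The 3×3 heat map from this step can be sketched as a small scoring function. Everything here is an assumption made for illustration: the probability-times-impact score, the cut-offs between red, yellow, and green, and the ratings assigned to the five threats. Your risk team's own scale should replace them.

```python
LEVELS = {"low": 1, "medium": 2, "high": 3}


def heat_map_color(probability: str, impact: str) -> str:
    """Classify a risk on a 3x3 grid. Cut-offs are illustrative assumptions."""
    score = LEVELS[probability] * LEVELS[impact]
    if score >= 6:
        return "red"      # immediate controls
    if score >= 3:
        return "yellow"   # monitor
    return "green"        # can wait


# Hypothetical (probability, financial impact) ratings for the five threats.
risks = {
    "Vendor lock-in": ("medium", "high"),
    "Price escalation": ("high", "medium"),
    "Adoption resistance": ("medium", "medium"),
    "Regulatory exposure": ("low", "high"),
    "Implementation failure": ("low", "medium"),
}

for name, (probability, impact) in risks.items():
    print(f"{name}: {heat_map_color(probability, impact)}")
```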

Step 5: Package the business case your CFO wants

CFOs approve AI when numbers, risks, and exits fit on one page. Structure your case like any capex request, then add the AI-specific nuances they watch for.

Start with five sentences covering total investment, conservative return range, payback period, and the three biggest mitigated risks. Show upside and downside using 20/60/20 sensitivity bands: a twenty percent probability of lagging plan, sixty percent of hitting it, and twenty percent of beating it. Include the break-even month and the cash-flow curve. Your base case must clear hurdle rates even if adoption stalls or costs creep.
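A sensitivity sheet of this kind reduces to two calculations per scenario: net present value and the break-even month. The sketch below is a rough illustration under stated assumptions. The upfront spend, the monthly net benefits, the 36-month horizon, and the 10% annual hurdle rate are all hypothetical, and `npv` and `payback_month` are helper names introduced here rather than functions from any particular library.

```python
def npv(rate, cash_flows):
    """Net present value; cash_flows[0] falls in period 0 and is not discounted."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def payback_month(cash_flows):
    """First period where cumulative cash flow is non-negative, else None."""
    cumulative = 0.0
    for month, cf in enumerate(cash_flows):
        cumulative += cf
        if cumulative >= 0:
            return month
    return None


# Hypothetical inputs: upfront spend, monthly net benefit per scenario,
# and an assumed 10% annual hurdle rate converted to a monthly rate.
UPFRONT = 270_000
HORIZON_MONTHS = 36
MONTHLY_RATE = 1.10 ** (1 / 12) - 1
SCENARIOS = {  # name: (probability, monthly net benefit)
    "downside": (0.20, 15_000),
    "base": (0.60, 20_000),
    "upside": (0.20, 26_000),
}

weighted_npv = 0.0
for name, (prob, benefit) in SCENARIOS.items():
    flows = [-UPFRONT] + [benefit] * HORIZON_MONTHS
    scenario_npv = npv(MONTHLY_RATE, flows)
    weighted_npv += prob * scenario_npv
    print(f"{name}: NPV ${scenario_npv:,.0f}, payback month {payback_month(flows)}")
print(f"Probability-weighted NPV: ${weighted_npv:,.0f}")
```

Under these sample inputs the downside scenario breaks even in month 18, which is the kind of worst-case figure the one-page summary should state explicitly.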

Add an exit strategy with gates at months 3, 6, and 12. Show when you'll cut losses: if adoption sits below 60%, overruns top 15%, or accuracy drops under 70%. Attach vendor unwind plans showing liability ceilings.
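The exit criteria are simple enough to encode directly, which removes any debate at the gate about whether a threshold was missed. The sketch below uses the 60% adoption, 15% overrun, and 70% accuracy thresholds from this step. The `GateReading` class, the `gate_breaches` helper, and the sample readings are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GateReading:
    month: int
    adoption_rate: float   # share of target users active, 0 to 1
    cost_overrun: float    # spend above budget as a fraction of budget
    accuracy: float        # model accuracy on the agreed benchmark, 0 to 1


def gate_breaches(r, min_adoption=0.60, max_overrun=0.15, min_accuracy=0.70):
    """Return the list of kill-switch criteria this reading violates."""
    breaches = []
    if r.adoption_rate < min_adoption:
        breaches.append("adoption")
    if r.cost_overrun > max_overrun:
        breaches.append("cost overrun")
    if r.accuracy < min_accuracy:
        breaches.append("accuracy")
    return breaches


# Hypothetical readings at the month 3 and month 6 gates.
readings = [
    GateReading(month=3, adoption_rate=0.66, cost_overrun=0.05, accuracy=0.81),
    GateReading(month=6, adoption_rate=0.58, cost_overrun=0.17, accuracy=0.78),
]

for r in readings:
    failed = gate_breaches(r)
    verdict = "STOP: " + ", ".join(failed) if failed else "continue"
    print(f"Month {r.month}: {verdict}")
```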

Connect benefits to metrics finance tracks. Cost reduction, working capital gains, and margin lift matter. Show how AI improves decision quality and cycle time. When the model, risks, and off-ramps are this clear, approval becomes straightforward.

Success metrics and reporting cadence

Separate leading signals from hard outcomes and report both on the finance calendar's rhythm. Review leading indicators monthly. Track model accuracy, user adoption, and cycle-time reduction. Report lagging indicators quarterly. Measure cost savings, incremental revenue, and working capital gains.

Map every metric to P&L lines. Faster invoice matching cuts DSO, which shows in working capital. Better forecast accuracy improves inventory turns, lifting gross margin. 85% of CFOs identify forecasting accuracy as a primary area needing improvement.
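As a worked example of mapping an operational metric to working capital, the sketch below converts a DSO improvement into cash released. It uses the standard definition of days sales outstanding (receivables divided by revenue, times the days in the period). The revenue, receivables, and three-day improvement are hypothetical figures, and `dso` and `cash_released` are helper names introduced here.

```python
def dso(accounts_receivable, annual_revenue, days=365):
    """Days sales outstanding: how long revenue sits in receivables."""
    return accounts_receivable / annual_revenue * days


def cash_released(annual_revenue, dso_reduction_days, days=365):
    """One-time working capital freed by collecting this many days sooner."""
    return annual_revenue / days * dso_reduction_days


# Hypothetical company: $146M annual revenue, $18M in receivables.
REVENUE = 146_000_000
RECEIVABLES = 18_000_000

print(f"Baseline DSO: {dso(RECEIVABLES, REVENUE):.1f} days")
print(f"Cash released by a 3-day cut: ${cash_released(REVENUE, 3):,.0f}")
```

Note that this is a one-time release of cash rather than a recurring saving, so it belongs in the working capital line of the business case, not in annual cost reduction.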

Build one dashboard. The top pane tracks monthly metrics with red-amber-green flags. The bottom pane shows quarterly financial outcomes in the same format as the board pack. Add toggles for best, base, and downside scenarios.

Publish before regular close reviews. Walk your CFO through variances and fixes. Adjust tracking as projects mature. Pilots focus on accuracy and adoption. Scale shifts to cash savings and margin lift.

Proven implementation patterns

Organizations succeeding with AI share common patterns. They start with narrowly defined use cases, maintain strict financial discipline, and scale only after proving value. More than 80% of organizations aren't seeing tangible impact on enterprise-level EBIT from their use of gen AI yet, which reinforces the need for careful implementation.

Demand forecasting remains a popular starting point because results are measurable and ROI is clear. Companies typically see inventory reductions of 10% to 15% while maintaining or improving fill rates. The key is starting with one distribution center or product line before expanding.

Conversely, chatbot implementations frequently fail when accuracy falls below 70%. The lesson learned across industries is that kill-switch thresholds must be enforced regardless of sunk costs. Projects that miss early metrics rarely recover.

Finance teams can leverage tools like Auto Summarize to quickly document and share lessons learned across the organization, ensuring knowledge transfer between projects.

CFO conversation playbook

When you walk into that meeting, lead with value. Say "Even in the worst case, this project's NPV is positive from month fourteen onward." You've just framed everything around cash, not hype.

The first pushback usually sounds like "We tried AI before." Keep your response simple. "Staged gates cap downside at $250K. If adoption, cost, or accuracy slip, we shut down before real money leaves."

When they question your ROI projections, slide over your sensitivity table showing 20/60/20 bands: a base case of 28%, a pessimistic case of 7%, and an optimistic case of 42%. Link each scenario directly to operating cash flow.
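If the CFO asks for a single expected figure, the three scenario returns above can be weighted by the 20/60/20 probabilities. The arithmetic below assumes the pessimistic and optimistic cases each carry 20% and the base case carries 60%.

```latex
\begin{aligned}
E[\text{return}] &= 0.20 \times 7\% + 0.60 \times 28\% + 0.20 \times 42\% \\
                 &= 1.4\% + 16.8\% + 8.4\% \\
                 &= 26.6\%
\end{aligned}
```

The weighted figure sits slightly below the base case because the pessimistic case falls further from plan (21 points) than the optimistic case rises above it (14 points).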

Keep vocabulary familiar throughout. Talk capex versus opex, working capital, payback periods. Skip "transformative neural architecture." Translate accuracy into DSO reduction or fraud avoidance.

Address silent worries before they ask. Data integrity is protected because the pilot pulls only validated ERP data with audit trails intact. Model reliability is assured through predefined accuracy benchmarks that gate funding. Compliance is maintained because models log every decision for SOX review.

Close by asking what additional comfort they need for Stage 1. Stay on numbers, speak CFO language, leave with a clear path forward.

Your path forward

The best time to start is this week. Identify and quantify your biggest pain points, then gather current cost data to draft your initial TCO. Next week, lock down your Stage 1 scope, define kill-switch criteria, and secure training budgets. Before meeting your CFO, build your 20/60/20 sensitivity sheet, map all metrics to P&L lines, sketch your risk heat map, and prepare your exit strategy brief.

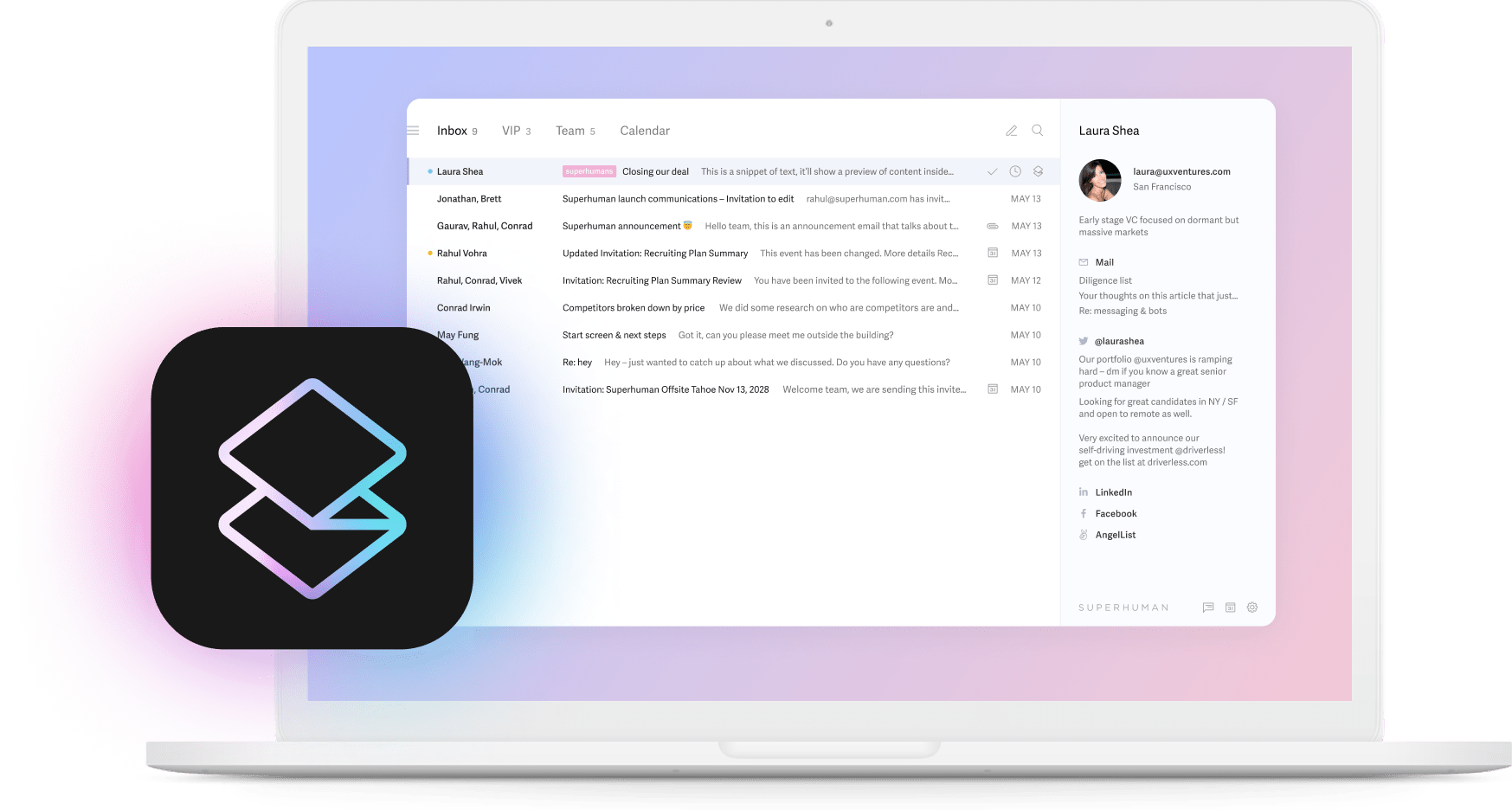

Industry-leading companies are 3x more likely to report significant productivity gains from AI. Teams using Superhuman save 4 hours weekly through AI-powered features. Your finance team can see similar results when AI implementation follows proven financial frameworks.

Show the math, control the risk, stage the investment. Your enterprise AI strategy passes the review that matters most when you speak the language of finance from the start.