Here's the uncomfortable truth: 78% of organizations use AI in at least one function, yet only 1% call themselves truly AI mature. The gap isn't about technology; it's about measurement.

Leaders who track real usage are 3x more likely to report significant productivity gains, while everyone else throws money at tools and hopes for the best. Meanwhile, 92% plan to increase AI investments over the next three years, but most can't prove what they're getting back.

The measurement playbook that separates top performers from everyone else isn't complicated. Every dollar you spend on AI can turn into visible results if you know what to track.

What are AI adoption metrics?

AI adoption metrics are quantitative measurements that track how organizations implement, use, and derive value from artificial intelligence technologies. These metrics measure user engagement rates, feature utilization patterns, workflow automation percentages, and business outcomes directly attributable to AI implementation.

Unlike traditional IT metrics that focus on system uptime or license counts, AI adoption metrics specifically capture the depth of integration and actual value generation from AI tools across different departments and use cases.

Think of these metrics as scorecards that show whether your company's AI-native tools are moving the needle. Where traditional digital transformation KPIs stop at rollouts and uptime, AI adoption metrics dig deeper, tracking daily active employees, feature interaction, and the share of workflows AI now automates.

That shift from deployment to depth matters because owning a model or chat interface is very different from squeezing measurable results out of it.

A solid measurement framework links every AI action to a business outcome. If sales reps close deals faster, marketing drafts campaigns in hours instead of days, or engineers ship code with fewer bugs, the metric should capture that lift and tie it to revenue or productivity.

Without this chain of evidence, AI spend turns into expensive shelfware.

Core AI adoption metrics for enterprise leaders

If you can't measure how your team uses AI, you can't improve it. Four types of metrics give you a clear picture of what's working, what isn't, and where you're seeing business value.

Key metric categories to track:

- Usage penetration: Daily active users versus monthly ones, department-by-department adoption rates, and feature utilization patterns

- Engagement depth: Time saved on core workflows, number of daily interactions per user, and percentage of power users

- Business impact: Revenue contribution, response time improvements, and product development cycle reductions

- Quality outcomes: Error rate reductions, customer satisfaction improvements, and compliance accuracy gains

Usage penetration helps you understand reach across your organization. Track how many employees in each department open an AI tool every day. The numbers tell a different story department by department, with engineering teams often hitting 90% adoption while finance hovers around 40%.
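Usage penetration comes down to two simple ratios: the share of each department's headcount that used an AI tool on a given day, and DAU/MAU "stickiness." The sketch below is a minimal illustration, assuming you can export one record per user per active day with a department label, plus a headcount per department. The record layout, the `department_adoption` and `stickiness` helpers, and the sample numbers are all hypothetical.

```python
from collections import defaultdict
from datetime import date

# Hypothetical usage log: (user_id, department, day the user opened an AI tool)
events = [
    ("u1", "engineering", date(2025, 3, 3)),
    ("u2", "engineering", date(2025, 3, 3)),
    ("u3", "finance", date(2025, 3, 3)),
    ("u1", "engineering", date(2025, 3, 4)),
    ("u3", "finance", date(2025, 3, 20)),
]
# Hypothetical headcount per department (the denominator for adoption rate)
headcount = {"engineering": 2, "finance": 5}


def department_adoption(events, headcount, day):
    """Share of each department's employees who used an AI tool on `day`."""
    active = defaultdict(set)
    for user, dept, d in events:
        if d == day:
            active[dept].add(user)
    return {dept: len(active[dept]) / n for dept, n in headcount.items()}


def stickiness(events, day):
    """DAU / MAU: users active on `day` over users active in that calendar month."""
    dau = {u for u, _, d in events if d == day}
    mau = {u for u, _, d in events if (d.year, d.month) == (day.year, day.month)}
    return len(dau) / len(mau) if mau else 0.0


print(department_adoption(events, headcount, date(2025, 3, 3)))
# {'engineering': 1.0, 'finance': 0.2}
print(round(stickiness(events, date(2025, 3, 4)), 2))
# 0.33
```

Counting distinct users with a set keeps a heavy user from inflating the rate. This sketch uses the calendar month for MAU; a trailing 30-day window is another common choice.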

Engagement depth reveals how thoroughly teams work with these tools. Monitor which features people use, how many interactions each person has daily, and how much time they save on core workflows.
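Engagement depth can be summarized the same way. Here is a minimal sketch, assuming you have per-user counts of daily AI interactions and before/after minutes for one core workflow. The 20-interaction power-user threshold, the `engagement_depth` helper, and the sample data are illustrative assumptions, not an industry standard.

```python
from statistics import mean

# Hypothetical per-user stats for one team on one day:
# user -> (AI interactions, minutes on a core workflow before AI, minutes with AI)
daily = {
    "ana": (25, 60, 35),
    "ben": (4, 45, 40),
    "chi": (12, 30, 20),
    "dev": (30, 90, 50),
}
POWER_USER_THRESHOLD = 20  # assumption: 20+ daily interactions = power user


def engagement_depth(daily, threshold=POWER_USER_THRESHOLD):
    """Average interactions, power-user share, and average minutes saved."""
    interactions = [i for i, _, _ in daily.values()]
    saved = [before - after for _, before, after in daily.values()]
    power_users = sum(1 for i in interactions if i >= threshold)
    return {
        "avg_interactions_per_user": mean(interactions),
        "power_user_share": power_users / len(daily),
        "avg_minutes_saved": mean(saved),
    }


print(engagement_depth(daily))
```

Keeping the threshold as a parameter makes it easy to tune per team, since what counts as heavy use in engineering may look different in finance.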

Business impact metrics connect AI use to outcomes. Financial services leaders link AI-guided trading to higher win rates. Professional services firms often see faster client turnaround, sometimes 30% or more, once they automate document review with tools like Auto Summarize.

Quality outcomes track accuracy and satisfaction improvements. Lower error rates in credit scoring, higher customer support satisfaction scores, and fewer defects per thousand lines of code when developers use AI assistance all confirm that speed gains don't come at the expense of experience or compliance.

Many companies combine these signals into an AI maturity index covering strategy, infrastructure, culture, and governance. When you bring all four metric types into one view, raw usage data transforms into a roadmap for higher productivity and faster growth.
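One simple way to build such an index is a weighted average of dimension scores. The sketch below assumes each of the four dimensions named above has already been scored on a 0-100 scale (how you derive those scores from usage, engagement, impact, and quality data is up to you). The equal weights, the `maturity_index` helper, and the sample scores are hypothetical.

```python
# Hypothetical 0-100 scores for the four maturity dimensions
scores = {"strategy": 70, "infrastructure": 55, "culture": 40, "governance": 65}
# Assumed weights; equal weighting is one simple choice, not a standard
weights = {"strategy": 0.25, "infrastructure": 0.25, "culture": 0.25, "governance": 0.25}


def maturity_index(scores, weights):
    """Weighted average of dimension scores, normalized by total weight."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total = sum(weights.values())
    return sum(scores[k] * w for k, w in weights.items()) / total


index = maturity_index(scores, weights)
weakest = min(scores, key=scores.get)  # the dimension to invest in next
print(round(index, 1), weakest)
# 57.5 culture
```

Normalizing by total weight means the weights don't have to sum to 1, and reporting the weakest dimension alongside the headline number is what turns the index into a roadmap.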

AI adoption benchmarks by sector: Tech, professional services, and finance

Software engineering leads the charge with 51% adoption of code copilots, establishing developers as AI's earliest power users. GitHub Copilot's rapid ascent to $300 million in revenue validates this momentum, while emerging tools like Codeium and Cursor expand the ecosystem.

Marketing is the other standout: 53% of organizations name it their fastest-adopting department, as GenAI transforms content creation, customer insights, and campaign optimization at unprecedented speed.

Finance sits more conservatively at 40-45% adoption for traditional AI operations, focusing on high-stakes applications like fraud detection, risk modeling, and compliance workflows.

While 70% of CFOs plan GenAI investments for 2025, current implementation lags behind technical departments. Early adopters are seeing dramatic efficiency gains in repetitive tasks like reconciliations, reporting, and transaction processing.

The department-level disparities reveal significant untapped potential. Software engineering's 51% adoption compared to finance's 40-45% represents not just a gap, but an opportunity. Marketing's rapid acceleration demonstrates what's possible when departments embrace AI beyond cautious pilots.

Even within organizations claiming strong AI adoption, these variations suggest pockets of unrealized value, particularly in risk-averse functions that could benefit most from automation's precision and scale.

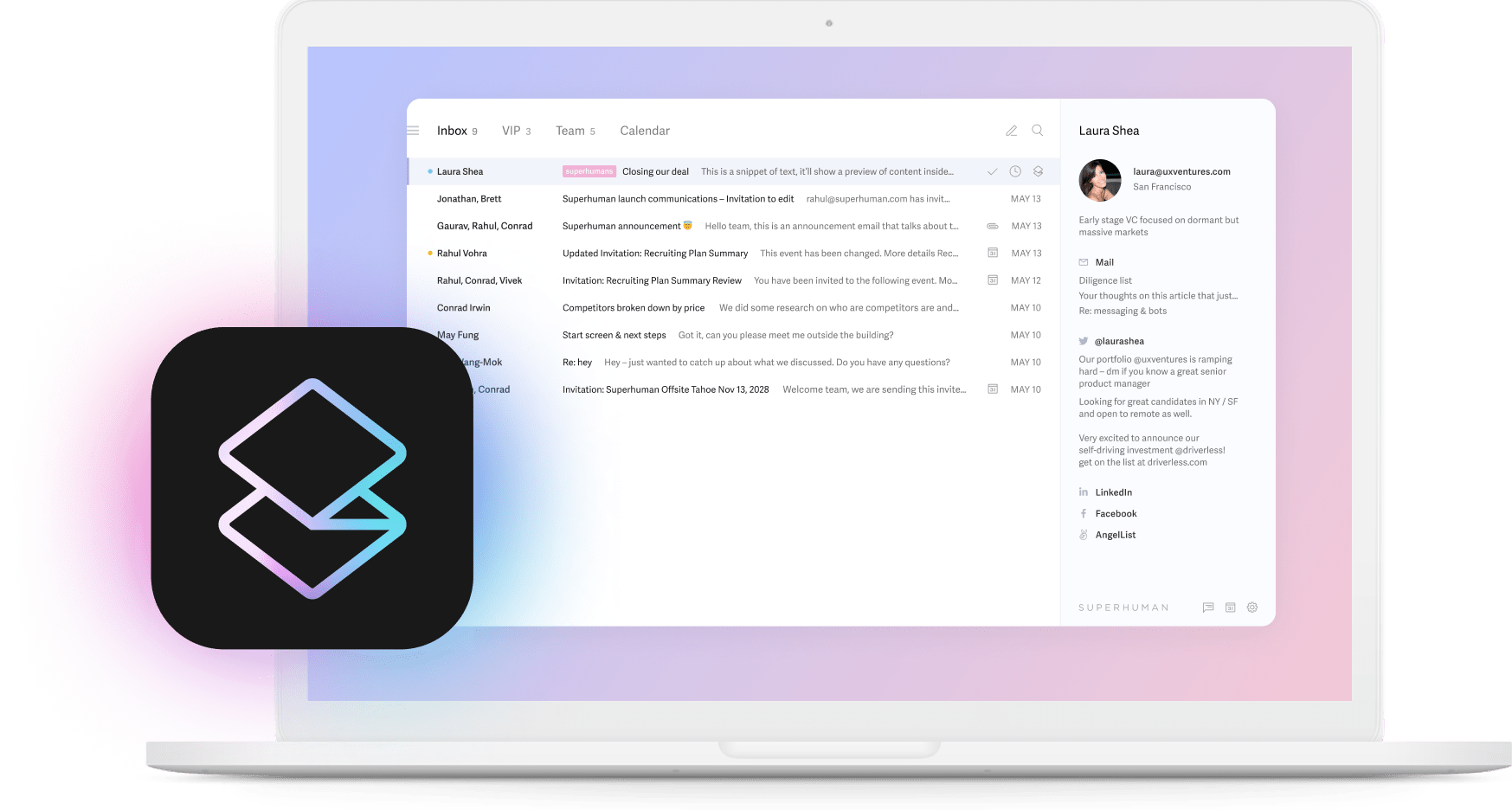

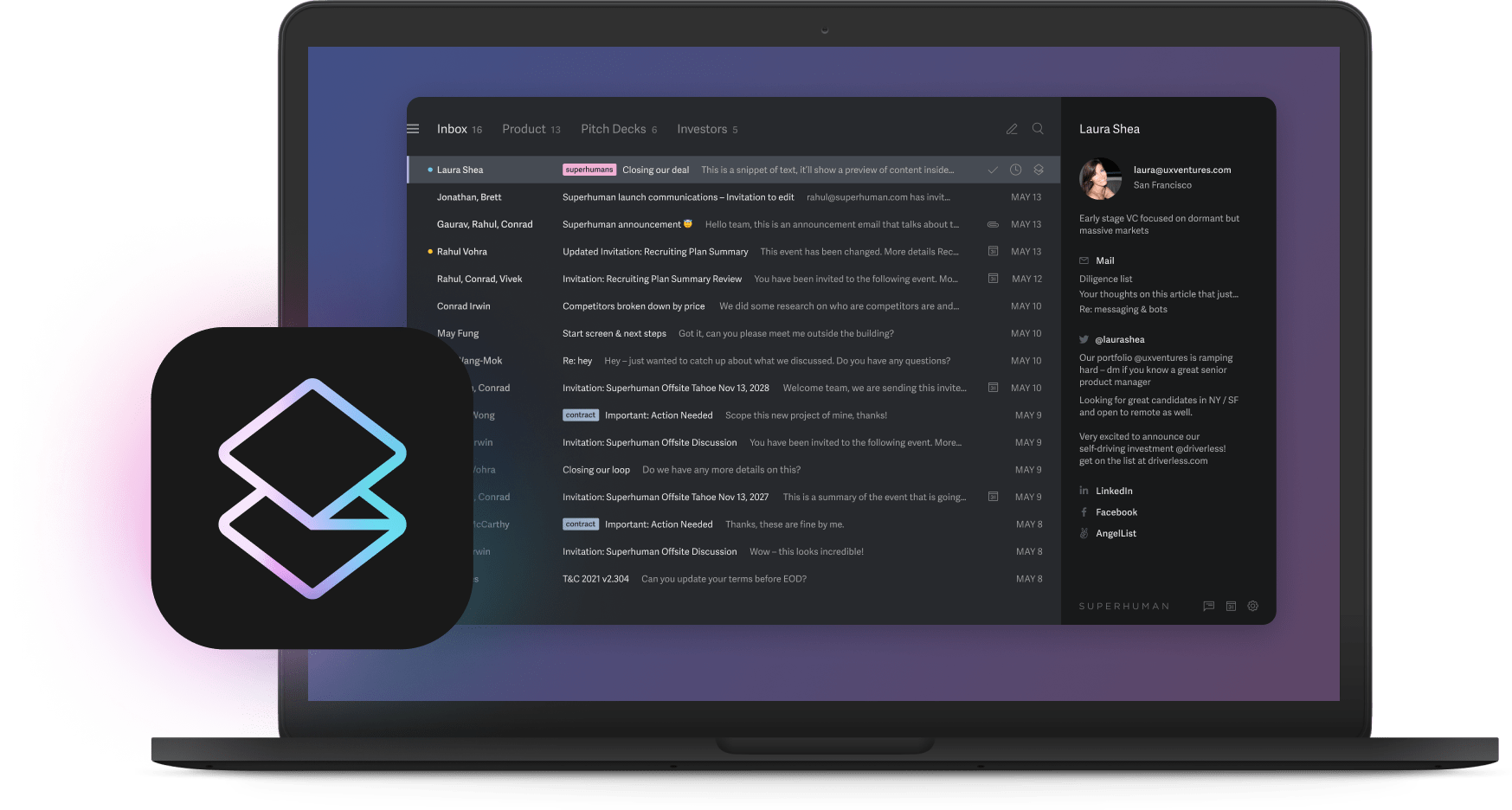

Industry-leading companies are 3x more likely to report significant productivity gains from AI. Superhuman customers are a case in point: those who use AI save 37% more time than those who don't and respond to email twice as fast.

For leaders, sector benchmarks aren't scorecards; they're roadmaps for directing training, automation, and cultural change.

Challenges leaders face in tracking AI adoption

You can pour millions into AI tools and still struggle to prove any return. Five hidden obstacles keep even ambitious programs from showing real numbers.

Common measurement obstacles:

- The measurement paradox: Most companies can't tell if increased spending boosts productivity

- Data fragmentation: Usage information scattered across tool logs, finance systems, and shadow apps

- The adoption-impact gap: Teams have access but actual daily usage stays low

- Cultural resistance: Employees feel under-supported with training and lack necessary skills

- Competitor pressure: Rivals iterate faster while you struggle to measure progress

The measurement paradox hits first. Without clear success metrics, your dashboards stay empty and wins remain just stories people tell.

Data fragmentation compounds the problem. Usage information gets scattered across tool logs, finance systems, and shadow apps your team installed without IT approval. These data silos make it nearly impossible to see who's using what, how often, and whether it's making any difference.

Then comes the adoption-impact gap. Most teams can access AI tools, but actual daily usage stays low. Many departments touch these tools only occasionally, turning your software investment into expensive shelfware.

Tools like Split Inbox in Superhuman help teams prioritize what matters most, ensuring AI features get used consistently rather than forgotten.

Cultural resistance makes everything harder. Some employees feel under-supported by training, and many admit they lack the skills to make artificial intelligence work for them. When projects stall, this skill gap becomes a convenient excuse, and leaders blame technical issues instead of addressing the real problem.

Your rivals iterate on AI strategies faster than you can measure your own progress. Only 39% of C-suite leaders have formal AI benchmarks, so most companies only discover they're falling behind when market share starts slipping.

Solutions: How leaders can effectively track and improve AI adoption

Bottom line: you can't improve what you can't see. Smart investments only pay off when every team's engagement, impact, and risk profile show up in a single, reliable view.

Start by wiring that view together. A unified dashboard pulls daily active users, power-user ratios, feature usage, and business outcomes into one place. Then, rely on AI-native tools that ship with built-in analytics rather than bolted-on reports.

Before scaling, run controlled pilots with clear KPIs. Pick a high-impact workflow, capture baseline cycle time, and set a target improvement, such as a 25% reduction in ticket resolution time or a 30% boost in content output.
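Checking a pilot against its target is simple arithmetic, but the direction matters: for resolution time lower is better, for content output higher is better. A minimal sketch, assuming you log per-ticket resolution times before and during the pilot; the `pct_change` and `meets_target` helpers and the sample hours are hypothetical.

```python
from statistics import median


def pct_change(baseline, current):
    """Relative change from baseline; negative means the value went down."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline


def meets_target(baseline, current, target, lower_is_better):
    """True if the pilot hit its target improvement (e.g. 0.25 for 25%)."""
    change = pct_change(baseline, current)
    improvement = -change if lower_is_better else change
    return improvement >= target


# Hypothetical ticket-resolution times (hours) before and during the pilot
baseline_hours = median([10.0, 12.0, 8.0, 14.0, 9.0])
pilot_hours = median([7.0, 8.5, 6.0, 9.0, 7.5])

print(round(pct_change(baseline_hours, pilot_hours), 3))
# -0.25
print(meets_target(baseline_hours, pilot_hours, target=0.25, lower_is_better=True))
# True
```

Using the median rather than the mean keeps one unusually slow ticket from masking (or faking) an improvement in a small pilot.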

Track results weekly, then roll successful playbooks to adjacent teams. Shared Conversations in Superhuman lets teams collaborate directly on emails, making it easy to track how AI helps teams work together more efficiently.

Implementation roadmap (30-60-90 days):

- Days 1-30: Inventory every tool, light up logging, and publish baseline metrics

- Days 31-60: Launch pilot programs, appoint champions, and embed usage targets in team OKRs

- Days 61-90: Expand dashboard to include ROI metrics and compare adoption velocity to peer benchmarks

Turn your power users into champions. In top-performing technology firms, power users are already natural advocates who spend significant time with AI tools every day. Promote these early adopters, give them measurable coaching objectives, and pair them with lagging departments. Peer coaching beats slide decks every time.

Create a feedback loop between adoption data and enablement. When the dashboard shows low feature engagement, push targeted micro-learning or live workshops instead of generic webinars.

Track adoption velocity, not just adoption rate. Leading organizations refresh AI features twice as fast as the median. Benchmark the time between a new release and meaningful usage; shorten that lag and the productivity gap narrows.
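Adoption lag can be measured as the number of days from release until usage first crosses a threshold you define as "meaningful." A minimal sketch, assuming you have a daily (or weekly) share of eligible users for one feature; the 30% threshold, the `adoption_lag_days` helper, and the sample rollout are hypothetical.

```python
from datetime import date


def adoption_lag_days(release, daily_adoption, threshold):
    """Days from release until adoption first reaches `threshold`.

    daily_adoption maps each date to the share (0-1) of eligible users who
    used the feature that day. Returns None if the threshold is never reached.
    """
    for day in sorted(daily_adoption):
        if day >= release and daily_adoption[day] >= threshold:
            return (day - release).days
    return None


# Hypothetical rollout of one AI feature
release = date(2025, 5, 1)
usage = {
    date(2025, 5, 1): 0.05,
    date(2025, 5, 8): 0.18,
    date(2025, 5, 15): 0.31,
    date(2025, 5, 22): 0.42,
}
print(adoption_lag_days(release, usage, threshold=0.30))  # assumed "meaningful" = 30%
# 14
```

Returning None instead of a large number keeps features that never took off visibly separate from slow-but-successful ones when you compare lags across releases.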

Don't ignore governance. Over half of companies have yet to update security measures for these tools. Bake data-loss prevention, anonymized reporting, and compliance audits into the dashboard from day one. Transparent guardrails protect privacy and build trust, keeping adoption high.
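Anonymized reporting can be built into the pipeline rather than added later. One common approach, sketched here under stated assumptions, is to replace user IDs with keyed HMAC tokens before metrics leave the collection layer and to suppress counts for groups too small to hide individuals. The `pseudonymize` and `department_counts` helpers, the minimum group size of 5, and the hard-coded key are hypothetical; in practice the key would live in a secrets manager.

```python
import hashlib
import hmac


def pseudonymize(user_id: str, key: bytes) -> str:
    """Stable, non-reversible token for a user ID (keyed HMAC-SHA256, truncated)."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def department_counts(events, min_group_size=5):
    """Distinct active users per department; groups below the minimum report None."""
    users = {}
    for token, dept in events:
        users.setdefault(dept, set()).add(token)
    return {d: (len(u) if len(u) >= min_group_size else None) for d, u in users.items()}


KEY = b"replace-with-a-secret-from-your-vault"  # hypothetical; never hard-code in production
raw = [("alice@example.com", "sales")] + [
    (f"user{i}@example.com", "engineering") for i in range(6)
]
events = [(pseudonymize(u, KEY), d) for u, d in raw]
print(department_counts(events))
# {'sales': None, 'engineering': 6}
```

A keyed HMAC rather than a plain hash matters here: without the key, anyone holding a list of employee emails could hash them and re-identify the tokens.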

The strategic value of AI adoption metrics

By 2026, companies with systematic measurement are projected to be significantly more efficient than those still guessing.

Most professionals expect AI to drive at least a 3x increase in productivity within 5 years, but only those who measure will capture that value.

You've already invested in these tools. Now it's time to measure them. What gets measured scales, and what scales wins the game. The best teams with the best tools will lead the revolution in work, and measurement is how you ensure you're one of them.

Ready to see what AI-native email can do for your team's productivity? Start your Superhuman trial today and join the companies saving 4 hours per person every week.